Amazon CloudFront is AWS's Content Delivery Network (CDN) service, designed to deliver data, applications, videos, and APIs to users worldwide with high speed and low latency.

Setting up a basic CloudFront distribution is relatively straightforward, so let's focus on the more interesting aspects: when to use it, why, and how much you pay for it.

What Is Amazon CloudFront?

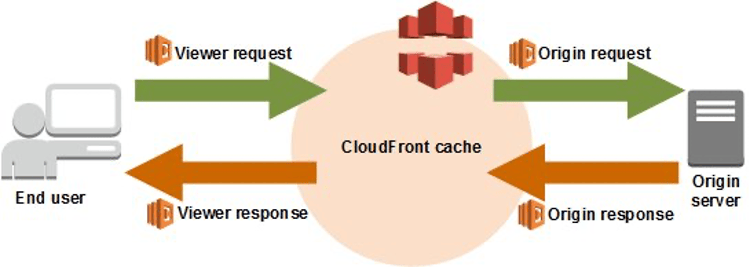

Amazon CloudFront is a global Content Delivery Network (CDN) service that securely delivers data, applications, videos, and APIs to users worldwide with low latency and high transfer speeds. Essentially, it sits between your users and your backend (S3, an Elastic Load Balancer, or really anything reachable over HTTP) and caches responses, so your backend doesn't need to regenerate them for every request.

CloudFront operates in data centers called edge locations, which are like micro AWS regions located all over the world. The whole idea is that CloudFront caches the content at those edge locations, which are much closer to any user in the world than your region. That way you can use us-east-1 for your backend, and your users in Argentina will use the cache in Argentina, while your users in India use the cache in India, saving them the looong trip around the world to reach your servers. Let's dive more into that.

How Does Amazon CloudFront Deliver Content?

CloudFront's content delivery process happens in these steps:

User Request: When a user requests content from CloudFront, DNS routes the request to the edge location that can serve it with the lowest latency, which is usually the nearest one.

Edge Location Check: The edge location checks if the requested content is already cached there.

If cached: CloudFront delivers the content directly to the user.

If not cached: CloudFront forwards the request to the origin server (e.g., S3 bucket, HTTP server, or MediaPackage channel).

Origin Server Response: The origin server sends the content back to the CloudFront edge location.

Content Caching: CloudFront caches the content at the edge location for future requests.

User Delivery: The edge location delivers the content to the user.

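Cache Management: The content stays in the edge location's cache until its TTL expires, as set by the origin's Cache-Control headers within the limits of the distribution's caching settings. Objects that are rarely requested may be evicted earlier to make room for more popular ones.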

CloudFront sitting between end users and servers
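The steps above can be sketched as a toy model of a single edge location. This is a simplified sketch, not how CloudFront is actually implemented: `fake_origin`, the TTL constants, and the rule "use the origin's `max-age`, clamped to a minimum and maximum, otherwise fall back to a default" are assumptions chosen to mirror the minimum/default/maximum TTL settings that CloudFront cache policies expose.

```python
import re
from dataclasses import dataclass
from typing import Callable, Dict, Optional, Tuple

# Hypothetical TTL settings (seconds), modeled on a cache policy's min/default/max TTL
MIN_TTL = 0
DEFAULT_TTL = 86_400
MAX_TTL = 31_536_000


def ttl_from_cache_control(cache_control: Optional[str]) -> int:
    """Use the origin's max-age if present, clamped to [MIN_TTL, MAX_TTL]; else DEFAULT_TTL."""
    if cache_control:
        match = re.search(r"max-age=(\d+)", cache_control)
        if match:
            return max(MIN_TTL, min(int(match.group(1)), MAX_TTL))
    return DEFAULT_TTL


@dataclass
class CachedObject:
    body: bytes
    expires_at: float


class EdgeCache:
    """Toy model of one edge location: serve from cache if fresh, else fetch from the origin."""

    def __init__(self, origin: Callable[[str], Tuple[bytes, Optional[str]]]):
        self.origin = origin
        self.store: Dict[str, CachedObject] = {}
        self.origin_requests = 0

    def get(self, path: str, now: float) -> Tuple[bytes, str]:
        cached = self.store.get(path)
        if cached is not None and now < cached.expires_at:
            return cached.body, "Hit"  # cached and still fresh: serve it directly
        # Not cached (or expired): forward to the origin, cache the response, deliver it
        self.origin_requests += 1
        body, cache_control = self.origin(path)
        self.store[path] = CachedObject(body, now + ttl_from_cache_control(cache_control))
        return body, "Miss"


def fake_origin(path: str) -> Tuple[bytes, Optional[str]]:
    """Hypothetical origin that asks for 60 seconds of caching."""
    return f"content of {path}".encode(), "public, max-age=60"


edge = EdgeCache(fake_origin)
print(edge.get("/index.html", now=0)[1])   # Miss: first request goes to the origin
print(edge.get("/index.html", now=30)[1])  # Hit: still within the 60-second TTL
print(edge.get("/index.html", now=61)[1])  # Miss: TTL expired, fetched again
```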

CloudFront Pricing Structure

CloudFront's pricing is based on three main components:

Data Transfer Out to Internet: The cost of delivering content from CloudFront to users, varying by region and data volume.

HTTP/HTTPS Requests: Charges for the number of requests made by users, differing by request type (HTTP or HTTPS) and serving region.

Data Transfer Out to Origin: Charges for data transferred from CloudFront edge locations back to non-AWS origin servers.

AWS offers a free tier for CloudFront, which includes the first 1 TB of data transfer out per month and the first 10,000,000 HTTP or HTTPS requests per month.

CloudFront Price Classes

CloudFront offers three price classes, allowing you to optimize costs based on your geographic needs:

Price Class All: Includes all CloudFront edge locations worldwide.

Price Class 200: Includes most regions except South America and Australia/New Zealand.

Price Class 100: Includes only North America, Europe, and Israel.

The price class doesn't directly determine the cost; instead, it determines which edge locations can be used to serve your content. If you pick Price Class All, all edge locations are eligible, but if 100% of your users are in North America, only the North America edge locations will actually be used. In that case, changing the price class won't make a difference to how much you pay.

Keep in mind that choosing a more restrictive price class can lower costs but may impact performance for users in excluded regions.
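The point about price classes can be made concrete with a small model. The region groupings below simplify the three classes described above, with "Other" as a hypothetical catch-all for everything not named explicitly (such as Asia, the Middle East, and Africa). `PriceClass_100`, `PriceClass_200`, and `PriceClass_All` are the values CloudFront's API uses for the price class setting. Users outside the selected class are still served, just from edge locations in an included region, which is where the extra latency comes from.

```python
# Simplified mapping of each price class to the broad regions whose edge locations it uses.
# "Other" is a hypothetical catch-all for regions not named in the price class descriptions.
PRICE_CLASS_REGIONS = {
    "PriceClass_100": {"North America", "Europe", "Israel"},
    "PriceClass_200": {"North America", "Europe", "Israel", "Other"},
    "PriceClass_All": {
        "North America", "Europe", "Israel", "Other",
        "South America", "Australia/New Zealand",
    },
}


def served_from_local_edge(price_class: str, user_region: str) -> bool:
    """True if edge locations in the user's own region are eligible under this price class."""
    return user_region in PRICE_CLASS_REGIONS[price_class]


def regions_actually_used(price_class: str, user_regions: list) -> set:
    """Regions whose edge locations serve traffic: eligible under the class AND where users are."""
    return PRICE_CLASS_REGIONS[price_class] & set(user_regions)


# 100% of users in North America: both classes end up using the same edge locations
print(regions_actually_used("PriceClass_All", ["North America"]))
print(regions_actually_used("PriceClass_100", ["North America"]))
# Users in South America are not served from a local edge under Price Class 100
print(served_from_local_edge("PriceClass_100", "South America"))  # False
```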

Detailed Pricing Example

Let's consider a more realistic example of CloudFront pricing:

Application hosted in US East (N. Virginia)

Global user base

Monthly usage:

North America: 500 GB data transfer, 1,000,000 HTTPS requests

Europe: 300 GB data transfer, 600,000 HTTPS requests

Asia: 200 GB data transfer, 400,000 HTTPS requests

South America: 100 GB data transfer, 200,000 HTTPS requests

Here's how much you'd pay (excluding the free tier):

North America:

Data transfer: 500 GB * $0.085/GB = $42.50

HTTPS requests: 1,000,000 * $0.0100/10,000 = $1.00

Subtotal: $43.50

Europe:

Data transfer: 300 GB * $0.085/GB = $25.50

HTTPS requests: 600,000 * $0.0100/10,000 = $0.60

Subtotal: $26.10

Asia:

Data transfer: 200 GB * $0.140/GB = $28.00

HTTPS requests: 400,000 * $0.0140/10,000 = $0.56

Subtotal: $28.56

South America:

Data transfer: 100 GB * $0.110/GB = $11.00

HTTPS requests: 200,000 * $0.0220/10,000 = $0.44

Subtotal: $11.44

Total monthly cost: $109.60
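The arithmetic above is easy to script, which helps when you want to try other traffic mixes. This is a minimal sketch that reuses the flat per-region rates from the example (the example's numbers, not necessarily current prices) and ignores the free tier and tiered volume discounts. `Decimal` avoids floating-point rounding in the dollar amounts.

```python
from decimal import Decimal

# (region, GB transferred out, HTTPS requests, $ per GB, $ per 10,000 HTTPS requests)
# Rates are the ones used in the example above, not an authoritative price list.
USAGE = [
    ("North America", 500, 1_000_000, Decimal("0.085"), Decimal("0.0100")),
    ("Europe", 300, 600_000, Decimal("0.085"), Decimal("0.0100")),
    ("Asia", 200, 400_000, Decimal("0.140"), Decimal("0.0140")),
    ("South America", 100, 200_000, Decimal("0.110"), Decimal("0.0220")),
]


def monthly_cost(usage):
    """Return per-region subtotals and the total, using flat per-region rates."""
    subtotals = {}
    for region, gb, requests, per_gb, per_10k_requests in usage:
        transfer_cost = gb * per_gb
        request_cost = Decimal(requests) / 10_000 * per_10k_requests
        subtotals[region] = transfer_cost + request_cost
    return subtotals, sum(subtotals.values(), Decimal("0"))


subtotals, total = monthly_cost(USAGE)
for region, cost in subtotals.items():
    print(f"{region}: ${cost:.2f}")
print(f"Total monthly cost: ${total:.2f}")
```

Summing the inputs gives 1,100 GB and 2,200,000 requests, so in practice the free tier (1 TB of data transfer and 10,000,000 requests per month) would absorb most of this bill.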

CloudFront in AWS Architectures: Use Cases, Benefits, and Considerations

CloudFront does a bit more than just caching, though. Since all traffic passes through CloudFront anyway, it's a good place for other things you want sitting between the user and the server on every request. Here are a few use cases.

Static Website Hosting

One of the most obvious ways to use CloudFront is with Amazon S3 for static website hosting. So long as you don't need server-side processing, you can put your static files in S3, and serve them through CloudFront.

You'll need:

S3 bucket: Stores your static content (HTML, CSS, JavaScript, images)

CloudFront distribution: Serves as the content delivery layer

Route 53: Manages DNS (optional, but recommended for custom domain names)

In this setup, CloudFront acts as a caching layer and content delivery network for your S3-hosted website. Here's why this is my default for any static website:

Improved Performance: CloudFront's global edge network reduces latency for users worldwide.

Cost Optimization: By caching content at edge locations, CloudFront reduces the number of requests to your S3 bucket, potentially lowering S3 request costs.

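SSL/TLS Support: CloudFront integrates with AWS Certificate Manager (ACM), which provides free public SSL/TLS certificates, enabling HTTPS for your static website. To use an ACM certificate with CloudFront, request or import it in the US East (N. Virginia) region.

Custom Domain Support: You can serve the site from your own domain name by adding it as an alternate domain name on the distribution and pointing a Route 53 alias record at the distribution.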

API Acceleration

This is the other obvious use case. By caching API responses at edge locations, you can reduce latency and offload traffic from your backend servers.

You'll need:

API Gateway: Manages your API

Lambda or EC2: Hosts your code, which handles API logic

CloudFront: Serves as a caching layer

Route 53: Again, manages DNS

And you get:

Reduced Latency: API responses are cached closer to end-users.

Decreased Backend Load: Caching at the edge reduces the number of requests hitting your backend.

DDoS Protection: Every CloudFront distribution is covered by AWS Shield Standard at no extra cost, and you can subscribe to AWS Shield Advanced for additional DDoS protection.

Web Application Firewall: You can also use AWS Web Application Firewall (WAF) with CloudFront, to scan every request and block malicious ones.

A few things to keep in mind:

Cache-Control Headers: Properly set cache headers to control how long API responses are cached.

API Versioning: Put the version in the path (for example, /v1/), so a new API version gets new cache keys instead of forcing you to invalidate cached responses.

Dynamic Content: Use Lambda@Edge for request manipulation or authorization at the edge.
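Setting Cache-Control is usually done in the backend handler itself. Below is a minimal sketch of a Lambda function behind an API Gateway REST API using Lambda proxy integration (the event's `path` field and the `statusCode`/`headers`/`body` response shape belong to that integration). The routes, payloads, and TTLs are hypothetical; the `/v1/` prefix reflects the versioning advice above.

```python
import json


def _response(status, payload, cache_control):
    """Build a Lambda proxy integration response with an explicit Cache-Control header."""
    return {
        "statusCode": status,
        "headers": {
            "Content-Type": "application/json",
            "Cache-Control": cache_control,
        },
        "body": json.dumps(payload),
    }


def handler(event, context):
    path = event.get("path", "")
    if path == "/v1/products":
        # Shared, rarely changing data: let CloudFront (and browsers) cache it for 5 minutes
        return _response(200, {"products": ["example-product"]}, "public, max-age=300")
    if path == "/v1/cart":
        # Per-user data: ask caches not to store it (CloudFront honors this when the
        # cache policy's minimum TTL is 0)
        return _response(200, {"items": []}, "private, no-store")
    return _response(404, {"error": "not found"}, "no-store")


print(handler({"path": "/v1/products"}, None)["headers"]["Cache-Control"])
```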

Multi-Origin Architecture

CloudFront supports multiple origins, allowing you to route different types of content to different backend services. This is particularly useful for complex applications that combine static assets, dynamic content, and API calls.

Now, that sounds really boring, since you can get much the same result by giving each backend its own subdomain in Route 53. But it also works very well for applications requiring high availability across regions, since CloudFront can be configured with origin groups to provide automatic failover.
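Routing different content to different origins is done with cache behaviors: each behavior pairs a path pattern with a target origin, CloudFront evaluates them in order, and requests that match none of them fall through to the default behavior. Here is a rough model of that lookup, with hypothetical patterns and origin IDs. Python's `fnmatchcase` stands in for CloudFront's `*` and `?` wildcards (it also accepts `[...]` sets, which CloudFront doesn't), and like CloudFront path patterns it is case-sensitive.

```python
from fnmatch import fnmatchcase

# Hypothetical cache behaviors in precedence order: (path pattern, origin ID)
CACHE_BEHAVIORS = [
    ("/api/*", "api-gateway-origin"),
    ("/images/*", "s3-assets-origin"),
]
DEFAULT_ORIGIN = "s3-website-origin"  # the default (*) behavior


def pick_origin(path: str) -> str:
    """Return the origin of the first behavior whose pattern matches the request path."""
    for pattern, origin_id in CACHE_BEHAVIORS:
        if fnmatchcase(path, pattern):  # case-sensitive, like CloudFront path patterns
            return origin_id
    return DEFAULT_ORIGIN


for path in ["/api/users/42", "/images/logo.png", "/index.html", "/API/users"]:
    print(path, "->", pick_origin(path))
```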

You'll need:

Primary Region: EC2 or ECS hosting your application

Secondary Region: Replica of your application for failover

CloudFront: Distributes traffic and handles failover

Route 53: Manages DNS

You get:

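Automatic Failover: CloudFront fails over to the secondary region if the primary returns an error or can't be reached

Global Performance: If you run both regions active-active and point CloudFront at a Route 53 latency-based record, users are routed to the nearest healthy region (this is optional; you can also serve from one region and keep the other purely as failover)

Simplified Management: Failover logic is handled at the CloudFront level and decided per request, so it doesn't wait for DNS caches to expire. It's easier to do it there than at Route 53, trust me.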
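The failover behavior of an origin group can be modeled in a few lines: CloudFront sends the request to the primary origin and retries it against the secondary only when the primary returns one of the status codes configured for failover, or when the connection fails. This is a simplified sketch with hypothetical origin functions and an assumed set of failover codes; the real feature also only applies to GET, HEAD, and OPTIONS requests.

```python
from typing import Callable, Tuple

Origin = Callable[[str], Tuple[int, str]]

# Assumption: the status codes this origin group is configured to fail over on
FAILOVER_STATUS_CODES = {500, 502, 503, 504}


def fetch_with_failover(path: str, primary: Origin, secondary: Origin,
                        failover_codes=FAILOVER_STATUS_CODES) -> Tuple[int, str, str]:
    """Try the primary origin; use the secondary on a failover status code or connection error."""
    try:
        status, body = primary(path)
        if status not in failover_codes:
            return status, body, "primary"
    except ConnectionError:
        pass  # unreachable primary: fall through to the secondary
    status, body = secondary(path)
    return status, body, "secondary"


# Hypothetical origins standing in for the two regions
def us_east_1(path):
    return 200, f"{path} served from us-east-1"


def us_east_1_down(path):
    return 503, "Service Unavailable"


def us_east_1_unreachable(path):
    raise ConnectionError("connection timed out")


def us_west_2(path):
    return 200, f"{path} served from us-west-2"


for primary in (us_east_1, us_east_1_down, us_east_1_unreachable):
    print(fetch_with_failover("/checkout", primary, us_west_2))
```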

Architectural Considerations When Implementing CloudFront

Cache Strategy

This is a lot less simple than it looks. A few things to keep in mind:

TTL (Time to Live) settings for different types of content

Cache-Control headers from your origin

Query string and cookie forwarding

Cache key composition

Whether you actually need CloudFront to cache the content
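Cache key composition is the item on that list that most often hurts hit ratios. The sketch below is a simplified model (not CloudFront's actual key format) of what happens when every query string parameter is part of the cache key versus only an allowlisted one; the URLs and the `id` parameter are hypothetical. Headers and cookies included in the cache key fragment the cache in the same way.

```python
from typing import Iterable, Optional
from urllib.parse import parse_qsl, urlencode, urlsplit


def cache_key(url: str, included_params: Optional[Iterable[str]] = None) -> str:
    """Path plus query parameters; included_params=None means every parameter is in the key."""
    parts = urlsplit(url)
    allowed = None if included_params is None else set(included_params)
    params = [
        (name, value)
        for name, value in parse_qsl(parts.query, keep_blank_values=True)
        if allowed is None or name in allowed
    ]
    return parts.path + ("?" + urlencode(params) if params else "")


urls = [
    "/products?id=1&utm_source=newsletter",
    "/products?id=1&utm_source=ads",
    "/products?id=1",
]
print(len({cache_key(u) for u in urls}))          # 3 separate cache entries
print(len({cache_key(u, ["id"]) for u in urls}))  # 1 shared cache entry
```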

Security

A few things you can do with CloudFront to improve your security posture:

Use Origin Access Control (OAC) for S3 origins, so only your CloudFront distribution can access your S3 bucket

Implement AWS WAF rules to protect against common web exploits

Configure SSL/TLS certificates for HTTPS

Use signed URLs or signed cookies for restricted content
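For the OAC item, the S3 bucket policy grants read access to the CloudFront service principal, but only on behalf of one specific distribution. The sketch below builds that policy in the shape AWS documents for OAC; the bucket name, account ID, and distribution ID are placeholders. You could save the output to a file and apply it with `aws s3api put-bucket-policy --bucket my-static-site --policy file://policy.json`.

```python
import json


def oac_bucket_policy(bucket_name: str, account_id: str, distribution_id: str) -> dict:
    """Bucket policy letting only the given CloudFront distribution read objects via OAC."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "AllowCloudFrontServicePrincipalReadOnly",
                "Effect": "Allow",
                "Principal": {"Service": "cloudfront.amazonaws.com"},
                "Action": "s3:GetObject",
                "Resource": f"arn:aws:s3:::{bucket_name}/*",
                # Without this condition, any distribution could read the bucket
                "Condition": {
                    "StringEquals": {
                        "AWS:SourceArn": f"arn:aws:cloudfront::{account_id}:distribution/{distribution_id}"
                    }
                },
            }
        ],
    }


# Placeholder values for illustration only
print(json.dumps(oac_bucket_policy("my-static-site", "111122223333", "EDFDVBD6EXAMPLE"), indent=2))
```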

Monitoring and Optimization

You can't fine-tune what you can't see. Here's how to get some visibility into CloudFront:

Use CloudWatch metrics to track requests and error rates; cache hit rate and origin latency are additional metrics you need to enable on the distribution, at extra cost

Analyze CloudFront logs for detailed request information

Set up alarms for unusual patterns or errors
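Cache hit ratio is the number worth watching first. If you've already extracted the `x-edge-result-type` field from CloudFront's standard logs (values such as `Hit`, `RefreshHit`, `Miss`, and `Error`), computing the ratio is a simple count. Treating `RefreshHit` (an expired object that CloudFront revalidated with the origin) as a hit, and the 80% alarm threshold, are assumptions for this sketch.

```python
from collections import Counter
from typing import Iterable

HIT_TYPES = {"Hit", "RefreshHit"}  # assumption: revalidated objects count as hits


def cache_hit_ratio(result_types: Iterable[str]) -> float:
    """Share of requests served from the edge cache, given x-edge-result-type values."""
    counts = Counter(result_types)
    total = sum(counts.values())
    if total == 0:
        return 0.0
    return sum(counts[t] for t in HIT_TYPES) / total


def should_alarm(ratio: float, threshold: float = 0.8) -> bool:
    """Hypothetical alarm rule: flag the distribution when the hit ratio drops below threshold."""
    return ratio < threshold


sample = ["Hit", "Hit", "Miss", "RefreshHit", "Error"]
ratio = cache_hit_ratio(sample)
print(f"hit ratio {ratio:.0%}, alarm: {should_alarm(ratio)}")
```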

Cost Management

How to pay less:

Choose appropriate price classes based on your audience

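Monitor data transfer, which is usually the biggest line item (as in the pricing example above), and reduce the bytes you send where you can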
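Compression is a concrete way to shrink the bytes you pay to transfer: CloudFront can compress objects automatically (Gzip and Brotli) when the viewer supports it. This rough sketch uses Python's `gzip` module on a hypothetical, highly repetitive HTML payload and prices both versions at the example's North America rate of $0.085/GB, treating 1 GB as 10^9 bytes for simplicity. Real savings depend on how compressible your content is.

```python
import gzip

PRICE_PER_GB = 0.085  # North America rate from the pricing example above


def transfer_cost(bytes_per_response: int, responses: int, price_per_gb: float = PRICE_PER_GB) -> float:
    """Dollar cost of sending `responses` copies of a response, treating 1 GB as 10**9 bytes."""
    return bytes_per_response * responses / 1_000_000_000 * price_per_gb


# Hypothetical, highly repetitive HTML page (real pages compress less dramatically)
html = ("<div class='product'>Example product card</div>\n" * 2000).encode()
compressed = gzip.compress(html)
responses = 1_000_000

print(f"uncompressed: {len(html):,} bytes -> ${transfer_cost(len(html), responses):.2f}")
print(f"gzip:         {len(compressed):,} bytes -> ${transfer_cost(len(compressed), responses):.2f}")
```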

Conclusion

Use CloudFront when you need at least one (any one) of these:

Caching

AWS WAF or AWS Shield

Rewriting requests or query strings at the edge (for example, with Lambda@Edge)

SSL certificate for a static website on an S3 bucket

Yes, use CloudFront even if you don't need caching. That's the conclusion.