Understanding How DynamoDB Scales

As you probably know, DynamoDB is a NoSQL database. It's a managed, serverless service, meaning you just create a Table (that's the equivalent of a Database in Postgres), and AWS manages the underlying nodes. It's highly available, meaning nodes are distributed across 3 AZs, so the loss of an AZ doesn't bring down the service. The nodes aren't like an RDS Failover Replica, though. Instead, data is partitioned (that's why Dynamo has a Partition Key!) and split across nodes, plus replicated on other nodes for availability and resilience. That means DynamoDB can scale horizontally!

There are two modes for DynamoDB, which affect how it scales and how you're billed:

DynamoDB Provisioned Mode

You define some capacity, and DynamoDB provisions that capacity for you. This is pretty similar to provisioning an Auto Scaling Group of EC2 instances, but imagine the size of the instance is fixed, and it's one group for reads and another one for writes. Here's how that capacity translates into actual read and write operations.

Capacity in Provisioned Mode

Capacity is provisioned separately for reads and writes, and it's measured in Capacity Units.

1 Read Capacity Unit (RCU) is equivalent to 1 strongly consistent read of up to 4 KB, per second. Eventually consistent reads consume half that capacity. Reads over 4 KB consume 1 RCU (1/2 for eventually consistent) per 4 KB, rounded up. That means if you have 5 RCUs, you can perform 10 eventually consistent reads every second, or 2 strongly consistent reads for 7 KB of data each (remember it's rounded up) plus 1 strongly consistent read for 1 KB of data (again, it's rounded up).

Write Capacity Units (WCU) work the same, but for writes. 1 WCU = 1 write per second, of up to 1 KB. So, with 5 WCUs, you can perform 1 write operation per second of 4.5 KB, or 5 writes of less than 1 KB.

Remember that all operations inside a transaction consume twice the capacity, because DynamoDB uses two-phase commit for transactions. Every node has to simulate the operation and then actually perform it, so it's twice the work.
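To make the rounding rules concrete, here's a minimal Python sketch of the arithmetic described so far. The function names are my own (they aren't part of any AWS SDK), and I'm assuming a transactional read is billed as twice a strongly consistent read of the same size.

```python
import math

READ_UNIT_KB = 4   # one RCU covers a read of up to 4 KB
WRITE_UNIT_KB = 1  # one WCU covers a write of up to 1 KB


def read_capacity_units(size_kb, strongly_consistent=True, transactional=False):
    """RCUs consumed by a single read of size_kb kilobytes."""
    units = max(1, math.ceil(size_kb / READ_UNIT_KB))  # rounded up per 4 KB
    if transactional:
        return units * 2  # assumption: twice the strongly consistent cost
    if strongly_consistent:
        return units
    return units / 2  # eventually consistent reads cost half


def write_capacity_units(size_kb, transactional=False):
    """WCUs consumed by a single write of size_kb kilobytes."""
    units = max(1, math.ceil(size_kb / WRITE_UNIT_KB))  # rounded up per 1 KB
    return units * 2 if transactional else units


# The examples from the text: both fit exactly in 5 units per second.
reads = 2 * read_capacity_units(7) + read_capacity_units(1)
writes = write_capacity_units(4.5)
print(reads, writes)  # 5 5
```

Keeping the rounding in one place makes it easy to check how much capacity a given item size really costs before you pick a number for the table.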

Also remember that local secondary indexes (LSIs) make each write consume additional capacity: 1 extra write operation for puts or deletes, 2 extra operations per update, with actual WCUs depending on how much data is written on the index (not the base table). Reads on an LSI that query for attributes that aren't projected on the LSI also consume additional read capacity: 1 additional read operation (RCUs for it depend on consistency and size of the data read) for each item that is read from the base table.

If you exceed the capacity (e.g. you have 5 RCUs and in one second you try to do 6 strongly consistent reads), you receive a ProvisionedThroughputExceededException. Your code should catch this and retry. DynamoDB doesn't overload from this, it'll just keep accepting operations up to your capacity and reject the rest (this is called load shedding btw). The AWS SDK already implements retries with exponential backoff, and you can tune the parameters. You can also use SQS to throttle DynamoDB operations.
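The AWS SDK already does this for you, but the idea behind retrying with exponential backoff is simple enough to sketch. This is only an illustration: `ThrottledError` is a hypothetical stand-in for ProvisionedThroughputExceededException, and `call_with_backoff` and its parameters are names I made up, not SDK settings.

```python
import random
import time


class ThrottledError(Exception):
    """Hypothetical stand-in for ProvisionedThroughputExceededException."""


def call_with_backoff(operation, max_attempts=5, base_delay=0.05,
                      max_delay=2.0, sleep=time.sleep):
    """Call operation(), retrying throttled calls with jittered backoff."""
    for attempt in range(max_attempts):
        try:
            return operation()
        except ThrottledError:
            if attempt == max_attempts - 1:
                raise  # out of attempts: let the caller decide what to do
            # The cap doubles on every attempt, up to max_delay.
            cap = min(max_delay, base_delay * 2 ** attempt)
            # Random jitter keeps many clients from retrying in lockstep.
            sleep(random.uniform(0, cap))
```

The `sleep` parameter is there only so the waiting can be swapped out (for example in tests). In real code you'd normally tune the SDK's own retry settings rather than write this yourself.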

Tokens, Burst Capacity and Adaptive Capacity

Under the hood, DynamoDB splits the data across several partitions, and capacity is split evenly across those partitions. So, if you set 30 RCUs for a table and it has 3 partitions, each partition gets 10 RCUs. Each partition has a "token bucket", which refills at a rate of 1 token per second per RCU (so 10 tokens per second in this case). Each read (strongly consistent, up to 4 KB) consumes 1 token, and if there are no more tokens, you get a ProvisionedThroughputExceededException.

There are two separate buckets, one for reads and one for writes. They both work exactly the same. The only differences are the operations that consume those tokens and the size of the data (4 KB for reads, 1 KB for writes). I'll talk about RCUs, but the same is true for WCUs.

The token bucket has a maximum capacity of 300 * RCUs. For our example of 10 RCUs per partition (remember that each partition has its own bucket), it has a maximum capacity of 3000 tokens, refilling at 10 tokens per second. That means, with no operations going on, it takes 5 minutes to fill up to capacity.

If there's a sudden spike in traffic, these extra tokens that have been piling up will be used to execute those operations, effectively increasing the partition's capacity temporarily. For example, if every user performs 1 strongly consistent read per second on this partition, your RCUs of 10 per partition would serve 10 users. Suppose you don't have users for 5 minutes, the bucket fills up. Then, 20 users come in all of a sudden, making 20 reads per second on this partition. Thanks to those stored tokens, the partition can sustain those 20 reads per second for 5 minutes, even though the partition's RCUs are 10. AWS doesn't handle huge spikes instantaneously (e.g. it won't serve 3000 reads in a second, even if you do have 3000 tokens), but it scales this over a few seconds. This is called Burst Capacity, and it's completely separate from Auto Scaling (and will happen even with Auto Scaling disabled).
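If you want to play with these numbers, here's a toy simulation of a single partition's read bucket, ticking once per second. It's a simplified model under my own assumptions (whole-second steps, serve first and refill after), not how DynamoDB is actually implemented, and all the names are made up for the example.

```python
class TokenBucket:
    """Toy model of one partition's read bucket (not DynamoDB's real code)."""

    def __init__(self, rcus, burst_seconds=300, start_full=True):
        self.rate = rcus                      # tokens added per second
        self.capacity = rcus * burst_seconds  # 300 * RCUs, as in the text
        self.tokens = self.capacity if start_full else 0

    def tick(self, requested_reads):
        """Serve one second of traffic, then refill. Returns reads served."""
        served = min(requested_reads, self.tokens)
        self.tokens = min(self.capacity, self.tokens - served + self.rate)
        return served


def seconds_fully_served(bucket, reads_per_second, limit=100_000):
    """Count seconds until the first throttled read (None if never)."""
    for second in range(limit):
        if bucket.tick(reads_per_second) < reads_per_second:
            return second
    return None


def seconds_to_fill(bucket):
    """Count idle seconds until the bucket is full."""
    seconds = 0
    while bucket.tokens < bucket.capacity:
        bucket.tick(0)
        seconds += 1
    return seconds


print(seconds_to_fill(TokenBucket(10, start_full=False)))
print(seconds_fully_served(TokenBucket(10), reads_per_second=20))
```

With 10 RCUs, an empty bucket takes 300 idle seconds to fill, and a full bucket absorbs 20 reads per second for roughly 300 seconds before the first throttle, which matches the 5 minutes in the example.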

Another thing that happens with uneven load on partitions is Adaptive Capacity. RCUs are split evenly across partitions, so each of our 3 partitions will have 10 RCUs. If partition 1 is the one getting these 20 users, and the others are getting 0, then AWS can assign part of those 20 spare RCUs you have (remember that we set 30 RCUs on the table) to the partition that's handling that load. The maximum RCUs a partition can get is 1.5x the RCUs it normally gets, so in this case it would get 15 RCUs. That means 5 of our 20 spare RCUs are assigned to that partition, and the other 15 are unused capacity. That would let our partition handle those 20 users for 10 minutes instead of 5 (assuming a full token bucket). This also happens separately from Auto Scaling.
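The 10 minutes figure comes from simple arithmetic: the bucket only drains at the difference between what's requested and what's refilled. Here's a small sketch of that calculation, assuming the 1.5x multiplier described above (the function names are hypothetical):

```python
def boosted_partition_rcus(table_rcus, partitions, multiplier=1.5):
    """RCUs of one hot partition after the adaptive boost described above."""
    even_share = table_rcus / partitions
    return even_share * multiplier


def burst_duration_seconds(bucket_tokens, refill_per_second, demand_per_second):
    """How long a full bucket lasts when demand exceeds the refill rate."""
    drain = demand_per_second - refill_per_second
    if drain <= 0:
        return float("inf")  # the bucket never empties
    return bucket_tokens / drain


normal = burst_duration_seconds(3000, 10, 20)  # plain Burst Capacity
boosted = burst_duration_seconds(3000, boosted_partition_rcus(30, 3), 20)
print(normal / 60, boosted / 60)  # 5.0 10.0
```

I'm keeping the bucket at 3000 tokens in both cases, as the example in the text does.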

Burst Capacity doesn't effectively change RCUs, but it can make our table temporarily behave as if it had more RCUs than it really does, thanks to those stored tokens (very similar to how CPU credits work for EC2 burstable instances). It's great for performance, but I wouldn't count it as scaling (in case you forgot, we're talking about scaling DynamoDB).

Adaptive Capacity can actually increase RCUs beyond what's set for the table. If all partitions are getting requests throttled, Adaptive Capacity will increase their RCUs up to the 1.5 multiplier, even if this puts the total RCUs of the table above the value you set. This will only last for a few seconds, after which it goes back to the normal RCUs, and to throttling requests. I guess that technically counts as scaling the table's RCUs? Yeah, I'll count that as a win for me. Let's get to the real scaling though.

Scaling in Provisioned Mode

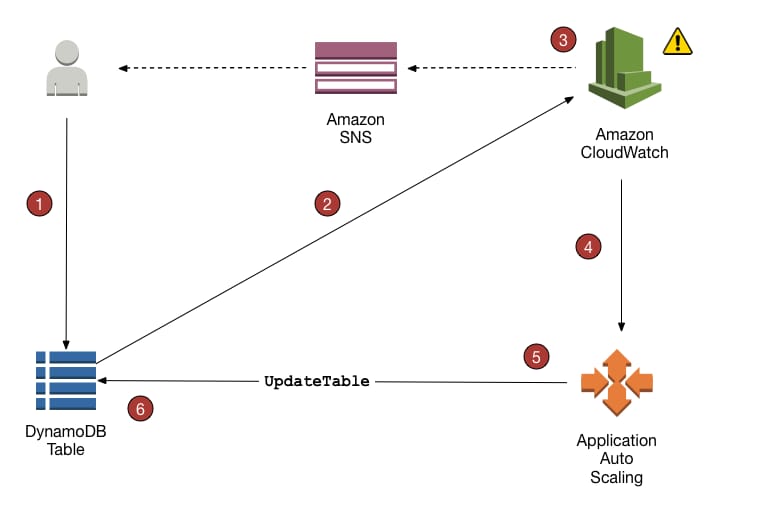

This is the real scaling. DynamoDB tables continuously send metrics to CloudWatch, CloudWatch triggers alarms when those metrics cross a certain threshold, DynamoDB gets notified about that and modifies Capacity Units accordingly.

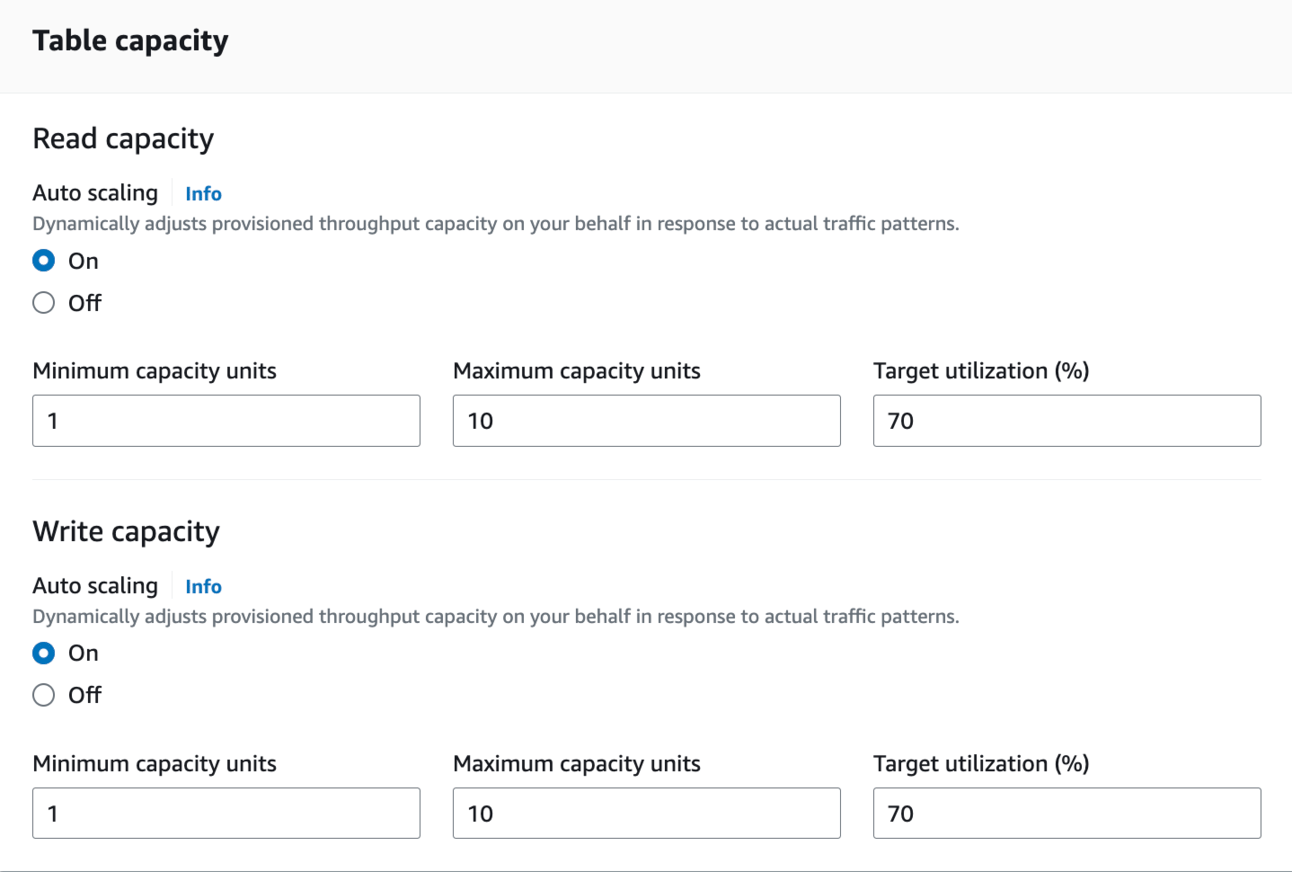

On DynamoDB you enable Auto Scaling, set minimum and maximum capacity units, and set a target utilization (%). You can enable scaling separately for Reads and Writes.

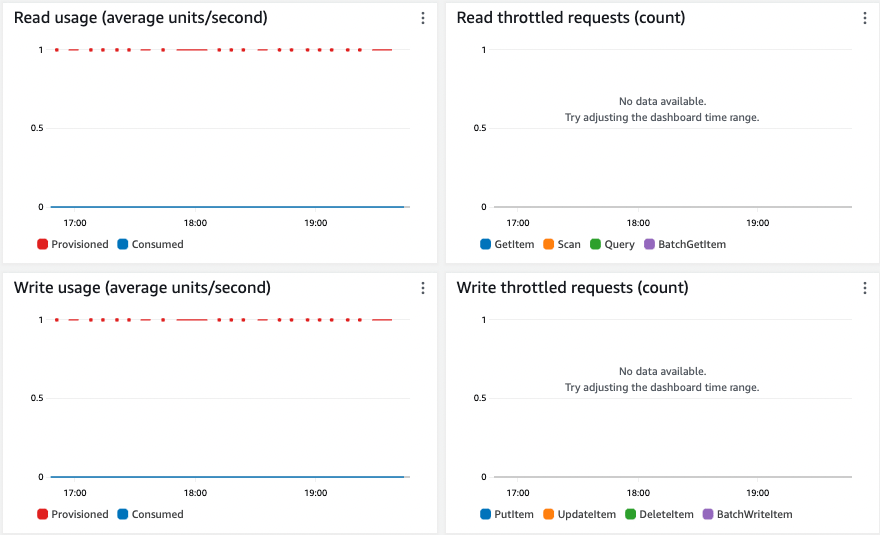

In the table metrics (handled by CloudWatch) you can view provisioned and consumed capacity, and throttled request count.

Here's the problem though: Auto Scaling is based on CloudWatch Alarms that trigger when the metric is above/below the threshold in at least 3 data points for 5 minutes. So, not only does Auto Scaling not respond fast enough for sudden spikes, it doesn't respond at all if the spikes last less than 5 minutes. That's why the default threshold is 70%, and allowed values are between 20% and 90%: You need to leave some margin for traffic to continue growing while Auto Scaling takes its sweet time to figure out it should scale.
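To see what the target utilization means in numbers, here's a rough sketch of the calculation: provision enough capacity that current consumption sits at the target percentage, within your minimum and maximum. This is my simplification of target tracking, not the actual Auto Scaling algorithm, and `desired_capacity` is a made-up name.

```python
import math


def desired_capacity(consumed_units, target_percent, minimum, maximum):
    """Capacity that puts consumed_units at target_percent utilization."""
    if not 20 <= target_percent <= 90:
        raise ValueError("target utilization must be between 20 and 90")
    # consumed / provisioned = target / 100  ->  solve for provisioned
    wanted = math.ceil(consumed_units * 100 / target_percent)
    return max(minimum, min(maximum, wanted))


# 2100 consumed RCUs at a 70% target calls for 3000 provisioned RCUs.
print(desired_capacity(2100, 70, minimum=400, maximum=3000))  # 3000
```

At a 70% target, traffic can grow by roughly 43% over the level Auto Scaling last reacted to before you run out of provisioned capacity. A lower target buys more margin, and costs more.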

Luckily, we have Burst Capacity and Adaptive Capacity to deal with those infrequent spikes, and retries can help you eventually serve the requests that were initially throttled. You probably can't retry your way into Auto Scaling (imagine waiting 5 minutes for a request…), but retries can give Burst Capacity the few seconds it needs to kick in. Adaptive Capacity adjusts slower, and it's intended to fix uneven traffic across partitions, so don't count on it.

Now, this is all looking a lot like EC2 instances in an Auto Scaling Group, right? And we're seeing the same problems: We need to keep some extra capacity provisioned, as a buffer for traffic spikes. Even then, if a spike is big and fast enough, we can't respond to it! (except for some bursting). Why do we have these problems, if DynamoDB is supposed to be serverless? Well, it is serverless: you don't manage servers, but they're still there. What did you expect, magic? Well, sufficiently advanced science is indistinguishable from magic. Let's see if DynamoDB's other mode is close enough to serverless magic.

DynamoDB On-Demand Mode

Welcome to the real serverless mode of DynamoDB! With On-Demand mode, you don't need to worry about scaling your DynamoDB table, it happens automatically. Wait, you really believed that? Of course you need to worry! But it's much simpler to understand and manage, and it results in fewer throttles.

Capacity in On-Demand Mode

The cost of reads and writes stays the same: a read operation consumes 1 Read Request Unit (RRU) for every 4 KB read (half if it's eventually consistent), and a write operation consumes 1 WRU (Write Request Unit) for every 1 KB written. Twice for transactions, LSIs increase it, yadda yadda. Same as for Provisioned, we just changed Capacity Units for Request Units.

Here's the difference: There is no capacity you can set. You're billed for every actual operation, and DynamoDB manages capacity automatically and transparently. However, it does have a set capacity, it does scale, and understanding how it does is important.

Scaling in On-Demand Mode

Every newly-created table in On-Demand mode starts with 4,000 WCUs and 12,000 RCUs (yeah, that's a lot). You're not billed for those capacity units though, you'll only be billed for actual operations.

Every time your peak usage goes over 50% of the current assigned capacity, DynamoDB increases the capacity of that table to double your peak. So, suppose you used 5,000 WRUs, now your table's WCUs are 10,000. This growth has a cooldown period of 30 minutes, meaning it won't happen again until 30 minutes after the last increase.
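Here's a small simulation of that rule, so you can see the cooldown in action. It only models the behavior as I described it above (double the peak, 30-minute cooldown). It isn't an official formula, and the function name and input format are my own.

```python
COOLDOWN_MINUTES = 30


def simulate_on_demand(peaks, initial_capacity):
    """peaks: list of (minute, peak_units) in time order.

    Returns a list of (minute, capacity_after_that_peak).
    """
    capacity = initial_capacity
    last_increase = None
    history = []
    for minute, peak in peaks:
        cooled_down = (last_increase is None
                       or minute - last_increase >= COOLDOWN_MINUTES)
        if peak > capacity / 2 and cooled_down:
            capacity = max(capacity, 2 * peak)  # grow to double the peak
            last_increase = minute
        history.append((minute, capacity))
    return history


# Writes on a new table (4,000 WCUs): a 5,000 peak doubles to 10,000.
# The 8,000 peak at minute 20 is inside the cooldown, so nothing changes
# until the next qualifying peak at minute 45.
for minute, capacity in simulate_on_demand(
        [(0, 1000), (10, 5000), (20, 8000), (45, 8000)], 4000):
    print(minute, capacity)
```

Notice the window between minutes 20 and 45: traffic is already above half the capacity, but the table can't grow yet. That's where a fast, sustained ramp-up can still get throttled.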

This isn't documented anywhere, and I haven't managed to get official confirmation, but apparently capacity for On-Demand tables doesn't decrease, ever. This seems consistent with how DynamoDB works under the hood: Partitions are split in two and assigned to new nodes, with each node having a certain maximum capacity of 3000 RCUs and 1000 WCUs. Apparently partitions are never re-combined, so there's no reason to think capacity for On-Demand tables would decrease. Again, this isn't published anywhere, just a common assumption.

Switching from Provisioned Mode to On-Demand Mode

You can switch modes in either direction, but you can only do so once every 24 hours. If you switch from Provisioned Mode to On-Demand mode, the table's initial RCUs are the maximum of 12,000, your current RCUs, or double the units of the highest peak. Same for WCUs: the maximum of 4,000, your current WCUs, or double the units of the highest peak.
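In code, that rule is just a `max` over three values. A tiny sketch, with hypothetical names and the floors taken from the numbers above:

```python
ON_DEMAND_READ_FLOOR = 12_000
ON_DEMAND_WRITE_FLOOR = 4_000


def initial_on_demand_capacity(current_provisioned, highest_peak, floor):
    """Starting capacity after switching from Provisioned to On-Demand."""
    return max(floor, current_provisioned, 2 * highest_peak)


# A table provisioned at 3000 RCUs whose highest peak was 8000 reads/second:
print(initial_on_demand_capacity(3000, 8000, ON_DEMAND_READ_FLOOR))  # 16000
```

This is the same mechanism the pre-warming trick relies on: a large provisioned value carries over as the starting point for On-Demand.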

If you switch from On-Demand mode to Provisioned Mode, you need to set up your capacity or auto scaling manually.

In either case the switch takes up to 30 minutes, during which the table continues to function like before the switch.

Provisioned vs On-Demand - Pricing Comparison

In Provisioned Mode, like with anything provisioned, you're billed per provisioned capacity, regardless of how much you actually consume. The price is $0.00065/hour per WCU, and $0.00013/hour per RCU.

In On-Demand Mode you're only billed for Request Units (which is basically Capacity Units that were actually consumed). The price is $1.25 per million WRUs and $0.25 per million RRUs.

Let's consider some scenarios. Assume all reads are strongly consistent and read 4 KB of data, and all writes are outside transactions and for 1 KB of data. Also, there are no secondary indexes. Suppose you have the following traffic pattern:

Between 2000 and 3000 (average 2500) reads per second during 8 hours of the day (business hours).

Between 400 and 600 (average 500) reads per second during 16 hours of the day (off hours).

Between 200 and 300 (average 250) writes per second during 8 hours of the day (business hours).

Between 50 and 150 (average 100) writes per second during 16 hours of the day (off hours).

With Provisioned Mode, no Auto Scaling, we'll need to set 3000 RCUs and 300 WCUs. The price would be $0.39 per hour for reads and $0.195 per hour for writes, for a total of $280.80 + $140.40 = $421.20 per month.

With Provisioned Mode, Auto Scaling set for a minimum 400 and a maximum 3000 RCUs and minimum 50 and maximum 300 WCUs, we'll get the following:

For business hours: We'll use our average 2500 reads, so we get $0.325 per hour for reads, and $78/month for reads for business hours. For writes, using our average of 250, we get $0.1625/hour and $39/month.

For off hours: Using the average values, $0.065/hour and $31.20/month for reads, and $0.065/hour and $31.20/month for writes.

In total, we get $179.40 per month.

With On-Demand Mode, we'll just use the averages. With 2500 reads per second we have 9,000,000 reads per hour on business hours, which costs us $2.25/hour, or $540/month. We have an average 250 writes per second, so 900,000 writes per hour, which costs $1.125/hour or $270/month.

On off hours we have 1,800,000 reads per hour, for $0.45/hour and $216/month. Writes are 360,000/hour, at $0.45/hour and $216/month.

Our grand total is $540 + $270 + $216 + $216 = $1,242/month.

Note: These prices are only for reads and writes. Storage is priced separately, and so are other features like backups.
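If you'd like to plug in your own traffic numbers, here's the arithmetic for the three scenarios above as a short script. It uses the prices quoted earlier in this section and assumes a 30-day month (240 business hours and 480 off hours), which is what the monthly figures are based on. The function and constant names are mine.

```python
PROVISIONED_RCU_HOUR = 0.00013  # $ per RCU per hour
PROVISIONED_WCU_HOUR = 0.00065  # $ per WCU per hour
ON_DEMAND_PER_MILLION_RRU = 0.25
ON_DEMAND_PER_MILLION_WRU = 1.25

BUSINESS_HOURS = 8 * 30  # hours per month, assuming a 30-day month
OFF_HOURS = 16 * 30


def provisioned_cost(rcus, wcus, hours):
    """Cost of keeping rcus and wcus provisioned for the given hours."""
    return (rcus * PROVISIONED_RCU_HOUR + wcus * PROVISIONED_WCU_HOUR) * hours


def on_demand_cost(reads_per_second, writes_per_second, hours):
    """Cost of the requests actually made during the given hours."""
    reads = reads_per_second * 3600 * hours
    writes = writes_per_second * 3600 * hours
    return (reads / 1_000_000 * ON_DEMAND_PER_MILLION_RRU
            + writes / 1_000_000 * ON_DEMAND_PER_MILLION_WRU)


# Scenario 1: fixed capacity sized for the peak, all month long.
fixed = provisioned_cost(3000, 300, BUSINESS_HOURS + OFF_HOURS)

# Scenario 2: Auto Scaling, approximated with the average traffic.
autoscaled = (provisioned_cost(2500, 250, BUSINESS_HOURS)
              + provisioned_cost(500, 100, OFF_HOURS))

# Scenario 3: On-Demand, billed per request.
on_demand = (on_demand_cost(2500, 250, BUSINESS_HOURS)
             + on_demand_cost(500, 100, OFF_HOURS))

for name, cost in [("fixed", fixed), ("autoscaled", autoscaled),
                   ("on-demand", on_demand)]:
    print(f"{name}: ${cost:,.2f}/month")
```

Keep in mind that the Auto Scaling scenario is optimistic: it prices the average consumption, while a real table with a 70% target would have more capacity provisioned than it consumes.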

Best Practices for Scaling DynamoDB

Operational Excellence

Monitor Throttling Metrics: Keep an eye on the ReadThrottleEvents and WriteThrottleEvents metrics in CloudWatch. Compare them with your app's latency metrics, to determine how much this is impacting your app.

Audit Tables Regularly: Review your DynamoDB tables to make sure that they're performing and scaling well. This includes reviewing capacity settings, and reviewing indices and keys.

Security

Enable Point-In-Time Recovery: Data corruption doesn't happen, until it happens. Enabling Point-In-Time Recovery allows you to restore a table to a specific state if needed.

Reliability

Pre-Warm On-Demand Tables: When expecting a big increase in traffic (like a product launch), if you're using an On-Demand table, make sure to pre-warm it. You can do this by switching it to Provisioned Mode, setting its capacity to a large number and keeping it there for a few minutes, and then switching it back to On-Demand so the On-Demand capacity matches the capacity the table had in Provisioned Mode. Remember that you can only switch once every 24 hours.

Performance Efficiency

Use Auto Scaling: This one's quite obvious, I hope. But the point is that there's no reason not to use this. Sure, sometimes it isn't fast enough, but in those cases Provisioned Mode without Auto Scaling won't work well either.

Choose the Right Partition Key: Remember what I said about capacity units being split across partitions? Well, if you pick a PK that doesn't distribute traffic uniformly (or as uniformly as possible), you're going to have a problem called a hot partition. This is part of DynamoDB Database Design, but as you saw, it affects performance and scaling.

Cost Optimization

Pick The Right Mode: You saw the numbers in the example. On-Demand will very rarely result in throttling, but it is expensive. Only use it for traffic that spikes in less than the 5 minutes that Provisioned Mode with Auto Scaling takes to scale.

Monitor and Adjust Provisioned Capacity: Regularly review your capacity settings and adjust them. Traffic patterns change over time!

Use Reserved Capacity: If you have a consistent and predictable workload (like in the pricing example), consider purchasing reserved capacity for DynamoDB. It works similarly to Reserved Instances: You reserve capacity and commit to 1 or 3 years, in exchange for a lower price.

At re:Invent 2018 there was a talk titled Amazon DynamoDB Under the Hood: How We Built a Hyper-Scale Database. It was 5 years ago, but a lot of the things said there are still true. It's less than 1 hour long, and really interesting.

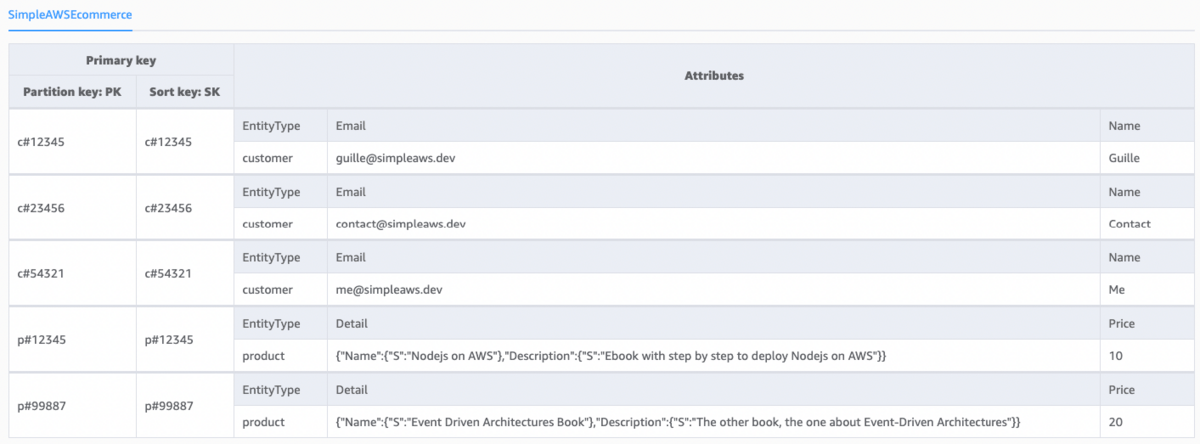

I still think that DynamoDB Database Design is one of the best articles I've written for Simple AWS. I even presented a talk based on it at a meetup of the AWS User Group Córdoba. Here's the link:

AWS created some pretty interesting workshops for DynamoDB: