You have this cool app you wrote in Node.js. You're a great developer, but you started out with 0 knowledge of AWS. At first you just launched an EC2 instance, SSH'd there and deployed the app. Then you subscribed to Simple AWS and you read the article about a self-healing, single-instance environment, so you applied that. It's better, but it doesn't scale. You found my old post about ECS, you understood the basic concepts, but you don't know how to go from your app on EC2 to your app on an ECS cluster.

We're going to use the following AWS services:

ECS: A container orchestration service that helps manage Docker containers on a cluster.

Elastic Container Registry (ECR): A managed container registry for storing, managing, and deploying Docker images.

Fargate: A serverless compute engine for containers that eliminates the need to manage the underlying EC2 instances.

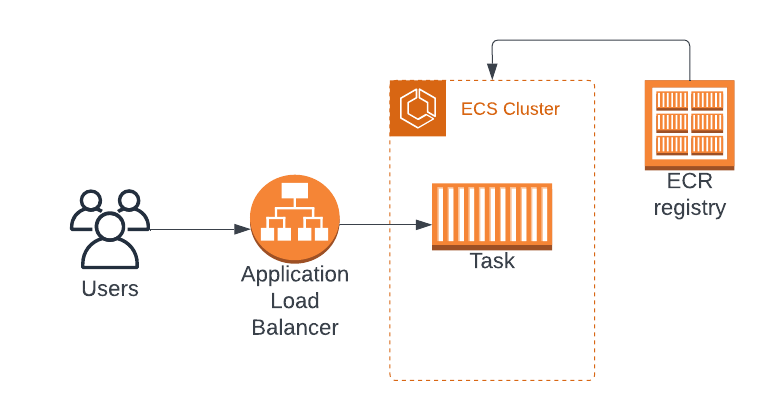

ECS example with one task

Solution step by step

Install Docker on your local machine.

Follow the instructions from the official Docker website.

Create a Dockerfile.

In your app's root directory, create a file named "Dockerfile" (no file extension). Use the following as a starting point, adjust as needed.

    # Use the official Node.js image as the base image
    FROM node:latest

    # Set the working directory for the app
    WORKDIR /app

    # Copy package.json and package-lock.json into the container
    COPY package*.json ./

    # Install the app's dependencies
    RUN npm ci

    # Copy the app's source code into the container
    COPY . .

    # Expose the port your app listens on
    EXPOSE 3000

    # Start the app
    CMD ["npm", "start"]

Build the Docker image and test it locally.

While in your app's root directory, build the Docker image. Once the build is complete, start a local container using the new image. Test the app in your browser or with curl or Postman to ensure it's working correctly. If it's not, go back and fix the Dockerfile.

    docker build -t cool-nodejs-app .
    docker run -p 3000:3000 cool-nodejs-app

Create the ECR registry.

First, create a new ECR repository using the AWS Management Console or the AWS CLI.

    aws ecr create-repository --repository-name cool-nodejs-app

Push the Docker image to ECR.

Before pushing, authenticate Docker with your ECR registry (in the ECR console, the repository's "View push commands" button shows the exact commands for your account). Then tag and push the image to ECR, replacing {AWSAccountId} and {AWSRegion} with the appropriate values:

    aws ecr get-login-password --region {AWSRegion} | docker login --username AWS --password-stdin {AWSAccountId}.dkr.ecr.{AWSRegion}.amazonaws.com
    docker tag cool-nodejs-app:latest {AWSAccountId}.dkr.ecr.{AWSRegion}.amazonaws.com/cool-nodejs-app:latest
    docker push {AWSAccountId}.dkr.ecr.{AWSRegion}.amazonaws.com/cool-nodejs-app:latest

Create an ECS Task Definition.

We'll do this with CloudFormation. Create a file named "ecs-task-definition.yaml" with the following contents. Then, in the AWS Console, create a new CloudFormation stack using the "ecs-task-definition.yaml" file as the template. The template creates an IAM role, so the Console will ask you to acknowledge that before creating the stack.

    Resources:
      # Log group that the container's awslogs configuration writes to
      CloudWatchLogsGroup:
        Type: AWS::Logs::LogGroup

      ECSTaskRole:
        Type: AWS::IAM::Role
        Properties:
          AssumeRolePolicyDocument:
            Version: '2012-10-17'
            Statement:
              - Effect: Allow
                Principal:
                  Service:
                    - ecs-tasks.amazonaws.com
                Action:
                  - sts:AssumeRole
          Policies:
            - PolicyName: ECRReadOnlyAccess
              PolicyDocument:
                Version: '2012-10-17'
                Statement:
                  - Effect: Allow
                    Action:
                      - ecr:GetAuthorizationToken
                    Resource: "*"
                  - Effect: Allow
                    Action:
                      - ecr:BatchCheckLayerAvailability
                      - ecr:GetDownloadUrlForLayer
                      - ecr:GetRepositoryPolicy
                      - ecr:DescribeRepositories
                      - ecr:ListImages
                      - ecr:DescribeImages
                      - ecr:BatchGetImage
                    Resource: !Sub "arn:aws:ecr:${AWS::Region}:${AWS::AccountId}:repository/cool-nodejs-app"
                  # Lets the awslogs driver write the container's logs
                  - Effect: Allow
                    Action:
                      - logs:CreateLogStream
                      - logs:PutLogEvents
                    Resource: !GetAtt CloudWatchLogsGroup.Arn

      CoolNodejsAppTaskDefinition:
        Type: AWS::ECS::TaskDefinition
        Properties:
          Family: cool-nodejs-app
          # The same role is used as task role and execution role to keep the example short
          TaskRoleArn: !GetAtt ECSTaskRole.Arn
          ExecutionRoleArn: !GetAtt ECSTaskRole.Arn
          RequiresCompatibilities:
            - FARGATE
          NetworkMode: awsvpc
          Cpu: '256'
          Memory: '512'
          ContainerDefinitions:
            - Name: cool-nodejs-app
              # The image is referenced by its registry URI, not by the repository ARN
              Image: !Sub "${AWS::AccountId}.dkr.ecr.${AWS::Region}.amazonaws.com/cool-nodejs-app:latest"
              PortMappings:
                - ContainerPort: 3000
              LogConfiguration:
                LogDriver: awslogs
                Options:
                  awslogs-group: !Ref CloudWatchLogsGroup
                  awslogs-region: !Ref AWS::Region
                  awslogs-stream-prefix: ecs

Create the ECS Cluster and Service.

You'll need an existing VPC for this (you can use the default one). Update your CloudFormation template ("ecs-task-definition.yaml") to include the ECS Cluster, the Service, and the necessary resources for networking and load balancing. Replace {VPCID} with the ID of your VPC, and {SubnetIDs} with your subnet IDs (the Application Load Balancer needs at least two subnets in different Availability Zones). Then, on the Console, go to CloudFormation and update the existing stack with the modified template.

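    Resources:
      # ... (Existing Task Definition and related resources)

      CoolNodejsAppService:
        Type: AWS::ECS::Service
        # The target group must be attached to the load balancer (through the listener) first
        DependsOn: AppListener
        Properties:
          ServiceName: cool-nodejs-app-service
          Cluster: !Ref CoolNodejsAppCluster
          TaskDefinition: !Ref CoolNodejsAppTaskDefinition
          DesiredCount: 2
          LaunchType: FARGATE
          NetworkConfiguration:
            AwsvpcConfiguration:
              AssignPublicIp: ENABLED
              Subnets:
                - {SubnetIDs}
              SecurityGroups:
                - !GetAtt AppTaskSecurityGroup.GroupId
          LoadBalancers:
            - TargetGroupArn: !Ref AppTargetGroup
              ContainerName: cool-nodejs-app
              ContainerPort: 3000

      CoolNodejsAppCluster:
        Type: AWS::ECS::Cluster
        Properties:
          ClusterName: cool-nodejs-app-cluster

      AppLoadBalancer:
        Type: AWS::ElasticLoadBalancingV2::LoadBalancer
        Properties:
          Name: app-load-balancer
          Scheme: internet-facing
          Type: application
          IpAddressType: ipv4
          LoadBalancerAttributes:
            - Key: idle_timeout.timeout_seconds
              Value: '60'
          Subnets:
            - {SubnetIDs}
          SecurityGroups:
            - !Ref AppLoadBalancerSecurityGroup

      # Forwards HTTP traffic on port 80 to the tasks in the target group
      AppListener:
        Type: AWS::ElasticLoadBalancingV2::Listener
        Properties:
          LoadBalancerArn: !Ref AppLoadBalancer
          Port: 80
          Protocol: HTTP
          DefaultActions:
            - Type: forward
              TargetGroupArn: !Ref AppTargetGroup

      AppLoadBalancerSecurityGroup:
        Type: AWS::EC2::SecurityGroup
        Properties:
          GroupName: app-load-balancer-security-group
          VpcId: {VPCID}
          GroupDescription: Security group for the app load balancer
          SecurityGroupIngress:
            - IpProtocol: tcp
              FromPort: 80
              ToPort: 80
              CidrIp: 0.0.0.0/0

      # Lets only the load balancer reach the tasks on the app's port
      AppTaskSecurityGroup:
        Type: AWS::EC2::SecurityGroup
        Properties:
          VpcId: {VPCID}
          GroupDescription: Security group for the app tasks
          SecurityGroupIngress:
            - IpProtocol: tcp
              FromPort: 3000
              ToPort: 3000
              SourceSecurityGroupId: !GetAtt AppLoadBalancerSecurityGroup.GroupId

      AppTargetGroup:
        Type: AWS::ElasticLoadBalancingV2::TargetGroup
        Properties:
          Name: app-target-group
          Port: 3000
          Protocol: HTTP
          TargetType: ip
          VpcId: {VPCID}
          HealthCheckEnabled: true
          HealthCheckIntervalSeconds: 30
          HealthCheckPath: /healthcheck
          HealthCheckTimeoutSeconds: 5
          HealthyThresholdCount: 2
          UnhealthyThresholdCount: 2

Set up auto-scaling for the ECS Service.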

Add the following resources to the CloudFormation template to set up auto-scaling policies based on CPU utilization, and update the CloudFormation stack with the modified template.

    Resources:
      # ... (Existing resources)

      AppScalingTarget:
        Type: AWS::ApplicationAutoScaling::ScalableTarget
        Properties:
          MaxCapacity: 10
          MinCapacity: 2
          # !Ref on the service returns its ARN, so the service name is read with .Name
          ResourceId: !Sub "service/${CoolNodejsAppCluster}/${CoolNodejsAppService.Name}"
          RoleARN: !Sub "arn:aws:iam::${AWS::AccountId}:role/aws-service-role/ecs.application-autoscaling.amazonaws.com/AWSServiceRoleForApplicationAutoScaling_ECSService"
          ScalableDimension: ecs:service:DesiredCount
          ServiceNamespace: ecs

      AppScalingPolicy:
        Type: AWS::ApplicationAutoScaling::ScalingPolicy
        Properties:
          PolicyName: app-cpu-scaling-policy
          PolicyType: TargetTrackingScaling
          ScalingTargetId: !Ref AppScalingTarget
          TargetTrackingScalingPolicyConfiguration:
            PredefinedMetricSpecification:
              PredefinedMetricType: ECSServiceAverageCPUUtilization
            TargetValue: 50
            ScaleInCooldown: 300
            ScaleOutCooldown: 300

Test the new app.

After the CloudFormation stack update is complete, go to the ECS console, click on the cool-nodejs-app-cluster cluster, and you'll find the cool-nodejs-app-service service. The service will launch tasks based on your task definition and desired count, so you should see 2 tasks. To test the app, go to the EC2 console, look for the Load Balancers option on the left, click on the load balancer named app-load-balancer and find the DNS name. Paste the name in your browser or use curl or Postman. If we got it right, you should see the same output as when running the app locally. Congrats! If the tasks keep getting replaced instead, check that your app answers with a 200 on /healthcheck, the path the target group's health check calls.
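If you'd rather not dig through the EC2 console, one option is to have CloudFormation print the load balancer's DNS name for you. This is a minimal sketch, assuming the load balancer keeps the logical name AppLoadBalancer from the template above; the output name LoadBalancerDNSName is made up for illustration.

```yaml
# Add this top-level section next to Resources in ecs-task-definition.yaml
Outputs:
  LoadBalancerDNSName:   # hypothetical output name, pick whatever you like
    Description: Public DNS name of the Application Load Balancer
    Value: !GetAtt AppLoadBalancer.DNSName
```

After the stack update finishes, the value should show up in the stack's Outputs tab.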

Solution explanation

Install Docker: ECS runs containers. Docker is the tool we use to containerize our app. Containers package an app and its dependencies together, ensuring consistent environments across different stages and platforms. We needed this either way for ECS, but we get the extra benefit of being able to run it locally or on ECS without any extra effort.

Create a Dockerfile: A Dockerfile is a script that tells Docker how to build a Docker image. We specified the base image, copied our app's files, installed dependencies, exposed the app's port, and defined the command to start the app. Writing this when starting development is pretty easy, but doing it for an existing app is harder.

Build the Docker image and test it locally: The degree to which you know how your software behaves is the degree to which you've tested it. So, we wrote the Dockerfile, built the image, and tested it!

Create the ECR registry: Amazon Elastic Container Registry (ECR) is a managed container registry that stores Docker container images. It's an artifacts repo, where our artifacts are Docker images. ECR integrates seamlessly with ECS, allowing us to pull images directly from there without much hassle.

Push the Docker image to ECR: We're building our Docker image and pushing it to the registry so we can pull it from there.

Create an ECS Task Definition: A Task Definition describes what Docker image the tasks should use, how much CPU and memory a task should have, and other configs. They're basically task blueprints. We built ours using CloudFormation, because it makes it more maintainable (and because it's easier to give you a template than to show you Console screenshots). By the way, we also included an IAM role with permissions to pull images from the ECR registry.

Create the ECS cluster and service: The cluster contains all services and tasks. A Task is an instance of our app, basically a Docker container plus some ECS wrapping and configs. Instead of launching tasks manually, we'll create a Service, which is a grouping of identical tasks. A Service exposes a single endpoint for the app (using an Application Load Balancer in this case), and controls the tasks that are behind that endpoint (including launching the necessary tasks).

Set up auto-scaling for the ECS Service: Before this step our ECS Service has 2 tasks, and that's it, no auto scaling of any kind. Here's where we set the policies that tell the ECS Service when to create more tasks or destroy existing tasks. We'll do this based on CPU Utilization, which is the best parameter in most cases (and the easiest to set up).

Test the new app: Alright, now we've got everything deployed. Time to test it! Find the DNS name of the Load Balancer that corresponds to our Service, paste that in your browser or curl or Postman, and take it for a test drive. If it works, give yourself a pat on the back! If it doesn't, shoot me an email.

Discussion

We did it! Took a single EC2 instance that wouldn't scale, and made it scalable, reliable (if a task fails, ECS launches a new one) and highly available (if you chose at least 2 subnets in different AZs). And it wasn't that hard.

Real life could be harder though. This solution works under the assumption that the app stores no state in the same instance. Any session data (or any data that needs to be shared across instances, no matter how temporary or persistent it is) should be stored in a separate storage, such as a database. DynamoDB is great for session data, even if you use RDS/Aurora for the rest of the data.
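To make the session data idea a bit more concrete, here's a rough sketch of a DynamoDB table for sessions, plus the permissions the tasks would need. Everything here is an assumption for illustration: the logical names SessionsTable and SessionsTableAccess, the sessionId key, and the expiresAt attribute (which DynamoDB's TTL feature expects to hold a Unix timestamp in seconds). It also assumes the tasks use the ECSTaskRole from the template in this article.

```yaml
Resources:
  # ... (Existing resources)

  SessionsTable:                      # hypothetical table for session data
    Type: AWS::DynamoDB::Table
    Properties:
      BillingMode: PAY_PER_REQUEST    # no capacity planning needed
      AttributeDefinitions:
        - AttributeName: sessionId
          AttributeType: S
      KeySchema:
        - AttributeName: sessionId
          KeyType: HASH
      TimeToLiveSpecification:
        AttributeName: expiresAt      # expired sessions get deleted automatically
        Enabled: true

  # Grants the tasks access to the table and nothing else
  SessionsTableAccess:
    Type: AWS::IAM::Policy
    Properties:
      PolicyName: SessionsTableAccess
      Roles:
        - !Ref ECSTaskRole
      PolicyDocument:
        Version: '2012-10-17'
        Statement:
          - Effect: Allow
            Action:
              - dynamodb:GetItem
              - dynamodb:PutItem
              - dynamodb:UpdateItem
              - dynamodb:DeleteItem
            Resource: !GetAtt SessionsTable.Arn
```

The app itself would still need a session store that reads and writes this table; that part depends on your framework.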

Most of the people I've seen using a single EC2 instance and a relational database have the database running in the same instance. You should move it to RDS/Aurora. This is a separate step from moving the app to ECS; we can do an article on it in the future.

Local environments are easy, until you have 3 devs using an old Mac, 2 using an M1 or M2 Mac, 2 on Windows and a lone guy running an obscure Linux distro (it's the same guy who argues vi is better than VS Code). Docker fixes that.

Yes, I used Fargate. Did I cheat? Maybe… I figured you already knew about Auto Scaling Groups, from the self-healing instance article. I went with Fargate so we could focus on ECS, but if you'd like to see this on an EC2 Auto Scaling Group, hit Reply and let me know!

Why ECS and not a plain EC2 Auto Scaling Group? For one service, it's pretty much the same effort. For multiple services, ECS abstracts away a LOT of complexities.

Why ECS and not Kubernetes? We covered Kubernetes already, though not this particular process. And I discussed a bit why I prefer ECS (tl;dr: it's simpler). Can I write an article on how to kubernetize (I made up that term) an app? Sure! Do you want me to?

Best Practices

Operational Excellence

Use a CI/CD pipeline: I ran all of this manually, but you should add a pipeline. After you've created the infrastructure, all the pipeline needs to do is build the Docker image with docker build, tag it with docker tag, push it with docker push, and tell ECS to deploy the new image (for example, by forcing a new deployment of the service).

Use an IAM Role for the pipeline: Of course you don't want to let just anyone write to your ECR registry. The CI/CD pipeline will need to authenticate. You can either do this with long-lived credentials (not great but it works), or by letting the pipeline assume an IAM Role. The details depend on the tool you use, but try to do it.

Health Checks: Configure health checks for your ECS service and Application Load Balancer to ensure that only healthy tasks are receiving traffic.

Use Infrastructure as Code: With this article you're already halfway there! You've got your ECS cluster, task definition and service done in CloudFormation!

Implement Log Aggregation: Set up log aggregation for your ECS tasks using CloudWatch Logs, Elasticsearch or whatever tool you prefer. All tasks are the same, logs across tasks should be aggregated.

Use blue/green deployments: Blue/green is a strategy that consists of deploying the new version in parallel with the old one, testing it, routing traffic to it, monitoring it, and when you're sure it's working as intended, only then shut down the old version.
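As a sketch of what the CI/CD pipeline item above could look like, here's a buildspec for AWS CodeBuild. CodeBuild is my assumption here (the steps are the same in any tool), and so is the AWS_ACCOUNT_ID variable, which you'd have to define on the CodeBuild project yourself. The cluster and service names are the ones from the template in this article. CodeBuild runs with an IAM role, so this also covers the "no long-lived credentials" part, as long as that role is allowed to push to ECR and update the ECS service.

```yaml
# buildspec.yml: a sketch for AWS CodeBuild (an assumed choice of tool)
version: 0.2

phases:
  pre_build:
    commands:
      # AWS_ACCOUNT_ID is assumed to be set on the CodeBuild project;
      # AWS_DEFAULT_REGION is provided by CodeBuild.
      - REGISTRY=$AWS_ACCOUNT_ID.dkr.ecr.$AWS_DEFAULT_REGION.amazonaws.com
      - aws ecr get-login-password --region $AWS_DEFAULT_REGION | docker login --username AWS --password-stdin $REGISTRY
  build:
    commands:
      - docker build -t cool-nodejs-app .
      - docker tag cool-nodejs-app:latest $REGISTRY/cool-nodejs-app:latest
  post_build:
    commands:
      - docker push $REGISTRY/cool-nodejs-app:latest
      # Roll the service so running tasks pick up the new image
      - aws ecs update-service --cluster cool-nodejs-app-cluster --service cool-nodejs-app-service --force-new-deployment
```

The CodeBuild project needs privileged mode enabled to run Docker builds.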

Security

Store secrets in Secrets Manager: Database credentials, API keys, other sensitive data? Secrets Manager.

Task IAM Role: Assign an IAM role to each ECS task (do it at the Task Definition), so it has permissions to interact with other AWS services. We actually did this in our solution: the same role doubles as the execution role, which is what lets ECS pull the image from ECR.

Enable Network Isolation: I told you to use the default VPC for now. For a real use case you should use a dedicated VPC (they're free!), and put tasks in private subnets.

Use Security Groups and NACLs: In a previous article about AWS Lambda functions with access to a VPC I mentioned defense in depth a lot. Basically, protect yourself at multiple levels, including network.

Regularly Rotate Secrets: Secrets Manager reduces the chances of a secret being compromised. But what if it is, and you don't find out? Rotate passwords and all secrets regularly.
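Here's a rough sketch of how the Secrets Manager item above could plug into the task definition, so the secret reaches the app as an environment variable without ever being written in the template. The names DatabaseSecret, DatabaseSecretAccess and DB_PASSWORD are made up for illustration, and the sketch assumes the execution role is the ECSTaskRole from the template in this article.

```yaml
Resources:
  # ... (Existing resources)

  # Hypothetical secret; in practice you might reference one that already exists
  DatabaseSecret:
    Type: AWS::SecretsManager::Secret
    Properties:
      GenerateSecretString:
        PasswordLength: 32
        ExcludePunctuation: true

  # Lets the execution role read the secret when ECS starts the container
  DatabaseSecretAccess:
    Type: AWS::IAM::Policy
    Properties:
      PolicyName: DatabaseSecretAccess
      Roles:
        - !Ref ECSTaskRole
      PolicyDocument:
        Version: '2012-10-17'
        Statement:
          - Effect: Allow
            Action:
              - secretsmanager:GetSecretValue
            Resource: !Ref DatabaseSecret

  CoolNodejsAppTaskDefinition:
    Type: AWS::ECS::TaskDefinition
    Properties:
      # ... (Existing properties)
      ContainerDefinitions:
        - Name: cool-nodejs-app
          # ... (Existing container settings)
          Secrets:
            - Name: DB_PASSWORD              # environment variable the app reads
              ValueFrom: !Ref DatabaseSecret # resolves to the secret's ARN
```

In the Node.js app the value would then be available as process.env.DB_PASSWORD.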

Reliability

Multi-AZ Deployment: Pick multiple subnets in different AZs and ECS will deploy your tasks in a highly-available manner. Remember that for AWS “highly available” means it can quickly and automatically recover from the failure of one AZ.

Use Connection Draining: At some point you'll want to kill a task, but it'll probably be serving requests. Connection draining (called deregistration delay on an Application Load Balancer) tells the LB to stop sending new requests to that task, and to wait a few seconds before it's killed (configurable, use 300, which is also the default). That way, the task can finish processing those requests and the users aren't impacted.

Set Up Automatic Task Retries: Configure automatic retries for tasks that fail due to transient errors. This way your tasks can recover from temporary errors automatically.
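The connection draining item above maps to a single target group attribute. A minimal sketch, assuming the target group keeps the logical name AppTargetGroup from the template in this article:

```yaml
Resources:
  # ... (Existing resources)

  AppTargetGroup:
    Type: AWS::ElasticLoadBalancingV2::TargetGroup
    Properties:
      # ... (Existing properties)
      TargetGroupAttributes:
        # How long the load balancer waits before it finishes deregistering a task
        - Key: deregistration_delay.timeout_seconds
          Value: '300'
```

If your requests are short, a lower value makes deployments and scale-in faster, so it's worth tuning once you know your traffic.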

Performance Efficiency

Optimize Task Sizing: Adjust CPU and memory allocations to match your app requirements. Fixing bad performance by throwing money at it sounds bad, but some processes and languages are inherently resource-intensive.

Use Container Insights: Enable Container Insights in CloudWatch to monitor, troubleshoot, and optimize ECS tasks. This gives you valuable insights into the performance of your containers and helps you identify potential bottlenecks or areas for optimization.

Use a queue for writes: Before, neither your app nor your database scaled. Now, your app scales really well, but your database still doesn't scale. A sudden surge of users no longer brings down your app layer, but the consequent surge of write requests can bring down the database. To protect from this, add all writes to a queue and have another service consume from the queue at a max rate.

Use Caching: If you're accessing the same data many times, you can probably cache it. This will also protect your database from bursts of reads.

Optimize Auto-Scaling Policies: Review and adjust scaling policies regularly, so your services aren't scaling out too late and scaling in too early.
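Container Insights, mentioned above, can be turned on from the same CloudFormation template. A minimal sketch, assuming the cluster keeps the logical name CoolNodejsAppCluster from the template in this article:

```yaml
Resources:
  # ... (Existing resources)

  CoolNodejsAppCluster:
    Type: AWS::ECS::Cluster
    Properties:
      ClusterName: cool-nodejs-app-cluster
      ClusterSettings:
        # Sends task- and service-level metrics to CloudWatch
        - Name: containerInsights
          Value: enabled
```

Keep in mind that the extra metrics are billed by CloudWatch, so check the cost for your number of tasks.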

Cost Optimization

Configure a Savings Plan: Get a Savings Plan for Fargate.

Optimize Auto-Scaling Policies: Review and adjust scaling policies regularly, so your services aren't scaling out too early and scaling in too late. Yeah, this is the reverse of the one for Performance Efficiency. Bottom line is you need to find the sweet spot.

Right-size ECS Tasks: You threw money at the performance problems, and it works better now. Optimize the code when you can, and right-size again (lower resources this time) to get that money back. As with auto scaling, find the sweet spot.

Clean your ECR registries regularly: ECR charges you per GB stored. We usually push and push and push images, and never delete them. You won't need images from 3 months ago (and if you do you can always rebuild them!).

Use EC2 Auto Scaling Groups: Like I mentioned in the discussion section, I went with Fargate so we could focus on ECS without getting distracted by auto scaling the instances. Fargate is pretty cheap, easy to use, 0 maintenance, and scales fast. An auto-scaling group of EC2 instances is a bit harder to use (not that much), requires maintenance efforts, doesn't scale as fast, but it is significantly cheaper. Pick the right one. The good news is that it's not hard to migrate, so I'd recommend Fargate for all new apps.
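For the ECR cleanup item above, a lifecycle policy can delete old images for you. The sketch below assumes you manage the repository in CloudFormation; in this article we created it with the CLI, so adding this resource as-is would fail because the name is already taken (you'd have to import the existing repository or start from a new one). The logical name CoolNodejsAppRepository and the 90-day window are just illustrative choices.

```yaml
Resources:
  CoolNodejsAppRepository:            # hypothetical logical name
    Type: AWS::ECR::Repository
    Properties:
      RepositoryName: cool-nodejs-app
      LifecyclePolicy:
        LifecyclePolicyText: |
          {
            "rules": [
              {
                "rulePriority": 1,
                "description": "Expire images pushed more than 90 days ago",
                "selection": {
                  "tagStatus": "any",
                  "countType": "sinceImagePushed",
                  "countUnit": "days",
                  "countNumber": 90
                },
                "action": { "type": "expire" }
              }
            ]
          }
```

One caveat: this rule also expires the image tagged latest if nothing has been pushed for 90 days, so for an app that rarely changes a count-based rule (keep the last N images) may be the safer choice.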