For this article, we'll continue working with the online learning platform that we've been building in our previous posts on microservices design and microservices security. You don't need to go back and read those articles; I'll give you all the context you need.

We have three microservices: Course Catalog, Content Delivery, and Progress Tracking. The Content Delivery service is responsible for providing access to course materials such as videos, quizzes, and assignments. These files are stored in Amazon S3, but they are currently publicly accessible. We need to secure access to these files so that only authenticated users of our app can access them.

We also have an S3 bucket called simple-aws-courses-content where we store the videos of the course. The bucket and all objects are public right now (this is what we're going to fix in this article).

We're also exposing our microservices via an API Gateway. Authentication is handled by Amazon Cognito, which stores the users, gives us sign up and sign in functionality, and works as an authorizer for API Gateway. We're going to use it as an authorizer for our content as well.

With our current architecture, when the user clicks View Content, our frontend sends a request to the Content Delivery endpoint in API Gateway with the authentication data, API Gateway calls the Cognito authorizer, Cognito approves that request, API Gateway forwards the request to the Content Delivery microservice, and the Content Delivery microservice reads the S3 URL of the requested video from the DynamoDB table and returns that URL.

The problem that we have is that the S3 bucket is public, so while a user needs to be logged in to get that URL, once they have the URL they can share it with anyone, and those other people can access our paid content without paying. What we want is for Cognito to also authenticate and authorize the request that goes to Amazon S3.

Securing Access to S3 with Amazon Cognito

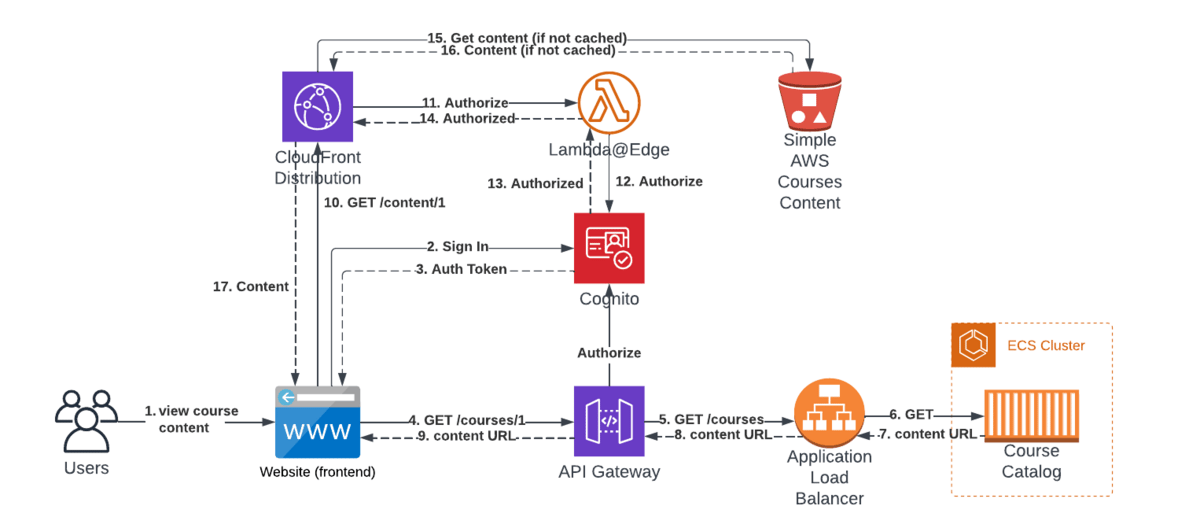

This is what our architecture will look like after we've fixed the problem.

Complete flow for getting the content

We'll be adding a CloudFront Distribution, which will execute a Lambda@Edge function on every request. The caching aspect of CloudFront is also desirable in this particular case, but remember that you can disable it if you prefer not to cache your content.

Step 1: Update the IAM permissions for the Content Delivery microservice

In our current solution the Content Delivery microservice only had access to what it needed, and not more. Now it needs more access, so first of all we're adding permissions so it can access the (not-created-yet) CloudFront distribution to generate the pre-signed URLs.

Go to the IAM console.

Find the IAM role associated with the Content Delivery microservice (you might need to head over to ECS if you don't remember the name).

Click "Attach policies" and then "Create policy".

Add the content below, which grants permission to list and read the CloudFront public keys.

Name the policy something like "SimpleAWSCoursesContentAccess", add a description, and click "Create policy".

Attach the new policy to the IAM role.

Here's the content of the policy:

    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Effect": "Allow",
          "Action": [
            "cloudfront:ListPublicKeys",
            "cloudfront:GetPublicKey"
          ],
          "Resource": "*"
        }
      ]
    }

If you're not sure what this policy does, or how IAM permissions work, read my IAM Permissions explanation.

Step 2: Set up a CloudFront distribution

Amazon CloudFront is a CDN, which means it caches content near the user.

Without CloudFront: User -----------public internet-----------> S3 bucket.

With CloudFront: User ---public internet---> CloudFront edge location.

See? It's a shorter path! That's because CloudFront has multiple edge locations all over the world, and the request goes to the one nearest the user. That reduces latency for the user.

As I mentioned, we don't exactly need the caching behavior of CloudFront. What we're really interested in is its ability to run Lambda functions on every request, which we'll see in a bit. For now, let's configure our CloudFront Distribution.

Open the CloudFront console.

Choose Create distribution.

Under Origin, for Origin domain, choose the S3 bucket simple-aws-courses-content.

Use all the default values.

At the bottom of the page, choose Create distribution.

After CloudFront creates the distribution, the value of the Status column for the distribution changes from In Progress to Deployed. This typically takes a few minutes.

Write down the domain name that CloudFront assigns to your distribution. It looks something like d111111abcdef8.cloudfront.net.

Step 3: Make the S3 bucket private

With the changes we're making, users won't need to access the S3 bucket directly. We want everything to go through CloudFront, so we'll remove public access to the bucket.

Go to the S3 console.

Find the "simple-aws-courses-content" bucket and click on it.

Click on the "Permissions" tab and then on "Block public access".

Turn on the "Block all public access" setting and click "Save".

Remove any existing bucket policies that grant public access.

Add this bucket policy to only allow access from the CloudFront distribution (replace ACCOUNT_ID with your Account ID and DISTRIBUTION_ID with your CloudFront distribution ID):

    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Effect": "Allow",
          "Principal": {
            "Service": "cloudfront.amazonaws.com"
          },
          "Action": "s3:GetObject",
          "Resource": "arn:aws:s3:::simple-aws-courses-content/*",
          "Condition": {
            "StringEquals": {
              "aws:SourceArn": "arn:aws:cloudfront::ACCOUNT_ID:distribution/DISTRIBUTION_ID"
            }
          }
        }
      ]
    }

You might have noticed that we didn't just remove all access to the bucket. Instead, we allowed access only from the CloudFront distribution. This, combined with the next step, will allow our CloudFront distribution to access the bucket.
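If you'd rather generate that bucket policy than hand-edit the placeholders, here's a minimal sketch. The function name buildBucketPolicy is mine, and the account ID and distribution ID below are made-up example values, not real ones.

```javascript
// Build an S3 bucket policy that lets a single CloudFront distribution read objects.
// buildBucketPolicy is a hypothetical helper; the IDs passed below are placeholders.
function buildBucketPolicy(bucketName, accountId, distributionId) {
  return {
    Version: '2012-10-17',
    Statement: [
      {
        Effect: 'Allow',
        Principal: { Service: 'cloudfront.amazonaws.com' },
        Action: 's3:GetObject',
        Resource: `arn:aws:s3:::${bucketName}/*`,
        Condition: {
          StringEquals: {
            // Only requests signed on behalf of this distribution are allowed
            'aws:SourceArn': `arn:aws:cloudfront::${accountId}:distribution/${distributionId}`,
          },
        },
      },
    ],
  };
}

const policy = buildBucketPolicy('simple-aws-courses-content', '123456789012', 'EDFDVBD6EXAMPLE');
console.log(JSON.stringify(policy, null, 2));
```

The printed JSON can be pasted into the bucket policy editor as-is, once you swap in your own IDs.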

Step 4: Create the CloudFront Origin Access Control

In the previous step we restricted access to the S3 bucket. Here we're giving our CloudFront distribution a sort of “identity” that it can use to access the S3 bucket. This way, the S3 service can identify the CloudFront distribution that's trying to access the bucket, and allow the operation.

Go to the CloudFront console.

In the navigation pane, choose Origin access.

Choose Create control setting.

On the Create control setting form, do the following:

In the Details pane, enter a Name and a Description for the origin access control.

In the Settings pane, leave the default setting Sign requests (recommended).

Choose S3 from the Origin type dropdown.

Click Create.

After the OAC is created, write down the Name. You'll need this for the following step.

Go back to the CloudFront console.

Choose the distribution that you created earlier, then choose the Origins tab.

Select the S3 origin and click Edit.

From the Origin access control dropdown menu, choose the OAC that you just created.

Click Save changes.

Step 5: Create a Lambda@Edge function for authorization

If you read the Securing Microservices article, or if you've worked with API Gateway and Cognito, you'll be thinking that we can solve the Cognito authorization with a Cognito Authorizer. That would be ideal! And if you're using Cognito with API Gateway and don't know what a Cognito Authorizer is, I highly suggest you read the securing microservices article.

Unfortunately, when using CloudFront we don't have such a simple solution. Instead, we're going to write a Lambda function that checks with Cognito whether the auth headers included in the request belong to an authenticated and authorized user.
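To make "checking the token" less of a black box, here's a rough, self-contained sketch of what verifying an RS256-signed JWT involves, using only Node's built-in crypto module. It's an illustration under assumptions, not the function we deploy: the key pair is generated locally to stand in for Cognito's signing keys, and signToken, verifyToken and the demo-key ID are names I made up for this example.

```javascript
const crypto = require('crypto');

// Encode a JSON-serializable value as base64url, the encoding JWTs use
const b64url = (value) => Buffer.from(JSON.stringify(value)).toString('base64url');

// Sign a JWT with RS256 (stands in for what the identity provider does at sign-in)
function signToken(payload, privateKey, kid) {
  const signingInput = `${b64url({ alg: 'RS256', typ: 'JWT', kid })}.${b64url(payload)}`;
  const signature = crypto.sign('RSA-SHA256', Buffer.from(signingInput), privateKey);
  return `${signingInput}.${signature.toString('base64url')}`;
}

// Verify the signature and expiry; return the claims, or null if the token is invalid
function verifyToken(token, publicKeysByKid) {
  const parts = (token || '').split('.');
  if (parts.length !== 3) return null;
  try {
    const header = JSON.parse(Buffer.from(parts[0], 'base64url').toString('utf8'));
    const publicKey = publicKeysByKid[header.kid];
    if (header.alg !== 'RS256' || !publicKey) return null;
    const signedData = Buffer.from(`${parts[0]}.${parts[1]}`);
    const signature = Buffer.from(parts[2], 'base64url');
    if (!crypto.verify('RSA-SHA256', signedData, publicKey, signature)) return null;
    const claims = JSON.parse(Buffer.from(parts[1], 'base64url').toString('utf8'));
    // exp is expressed in seconds since the epoch
    if (typeof claims.exp === 'number' && claims.exp * 1000 < Date.now()) return null;
    return claims;
  } catch (error) {
    return null;
  }
}

// Demo: a locally generated key pair plays the role of the user pool's signing key
const { publicKey, privateKey } = crypto.generateKeyPairSync('rsa', { modulusLength: 2048 });
const keys = { 'demo-key': publicKey };
const token = signToken(
  { sub: 'user-1', token_use: 'id', 'cognito:groups': ['students'], exp: Math.floor(Date.now() / 1000) + 3600 },
  privateKey,
  'demo-key'
);
const claims = verifyToken(token, keys);

// Swapping the payload while keeping the old signature must fail verification
const [headerPart, , signaturePart] = token.split('.');
const forged = `${headerPart}.${b64url({ sub: 'attacker', token_use: 'id' })}.${signaturePart}`;

console.log(claims.sub);
console.log(verifyToken(forged, keys));
```

The point to take away is that the function never needs a secret: it only needs the public keys, looked up by the kid in the token's header.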

Go to the Lambda console in the US East (N. Virginia) region, since Lambda@Edge functions must be created in us-east-1. Click "Create function" and choose "Author from scratch".

Provide a name for the function, such as "CognitoAuthorizationLambda".

Choose the Node.js runtime.

In "Function code", put the code below. You'll need the packages jsonwebtoken and jwk-to-pem (npm install jsonwebtoken jwk-to-pem), and you'll need to replace REGION, USER_POOL_ID and USER_GROUP with the Cognito User Pool's region, ID, and the user group.

In "Execution role", create a new IAM Role and attach a policy to it with the contents below. You'll need to replace REGION, ACCOUNT_ID and USER_POOL_ID.

Click "Create function".

    const https = require('https');
    const jwt = require('jsonwebtoken');
    const jwkToPem = require('jwk-to-pem');

    // Replace these with your Cognito User Pool's region, ID, and user group
    const region = 'REGION';
    const userPoolId = 'USER_POOL_ID';
    const userGroup = 'USER_GROUP';

    // Cognito publishes the user pool's public signing keys (JWKS) at this URL
    const jwksUrl = `https://cognito-idp.${region}.amazonaws.com/${userPoolId}/.well-known/jwks.json`;

    // Cached between invocations while the execution environment stays warm
    let cachedKeys;

    // Download a URL and parse the response body as JSON
    const fetchJson = (url) => new Promise((resolve, reject) => {
      https.get(url, (res) => {
        let body = '';
        res.on('data', (chunk) => { body += chunk; });
        res.on('end', () => {
          try {
            resolve(JSON.parse(body));
          } catch (error) {
            reject(error);
          }
        });
      }).on('error', reject);
    });

    // Fetch the public keys once and index them by key ID (kid)
    const getPublicKeys = async () => {
      if (!cachedKeys) {
        const { keys } = await fetchJson(jwksUrl);
        cachedKeys = keys.reduce((agg, current) => {
          const jwk = { kty: current.kty, n: current.n, e: current.e };
          const key = jwkToPem(jwk);
          agg[current.kid] = { instance: current, key };
          return agg;
        }, {});
      }
      return cachedKeys;
    };

    const isTokenValid = async (token) => {
      try {
        const publicKeys = await getPublicKeys();
        // The first section of a JWT is the header, which names the signing key
        const tokenSections = (token || '').split('.');
        const headerJSON = Buffer.from(tokenSections[0], 'base64').toString('utf8');
        const { kid } = JSON.parse(headerJSON);
        const key = publicKeys[kid];
        if (key === undefined) {
          throw new Error('Claim made for unknown kid');
        }
        // Throws if the signature is wrong or the token has expired
        const claim = jwt.verify(token, key.key, { algorithms: ['RS256'] });
        const groups = claim['cognito:groups'] || [];
        return groups.includes(userGroup) && claim.token_use === 'id';
      } catch (error) {
        console.error(error);
        return false;
      }
    };

    exports.handler = async (event) => {
      const request = event.Records[0].cf.request;
      const headers = request.headers;

      if (headers.authorization && headers.authorization[0].value) {
        // Expects a header of the form "Authorization: Bearer <token>"
        const token = headers.authorization[0].value.split(' ')[1];
        const isValid = await isTokenValid(token);
        if (isValid) {
          // Returning the request lets CloudFront continue processing it
          return request;
        }
      }

      // Return a 401 Unauthorized response if the token is not valid
      return {
        status: '401',
        statusDescription: 'Unauthorized',
        body: 'Unauthorized',
        headers: {
          'www-authenticate': [{ key: 'WWW-Authenticate', value: 'Bearer' }],
          'content-type': [{ key: 'Content-Type', value: 'text/plain' }]
        }
      };
    };

And here's the policy for the execution role:

    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Effect": "Allow",
          "Action": [
            "logs:CreateLogGroup",
            "logs:CreateLogStream",
            "logs:PutLogEvents"
          ],
          "Resource": "arn:aws:logs:*:*:*"
        },
        {
          "Effect": "Allow",
          "Action": [
            "cognito-idp:ListUsers",
            "cognito-idp:GetUser"
          ],
          "Resource": "arn:aws:cognito-idp:REGION:ACCOUNT_ID:userpool/USER_POOL_ID"
        }
      ]
    }

Step 6: Add the Lambda@Edge function to the CloudFront distribution

CloudFront can run Lambda functions when content is requested, or when a response is returned. These functions are called Lambda@Edge because they run at the edge locations where CloudFront caches the content, so the user doesn't need to wait for the round-trip from the edge location to an AWS region. In this case, the user needs to wait anyway, because our Lambda@Edge accesses the Cognito user pool, which is in our region. There's no way around this that I know of.

In the CloudFront console, select the distribution you created earlier.

Choose the "Behaviors" tab and edit the existing default behavior.

Under "Lambda Function Associations", choose the "Viewer Request" event type.

Enter the ARN of the Lambda function you created in the previous step. Lambda@Edge needs the ARN of a published version (one that ends in a version number), not $LATEST.

Click "Save changes" to update the behavior.
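Before wiring the function to the distribution, it can help to see what a Viewer Request event looks like and to run the handler's control flow locally. The sketch below is an illustration only: makeViewerRequestEvent and makeHandler are hypothetical helpers, the event contains just the fields our handler reads, and the token check is a stub instead of real JWT verification.

```javascript
// Build a minimal CloudFront viewer-request event (only the fields the handler reads)
function makeViewerRequestEvent(uri, authorizationHeader) {
  const headers = {};
  if (authorizationHeader) {
    // CloudFront lowercases header names and wraps each value in an array
    headers.authorization = [{ key: 'Authorization', value: authorizationHeader }];
  }
  return { Records: [{ cf: { request: { method: 'GET', uri, headers } } }] };
}

// Same control flow as the authorizer from Step 5: forward the request or answer 401.
// isTokenValid is injected so the flow can be tested without Cognito.
function makeHandler(isTokenValid) {
  return async (event) => {
    const request = event.Records[0].cf.request;
    const auth = request.headers.authorization;
    const token = auth && auth[0].value ? auth[0].value.split(' ')[1] : undefined;
    if (token && (await isTokenValid(token))) {
      return request; // CloudFront continues to the cache or the origin
    }
    return { status: '401', statusDescription: 'Unauthorized', body: 'Unauthorized' };
  };
}

// Stub validator: accepts exactly one made-up token
const handler = makeHandler(async (token) => token === 'good-token');

const allowed = handler(makeViewerRequestEvent('/courses/intro.mp4', 'Bearer good-token'));
const denied = handler(makeViewerRequestEvent('/courses/intro.mp4'));

Promise.all([allowed, denied]).then(([ok, rejected]) => {
  console.log(ok.uri);          // the original request is passed through
  console.log(rejected.status); // the generated response short-circuits the request
});
```

Returning the request object tells CloudFront to keep going; returning an object with a status tells it to respond immediately without touching the cache or the bucket.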

Step 7: Update the Content Delivery microservice

Before, our Content Delivery microservice only returned the public URLs of the S3 objects. Now, it needs to return the URL of the CloudFront distribution.

I'm glossing over the details of how Content Delivery knows which object to access. Honestly, I'm not even sure if we really need the Content Delivery microservice at this point. But I'm using it to teach you how to secure an S3 bucket using Cognito, so bear with me.

Modify the Content Delivery microservice to return the CloudFront distribution domain, something like d111111abcdef8.cloudfront.net, for the requested content.

Test and deploy these changes.
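As a rough idea of what that change could look like, here's a small sketch that turns an object key into a CloudFront URL. It assumes the microservice knows the object key of the requested video; toCloudFrontUrl is a hypothetical helper and the key below is an invented example.

```javascript
// Build the CloudFront URL for an object stored in the bucket.
// toCloudFrontUrl is a hypothetical helper; the key below is an example value.
function toCloudFrontUrl(distributionDomain, objectKey) {
  // Encode each path segment so spaces and special characters survive the trip
  const path = objectKey.split('/').map(encodeURIComponent).join('/');
  return `https://${distributionDomain}/${path}`;
}

const contentUrl = toCloudFrontUrl('d111111abcdef8.cloudfront.net', 'courses/aws-101/intro video.mp4');
console.log(contentUrl);
```

Since CloudFront uses the bucket as its origin, the path in the CloudFront URL is the same as the object key in S3.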

Step 8: Update the frontend code

Before, we were just requesting a public URL, and we didn't need any authentication headers. Now we need to include the auth data in the request, just like we'd already be doing for the requests that access our microservices.

Update the frontend code to include the auth data in the content requests.
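Here's a minimal sketch of what that frontend change might look like, assuming the app already holds the user's Cognito ID token after sign-in (the Lambda@Edge function checks for an ID token sent as a Bearer token). fetchContent is a hypothetical function name, and the stub fetch only exists so the example runs without a network.

```javascript
// Fetch protected content, sending the Cognito ID token as a Bearer token.
// fetchImpl is injectable so the function can be exercised without a network.
async function fetchContent(contentUrl, idToken, fetchImpl = fetch) {
  const response = await fetchImpl(contentUrl, {
    headers: { Authorization: `Bearer ${idToken}` },
  });
  if (response.status === 401) {
    throw new Error('Not authorized to view this content');
  }
  if (!response.ok) {
    throw new Error(`Unexpected status ${response.status}`);
  }
  return response.blob();
}

// Demo with a stub in place of the real fetch; it records what was sent
const calls = [];
const stubFetch = async (url, options) => {
  calls.push({ url, options });
  return { status: 200, ok: true, blob: async () => 'video-bytes' };
};

const demoResult = fetchContent(
  'https://d111111abcdef8.cloudfront.net/courses/aws-101/intro.mp4',
  'example-id-token',
  stubFetch
);
demoResult.then((body) => console.log(body, calls[0].options.headers.Authorization));
```

In the browser you'd call fetchContent(url, idToken) and let it use the real fetch. Keep in mind that a cross-origin request with an Authorization header will most likely trigger a CORS preflight, which the distribution needs to be able to answer.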

Step 9: Test the solution end-to-end

Don't just test that it works. Understand how it can fail, and test that. In this case, a failure would be for non-users to be able to access the content, which is the very thing we're trying to fix.

Sign in to the app and go to a course.

Verify that the course content is displayed correctly and that the URLs are pointing to the CloudFront distribution domain (e.g., d111111abcdef8.cloudfront.net).

Test accessing the S3 objects directly using their public URLs to make sure they are no longer accessible.
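The checks above, including the failure cases, can be scripted. The sketch below is one way to do it, under a few assumptions: the URLs and token are placeholders, runProbes is a hypothetical helper, and I'm assuming a private bucket answers anonymous requests with 403 while our Lambda@Edge function answers missing tokens with 401. The demo runs against a stub that simulates those responses; against real infrastructure you'd drop the stub and use real URLs and a real ID token.

```javascript
// Placeholder URLs for the same object, reached directly and through CloudFront
const S3_URL = 'https://simple-aws-courses-content.s3.amazonaws.com/courses/intro.mp4';
const CF_URL = 'https://d111111abcdef8.cloudfront.net/courses/intro.mp4';

// Each probe states the status we expect, covering the ways the setup could fail
const probes = [
  { name: 'S3 directly, no auth', url: S3_URL, token: null, expected: 403 },
  { name: 'CloudFront, no token', url: CF_URL, token: null, expected: 401 },
  { name: 'CloudFront, valid token', url: CF_URL, token: 'example-id-token', expected: 200 },
];

// Run every probe and report whether the observed status matches the expectation
async function runProbes(probeList, fetchImpl = fetch) {
  const results = [];
  for (const probe of probeList) {
    const headers = probe.token ? { Authorization: `Bearer ${probe.token}` } : {};
    const response = await fetchImpl(probe.url, { headers });
    results.push({ name: probe.name, status: response.status, pass: response.status === probe.expected });
  }
  return results;
}

// Stub that simulates a correctly secured setup, so the sketch runs offline
const stubFetch = async (url, { headers }) => {
  if (url.startsWith('https://simple-aws-courses-content.s3.')) return { status: 403 };
  return { status: headers.Authorization ? 200 : 401 };
};

const probeResults = runProbes(probes, stubFetch);
probeResults.then((results) => console.table(results));
```

If the first probe ever comes back with a 200, the bucket is still public and the rest of the setup isn't protecting anything.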

The Cloud Architecture Process

In this article and the previous two (microservices design and microservices security) we've been dealing with the same scenario, focusing on different aspects. We designed our microservices, secured them, and secured the content of our courses. We found problems and we fixed them. We made mistakes (well, I did, you're just reading I guess) and we fixed them.

This is how cloud architecture is designed and built.

First we understand what's going on, and what needs to happen. That's why these articles start with me describing the scenario. Otherwise we end up building a solution that's looking for a problem (which is as common as it is bad, unfortunately).

We don't do it from scratch though. There are styles and patterns. We consider them all, evaluate the advantages and tradeoffs, and decide on one or a few. In this case, we decided that microservices would be the best way to build this (rather arbitrarily, just because I wanted to talk about them).

Then we look for problems. Potential functionality issues, unintended consequences, security issues, etc. We analyze the limits of our architecture (for example scaling) and how it behaves at those limits (for example scaling speed). If we want to make our architecture as complete as possible, we'll look to expand those limits, ensure the system can scale as fast as possible, all attack vectors are covered, etc. That's overengineering.

If you want to make your architecture as simple as possible, you need to understand today's issues and remove things (instead of adding them) until your architecture only solves those. Keep in mind tomorrow's issues, consider the probability of them happening and the cost of preparing for them, and choose between: Solving for them now, making the architecture easy to change so you can solve them easily in the future, or not doing anything. For most issues, you don't do anything. For the rest, you make your architecture easy to change (which is part of evolutionary architectures).

I think you can guess which one I prefer. Believe it or not, it's actually harder to make it simpler. The easiest approach to architecture is to pile up stuff and let someone else live with that (high costs, high complexity, bad developer experience, etc).

Best Practices for Securing Access to S3

Operational Excellence

Monitoring: Metrics, metrics everywhere… CloudFront shows you a metrics dashboard for your distribution (powered by CloudWatch), and you can check CloudWatch itself as well.

Monitor the Lambda@Edge function: It's still a Lambda function; all the @Edge does is say that it runs at CloudFront's edge locations. Treat it as another piece of code, and monitor it accordingly.

Security

Enable HTTPS: You can configure the CloudFront distribution to use HTTPS. Use Certificate Manager to get a TLS certificate for your domain, and apply it directly to the CloudFront distribution.

Make the S3 bucket private: We already talked about this, but it's worth repeating. If you add a new layer on top of an existing resource, make sure malicious actors can't access that resource directly. In this case, it's not just making it private, but also setting a bucket policy so only the CloudFront distribution can access it (remember not to trust anything, even if it's running inside your AWS account).

Set up WAF in CloudFront: Just like with API Gateway, we can also set up a WAF Web ACL associated with our CloudFront distribution, to protect from common exploits.

Reliability

Configure CloudFront error pages: You can customize error pages in the CloudFront distribution, so users have a better user experience when encountering errors. Not actually relevant to our solution, since CloudFront is only accessed by our JavaScript code, but I thought I should mention it.

Enable versioning on the S3 bucket: With versioning enabled, S3 stores old versions of the content. That way you're protected in case you accidentally push the wrong version of a video, or accidentally delete the video from the S3 bucket.

Performance Efficiency

Optimize content caching: We stuck with the defaults, but you should configure the cache behavior settings in your CloudFront distribution to balance between content freshness and the reduced latency of cached content. Consider how often content changes (not often, in this case!).

Compress content: Enable automatic content compression in CloudFront to reduce the size of the content served to users, which can help improve performance and reduce data transfer costs.

Cost Optimization

Configure CloudFront price class: CloudFront offers several price classes, based on the geographic locations of the edge locations it uses. Figure out where your users are, and choose the price class accordingly. Don't pick global “just in case”. Remember that any user can access any location, it's just going to take them around 200 ms longer. Analyze the tradeoffs of cost vs user experience for those users.

Enable CloudFront access logs: Enable CloudFront access logs to analyze usage patterns and identify opportunities for cost optimization, such as adjusting cache settings or updating the CloudFront price class. Basically, if you want to make a data-driven decision, this gets you the data you need.

Consider S3 storage classes and lifecycle policies: Storage classes make it cheaper to store infrequently accessed content (and more expensive to serve, but since it's infrequently accessed, you end up saving money). Lifecycle policies are rules to automate transitioning objects to storage classes. If you're creating courses and you find nobody's accessing your course, I'd rather put these efforts into marketing the course, or retire it if it's too old. But I figured this was a good opportunity to mention storage classes and lifecycle policies.