In event-driven architectures, your system's behavior is distributed across multiple services, which communicate asynchronously using events. Single-service transactions are pretty straightforward, but when a transaction needs to span multiple distributed services (which is what we call a distributed transaction), things can get a lot more complex.

The Challenge of Distributed Transactions

In a monolithic application, transactions are typically managed by the database system. You start a transaction, perform a series of operations, and then either commit or rollback the entire thing. The database ensures that all or none of the operations are applied, maintaining data consistency.

However, in an event-driven architecture, especially a microservices one, we're dealing with multiple independent services, each potentially with its own database. We can't rely on a single database transaction to span all these services. Instead, we need to coordinate actions across multiple independent systems, each of which could fail independently.

The problem isn't just that the services are distributed. We're aiming for loose coupling via asynchronous communication, which goes against our typical transaction management techniques. We can't use synchronous communication to lock all databases, and most of our system is likely built around eventual consistency.

The question is then, how do we ensure that a series of operations across multiple services either all complete successfully or all fail without leaving our system in an inconsistent state? This is the core challenge of distributed transactions.

Strategies for Handling Distributed Transactions

That's the problem statement. Here are the solutions that we have at our disposal.

Avoid Distributed Transactions

Yes, I'm serious. This is a valid architecture decision in several cases. Sometimes what initially looks like a distributed transaction can be redesigned into a series of independent local transactions, so long as we can guarantee that the failure of one or more of those local transactions doesn't leave the system in an inconsistent state.

For example, instead of having a transaction that updates data in both an Order service and a Payment service, you might decide that the Order service should own all order-related data, including payment status. The Payment service would then only be responsible for interfacing with external payment providers and notifying the Order service of the result. Orders can be created in a Payment pending status, and if the Payment service fails to execute the payment, or fails to notify the Order service, the Order is still in a valid state. We do need to ensure at-least-once communication between the Payment service and the Order service, but it doesn't need to be a transaction that locks both services until the payment completes.
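
Here's a minimal sketch of that idea in Python, with an in-memory dictionary standing in for the Order service's database. The class names, method names and status values (`PAYMENT_PENDING`, `PAID`, `PAYMENT_FAILED`) are my own assumptions for illustration. The point is that each method is a plain local transaction, and a duplicate payment notification does no harm.

```python
from dataclasses import dataclass, field


@dataclass
class Order:
    order_id: str
    # A pending order is a valid state, even if the payment result never arrives.
    status: str = "PAYMENT_PENDING"


@dataclass
class OrderService:
    orders: dict = field(default_factory=dict)  # stand-in for the service's database

    def create_order(self, order_id: str) -> Order:
        # Local transaction 1: the order is consistent on its own.
        order = Order(order_id)
        self.orders[order_id] = order
        return order

    def on_payment_result(self, order_id: str, succeeded: bool) -> None:
        # Local transaction 2, triggered by an at-least-once notification.
        # Only a pending order changes state, so duplicates are ignored.
        order = self.orders[order_id]
        if order.status != "PAYMENT_PENDING":
            return
        order.status = "PAID" if succeeded else "PAYMENT_FAILED"


service = OrderService()
service.create_order("order-1")
service.on_payment_result("order-1", succeeded=True)
service.on_payment_result("order-1", succeeded=True)  # duplicate delivery
print(service.orders["order-1"].status)
```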

This strategy doesn't work for all scenarios, and it might mean changing the boundaries between services, so even when it's possible it might not be the easiest solution. But it's worth considering as the first option.

Two-Phase Commit (2PC)

Two-Phase Commit (2PC) is an atomic commitment protocol that ensures all participants in a distributed transaction agree on whether to commit or abort the transaction. Here's how it works:

Prepare Phase: A coordinator (a separate service or one of the participating services) asks all participants to prepare for the transaction. Each participant locks the necessary resources, attempts to apply the necessary changes, checks if they're valid, and responds whether it's ready to commit.

Commit Phase: If all participants respond that they're ready, the coordinator tells everyone to commit. If any participant says it can't commit (e.g. the changes aren't valid, it's busy with another transaction, or communication fails), or if the coordinator times out waiting for a response, it tells everyone to abort, and each service rolls back its local changes. A participant that hasn't voted yet can also time out while waiting for the coordinator and abort on its own. Once it has voted that it's ready, though, it has to keep its locks and wait for the coordinator's decision.
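
The two phases can be sketched in a few lines of Python. This is a toy, in-memory version under my own assumptions: the `Participant` class and the `two_phase_commit` function are hypothetical names, and there's no real locking, networking or crash recovery. It only shows the shape of the protocol: collect every vote first, then send everyone the same decision.

```python
class Participant:
    """In-memory stand-in for a service taking part in the transaction."""

    def __init__(self, name: str, can_commit: bool = True):
        self.name = name
        self.can_commit = can_commit
        self.state = "idle"

    def prepare(self) -> bool:
        # A real participant would lock resources and validate the change here.
        if self.can_commit:
            self.state = "prepared"
            return True
        self.state = "aborted"
        return False

    def commit(self) -> None:
        self.state = "committed"

    def abort(self) -> None:
        self.state = "aborted"


def two_phase_commit(participants: list[Participant]) -> bool:
    # Phase 1 (Prepare): collect votes. An exception (e.g. a timeout) counts as "no".
    votes = []
    for participant in participants:
        try:
            votes.append(participant.prepare())
        except Exception:
            votes.append(False)

    # Phase 2 (Commit): every participant receives the same decision.
    decision = all(votes)
    for participant in participants:
        if decision:
            participant.commit()
        else:
            participant.abort()
    return decision


order, payment = Participant("order"), Participant("payment", can_commit=False)
print(two_phase_commit([order, payment]), order.state, payment.state)
```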

Two-Phase Commit guarantees strong consistency in distributed transactions. It's a pretty standard solution, and it's how transactions in many distributed databases work, including Amazon DynamoDB. It's very robust, and can gracefully handle failures due to concurrent transactions, incompatible changes or communication failures. The biggest problem is that it locks all the resources involved until the transaction either commits or aborts.

Some databases minimize the impact of the lock by locking only the tables, rows or items involved in the transaction. Another common strategy is to queue up transactions that would affect locked resources, so the database engine handles the waiting and retrying, and the service attempting the transaction only sees a slight delay and doesn't need to deal with concurrency errors and retries.

Another problem is that 2PC consumes roughly twice as many resources, since every operation is performed twice: once in the Prepare phase to confirm that it's valid and can be performed, and again in the Commit phase. In case you didn't know, that's why DynamoDB Transactions consume twice the usual capacity.

You can implement 2PC for your distributed services, but it's not that common to apply directly. Still, the idea is critical for the following two solutions, so I didn't want to skip the explanation.

Saga Pattern

The Saga pattern is a more event-driven friendly approach to managing distributed transactions. Instead of trying to maintain a single, atomic transaction across services, the Saga pattern breaks the transaction into a series of local transactions, each performed by a single service.

The key is that each local transaction updates the database and publishes an event, and this event triggers the next local transaction. If any step fails, the saga executes compensating transactions to undo the changes made by the preceding steps. During the execution or rollback of the saga the system might be in a temporary inconsistent state, but at the end of the execution (or of the rollback if something fails) it will always be in a consistent state. That's eventual consistency.

There are two main ways to implement the Saga pattern: Orchestration and Choreography.

Orchestration

In this approach there's a central Orchestrator service that manages the entire process end-to-end. Its job is to know all the steps in the transaction and coordinate the execution by telling each service what to do and when.

The Orchestrator centralizes the responsibility of controlling the transaction and ensuring that it succeeds or fails as a whole, and that all necessary rollbacks and corrective actions (if any) are requested from the services. It also serves as a centralized definition of the steps involved in the transaction.

Using our e-commerce example again:

The Orchestrator receives the order request.

It tells the Order service to create an order.

It tells the Inventory service to reserve items.

It tells the Payment service to process payment.

It tells the Order service to mark the order as complete.

An orchestrated transaction

If any step fails, the Orchestrator is responsible for invoking the appropriate compensating actions to ensure the system is left in a consistent state. More importantly, it's responsible for figuring out which compensating actions need to be taken (if any).
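
Here's a rough sketch of what that responsibility looks like in code. It's a hypothetical, in-process orchestrator (`run_saga` and the `Step` tuple are names I made up), where each step is a pair of plain functions instead of a call to a remote service. When a step fails, it compensates the steps that already completed, in reverse order.

```python
from typing import Callable

# (step name, action, compensating action)
Step = tuple[str, Callable[[], None], Callable[[], None]]


def run_saga(steps: list[Step]) -> list[str]:
    """Run steps in order; on failure, compensate completed steps in reverse."""
    log: list[str] = []
    completed: list[Step] = []
    for step in steps:
        name, action, _ = step
        try:
            action()
        except Exception:
            log.append(f"{name}: failed")
            for done_name, _, compensate in reversed(completed):
                compensate()
                log.append(f"{done_name}: compensated")
            return log
        completed.append(step)
        log.append(f"{name}: done")
    return log


state = {"order": None, "reserved": False}


def create_order() -> None:
    state["order"] = "created"


def cancel_order() -> None:
    state["order"] = "cancelled"


def reserve_items() -> None:
    state["reserved"] = True


def release_items() -> None:
    state["reserved"] = False


def process_payment() -> None:
    raise RuntimeError("card declined")  # simulate a failing step


log = run_saga([
    ("create order", create_order, cancel_order),
    ("reserve items", reserve_items, release_items),
    ("process payment", process_payment, lambda: None),
])
print(log)
print(state)
```

Note that the failed step itself isn't compensated here, which assumes a failed step made no changes. That assumption doesn't always hold in real systems.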

Orchestration-based sagas are easier to understand and manage for complex processes. However, they introduce a central point of control, which can be a bottleneck or single point of failure if not designed carefully. And the communication between the Orchestrator and the rest of the services is still complex and susceptible to errors.

Note that orchestrated systems aren't event-driven. The orchestrator knows exactly who it's talking to, even if you hide the implementation details behind an API Gateway (as you should!), and even if you used a message bus. The key isn't the implementation detail, but the intent: The Orchestrator has some concept of Inventory service mapped to some identity (typically a DNS name, but it could be anything addressable), and it uses that identity to tell that conceptual service to "reserve items". That goes against the idea of event-driven architectures where services just fire events without knowledge of who might receive them or what might get done in response to them.

AWS Step Functions is a great example of an Orchestrator, and one of the best ways to deal with complex distributed transactions in AWS.

You might also have heard the term Orchestrated Microservices. That refers to microservices in which transactions are handled by an Orchestrator. You may find either a single, dedicated orchestrator for all orchestrated distributed transactions in the system, or a microservice that acts as an entrypoint to the transaction and takes on the additional responsibility of orchestrating it. In our example above, the Order service could fulfill that role.

Choreography

In Choreographed Transactions there is no central coordinator. Instead, each service listens for events from other services and decides when to execute its local transaction, or the necessary corrective actions. More importantly, each service decides which actions are the correct ones to execute.

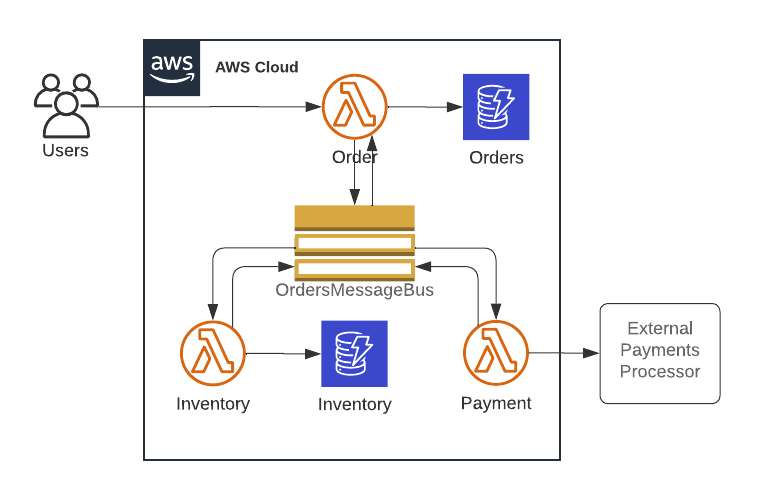

For example, in an e-commerce system:

The Order service receives a message, creates an order, and publishes an "OrderCreated" event.

The Inventory service listens for "OrderCreated", reserves the items, and publishes "InventoryReserved".

The Payment service listens for "InventoryReserved", processes the payment, and publishes "PaymentProcessed".

The Order service listens for "PaymentProcessed" and marks the order as complete.

If any step fails (e.g., payment fails), the service publishes a failure event. Other services listen for this and execute compensating actions (e.g., Inventory releases the reserved items).
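
To make the flow above concrete, here's a toy version in Python. The `EventBus` class is a hypothetical synchronous, in-memory stand-in for a real message broker, and the `"PaymentFailed"` event name is my assumption for the failure event. Each handler only knows which event triggers it and which event it publishes, and nothing in the code describes the transaction as a whole.

```python
from collections import defaultdict
from typing import Callable


class EventBus:
    """Toy synchronous pub/sub; a real system would use a message broker."""

    def __init__(self) -> None:
        self.handlers: dict[str, list[Callable[[dict], None]]] = defaultdict(list)
        self.history: list[str] = []

    def subscribe(self, event_type: str, handler: Callable[[dict], None]) -> None:
        self.handlers[event_type].append(handler)

    def publish(self, event_type: str, payload: dict) -> None:
        self.history.append(event_type)
        for handler in list(self.handlers[event_type]):
            handler(payload)


def wire_services(bus: EventBus, state: dict, payment_ok: bool) -> None:
    def inventory_on_order_created(event: dict) -> None:
        state["reserved"] = True
        bus.publish("InventoryReserved", event)

    def payment_on_inventory_reserved(event: dict) -> None:
        bus.publish("PaymentProcessed" if payment_ok else "PaymentFailed", event)

    def order_on_payment_processed(event: dict) -> None:
        state["order"] = "complete"

    def inventory_on_payment_failed(event: dict) -> None:
        state["reserved"] = False  # compensating action

    def order_on_payment_failed(event: dict) -> None:
        state["order"] = "cancelled"  # compensating action

    bus.subscribe("OrderCreated", inventory_on_order_created)
    bus.subscribe("InventoryReserved", payment_on_inventory_reserved)
    bus.subscribe("PaymentProcessed", order_on_payment_processed)
    bus.subscribe("PaymentFailed", inventory_on_payment_failed)
    bus.subscribe("PaymentFailed", order_on_payment_failed)


bus = EventBus()
state = {"order": "created", "reserved": False}
wire_services(bus, state, payment_ok=False)
bus.publish("OrderCreated", {"order_id": "order-1"})
print(bus.history)
print(state)
```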

A choreographed transaction

If you look at any given part of the system, you won't see a transaction, but instead a single service that performs some action when some trigger occurs. For example, all that Inventory does is reserve items and publish "InventoryReserved" whenever it receives "OrderCreated". It doesn't know who created the order or what happens afterwards. Services don't technically "know" things, but the key point here is that you can't know those things by looking at the service.

You could argue that in Orchestrated transactions you can't know that either from just looking at the Inventory service, and I agree. But there is a single place where you can get all the information: the Orchestrator. In a Choreographed transaction there is no centralized place with all the transaction logic. Instead, you have all the same transaction steps and rules but distributed across the participating services, in the form of triggers, actions to be done when those triggers occur, and messages that are published.

To implement a choreographed transaction you would typically use message buses like Amazon SNS, possibly combined with queue services like Amazon SQS to handle retries. You may also see features like Amazon S3 Event Notifications and Amazon DynamoDB Streams.

Choreography-based sagas are very flexible, because the intervening services are decoupled even at the conceptual level. If you need to add actions that need to happen when an order is created, all you need to do is make another service (possibly a new one) listen to the "OrderCreated" event.

The big downside is that it's very fucking complex. It's harder to understand, harder to test, and harder to implement complex logic like waiting for parallel actions. Is it worth it? Usually yes for either simple interactions or transactions that are expected to change often.

Tips for Implementing Distributed Transactions

That was a long explanation! I just want to add to it a few tips, useful for all forms of distributed transactions.

Event Design

Events should be agnostic to what services handle them, and to what services emit them. However, they should include information about the actions that caused them to be emitted, and if relevant about the context in which that action occurred. Some basic things that you might want to include in your events:

A unique event ID

A unique transaction ID if it's part of a transaction. This ID should be generated by the service that initiates the transaction, and carried over across events by services that receive transaction events and emit other transaction events.

All the information that the receiver will need. For example, for the "InventoryReserved" event you will want to include the amount of the order, which the Payment service needs to execute the payment.

Information specific to the action that resulted in the event being emitted, even if it's not relevant for the services that listen for this event. For example, neither the Inventory nor the Payment services need the OrderID, but including it will help you with traceability.

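As a rough illustration of those fields, here's one possible event envelope in Python. The `Event` class, the `follow_up` helper and the payload keys are assumptions of mine, not a standard format. What matters is that every event gets a fresh event ID, while the transaction ID is generated once and carried over.

```python
import uuid
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Event:
    event_type: str
    transaction_id: str  # generated once by the service that starts the transaction
    payload: dict        # what receivers need, plus context for traceability
    event_id: str = field(default_factory=lambda: str(uuid.uuid4()))


def follow_up(received: Event, event_type: str, payload: dict) -> Event:
    """Emit an event in the same transaction: new event ID, same transaction ID."""
    return Event(event_type, received.transaction_id, payload)


created = Event(
    "OrderCreated",
    transaction_id=str(uuid.uuid4()),
    payload={"order_id": "order-1", "amount": 4200},
)
reserved = follow_up(
    created,
    "InventoryReserved",
    {"order_id": "order-1", "amount": 4200},  # Payment needs the amount
)
print(reserved.transaction_id == created.transaction_id)
print(reserved.event_id != created.event_id)
```
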
Idempotency

There is no "exactly once" delivery of events. There is either "at most once" or "at least once". To ensure no messages are lost, you'll want "at least once". That means your services need to be prepared to receive duplicates, and either be able to identify and drop them, or only execute idempotent actions.

By the way, idempotency means that an action can be executed an arbitrary number of times and it will have the same result as if it was executed once. Ideally all actions in all systems should be idempotent, but in event-driven systems it's even more important. Fortunately, event IDs help a lot with this.
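
Here's a minimal sketch of dropping duplicates by event ID. The `PaymentHandler` class and the event fields are hypothetical, and the set of seen IDs lives in memory. In a real service you'd want to store the processed event ID in the same local transaction as the side effect, so that a crash between the two can't cause a double charge or a lost event.

```python
class PaymentHandler:
    def __init__(self) -> None:
        self.seen_event_ids: set[str] = set()  # stand-in for a persistent store
        self.charges: list[int] = []

    def handle(self, event: dict) -> bool:
        """Process the event once; return False if it was a duplicate."""
        if event["event_id"] in self.seen_event_ids:
            return False
        self.charges.append(event["amount"])
        self.seen_event_ids.add(event["event_id"])
        return True


handler = PaymentHandler()
event = {"event_id": "evt-1", "amount": 4200}
print(handler.handle(event))  # first delivery
print(handler.handle(event))  # redelivery of the same event
print(handler.charges)
```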

Compensating Actions

Compensating actions aren't just undoing exactly what we did, like deleting the single database record we inserted. We need to think about the different scenarios and the potential consequences. What happens if we're using a relational database and that row is being referenced by a foreign key in another table? We can apply a cascade delete, but should we? And what happens if we should apply a cascade delete, but we're not using a relational database engine that can do it automatically?

You also need to consider that sometimes the compensating action is not the exact reverse action. For example, if a step involved deducting money from a user's account, the compensating action isn't just adding back the same amount of money. You might need to consider fees, exchange rates (for international transactions), or other factors.

Timeouts and Partial Execution

Basically, be prepared for anything to fail at any point. But most importantly, be prepared for false positives: situations where waiting for a step times out, but the step actually does end up occurring.
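
One way to handle that case is to keep track of the step's status and treat a late success as something that needs its own compensation. The sketch below is hypothetical: the `PaymentStepTracker` class, the status names and the action strings are all made up for illustration, and a real implementation would persist the status instead of keeping it in memory.

```python
class PaymentStepTracker:
    """Tracks one step that may report success after we gave up waiting."""

    def __init__(self) -> None:
        self.status = "WAITING"
        self.actions: list[str] = []  # actions we would request from other services

    def on_timeout(self) -> None:
        if self.status == "WAITING":
            self.status = "TIMED_OUT"
            self.actions.append("release inventory")

    def on_payment_processed(self) -> None:
        if self.status == "WAITING":
            self.status = "COMPLETED"
            self.actions.append("mark order complete")
        elif self.status == "TIMED_OUT":
            # The step we treated as failed actually happened: compensate it too.
            self.status = "COMPENSATED"
            self.actions.append("refund payment")


tracker = PaymentStepTracker()
tracker.on_timeout()
tracker.on_payment_processed()  # late success after the timeout
print(tracker.status, tracker.actions)
```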

Conclusion

Distributed transactions are hard. I don't aim to make them any less hard, but hopefully you now understand a bit better exactly what is hard about them, and how to handle them even if they are hard.

Hopefully you'll be able to avoid some distributed transactions, but very likely you won't be able to avoid them all in a distributed system, and especially if you're using an event-driven architecture. They are a necessary part of distributed systems. But know that they are manageable.

Some final tips: For simple interactions that you expect to remain simple, prefer choreographed transactions (which is essentially what you're already doing if you're using an event-driven architecture). For transactions with complex rules, prefer orchestration, ideally with AWS Step Functions (no need to reinvent the wheel).