On Monday, October 20th, many teams woke up to their pagers instead of their alarm clocks. AWS was down, and a big part of the internet went down with it. The cause? DNS, obviously. It's always DNS. But let's dive deeper into it, because it's pretty interesting how DynamoDB (the affected service, whose failure cascaded into others) manages DNS.

This is based on the detailed postmortem AWS released just three days after the incident. Kudos to the AWS team: the last time this happened (2023, with AWS Lambda), it took them 4 months to release a postmortem.

Back to this outage though. Yes, it was DNS. Specifically, it was a failure in the DNS of DynamoDB, a serverless NoSQL database that's a foundational service for AWS (meaning many other AWS services depend on it). In this outage, the dynamodb.us-east-1.amazonaws.com address started returning an empty DNS record, leaving other services unable to connect to DynamoDB. When I say “other services”, I mean both your own Lambda function trying to access your table, and other AWS services that use DynamoDB internally. Let's look at how DynamoDB manages its DNS to understand how it failed and why.

How DynamoDB Manages DNS

Diagram showing DNS management in DynamoDB

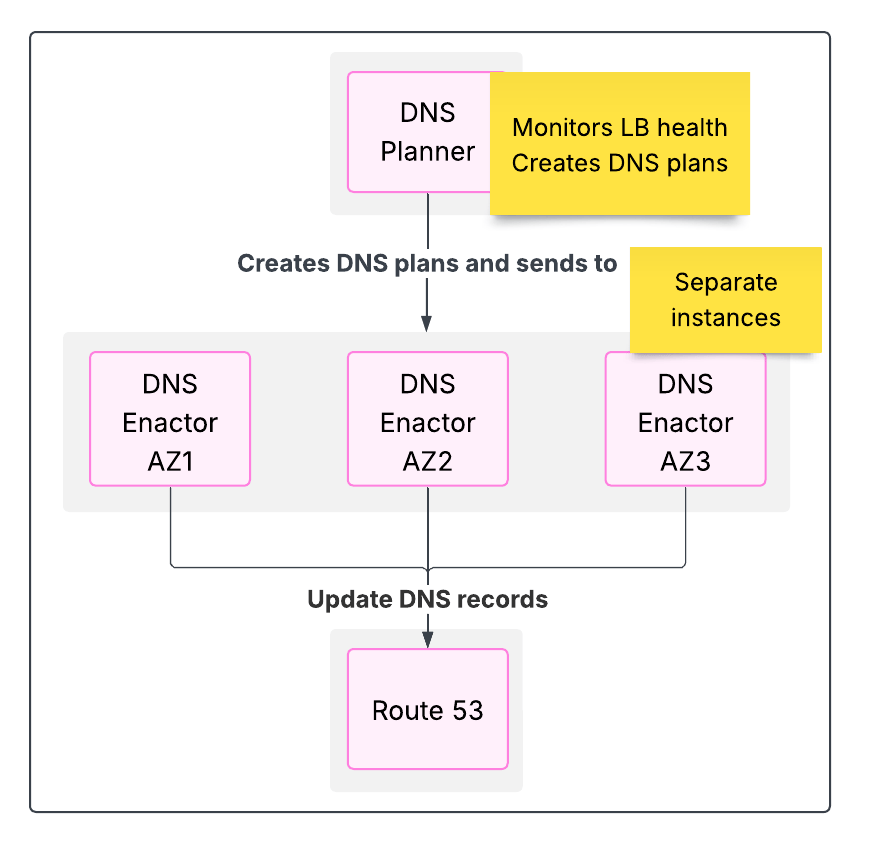

For availability reasons, there are two components:

The DNS Planner monitors the health and capacity of the many load balancers that need to receive the traffic, and periodically creates a new DNS plan consisting of a set of load balancers and weights. It doesn't apply the plan, it just creates it.

The DNS Enactor applies the required changes to Amazon Route53 following the DNS plan. This component runs as three completely independent copies, each in a different Availability Zone. Each instance of the Enactor performs the following steps:

Receive a new plan from the DNS Planner

Update the Route53 records according to the received plan, using Route53 transactions. This includes retry logic.

Clean up DNS records that are part of old DNS plans

With 3 Enactors running in parallel, race conditions should be expected. They're dealt with via eventual consistency: A DNS Enactor may enact an old plan, but since the updates are expected to happen quickly and there's a cleanup step, the next Enactor that runs can update the records with the newest plan.
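To make the moving parts concrete, here's a minimal Python sketch of a plan and an Enactor. Everything in it is my assumption for illustration: the names `DnsPlan`, `RecordStore` and `enact`, the integer plan version, and the in-memory dictionary standing in for Route53. It is not AWS's code, just a toy model of "check once that the plan is newer, then apply it".

```python
from dataclasses import dataclass, field


@dataclass
class DnsPlan:
    version: int   # hypothetical: a higher number means a newer plan
    weights: dict  # load balancer name -> traffic weight


@dataclass
class RecordStore:
    """In-memory stand-in for the Route53 records of one endpoint."""
    applied_version: int = 0
    records: dict = field(default_factory=dict)


def enact(store: RecordStore, plan: DnsPlan) -> bool:
    """Apply a plan if it is newer than what the store currently holds."""
    # The staleness check happens once, before any record is written.
    if plan.version <= store.applied_version:
        return False
    store.records = dict(plan.weights)
    store.applied_version = plan.version
    return True


store = RecordStore()
# Three Enactors receive different plans and run in arbitrary order.
for plan in (DnsPlan(2, {"lb-a": 60, "lb-b": 40}),
             DnsPlan(1, {"lb-a": 100}),
             DnsPlan(3, {"lb-b": 50, "lb-c": 50})):
    enact(store, plan)
print(store.applied_version, store.records)
```

In this toy version each `enact` call is instantaneous, so the newest plan always wins. The real system has no such luxury: applying a plan takes time, and that gap between the check and the last write is where things went wrong.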

How DynamoDB DNS Failed

The failure resulted from several unlikely events happening at the same time:

DNS Enactor #1 experienced significant delays due to lock contention

DNS Planner produced new plans at a higher rate than usual

DNS Enactor #2 processed these new plans at a surprisingly high rate

Here's the timeline, and how those three independent events combined into a failure scenario:

Enactor 1 started applying a DNS plan. Because of heavy lock contention, this took a while

DNS Planner generated many newer plans, making the plan Enactor 1 was still applying obsolete

Enactor 2 started applying a newer and valid DNS plan

Enactor 1 continued applying its old plan, overwriting the DNS records Enactor 2 had just written

Enactor 2 finished applying the newer and valid plan

Enactor 1 finished applying the plan it had (by now old and invalid), effectively overwriting the newer and valid plan Enactor 2 had applied

Enactor 2 executed its cleanup routine: Delete records that aren't part of a valid plan. This meant it deleted all the DNS records, since all DNS records were from the old plan Enactor 1 had been applying

Further executions of Enactor instances could not handle the inconsistent state of empty DNS records, so they failed to apply newer and valid plans

With no DNS records, DNS queries for dynamodb.us-east-1.amazonaws.com were returning no results, and nobody could connect to DynamoDB. This wasn't a problem for the Enactors themselves (they don't connect to DynamoDB), but it was an inconsistent state they didn't know how to deal with, so they couldn't apply new DNS plans. And that's how DynamoDB broke.
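The timeline above can be replayed in a few lines of Python. This is a deliberately simplified sketch under my own assumptions: records are a dictionary mapping an endpoint name to the version of the plan that wrote it, and the cleanup rule ("delete anything written by a plan more than `keep_last` versions behind the newest") is a guess at the shape of the real logic, not something the postmortem spells out. The function names and plan numbers are made up.

```python
def apply_plan(records: dict, endpoints: list, version: int) -> None:
    """Write every endpoint record, tagging it with the plan version."""
    for endpoint in endpoints:
        records[endpoint] = version


def cleanup(records: dict, newest_version: int, keep_last: int = 2) -> None:
    """Delete records written by plans much older than the newest one."""
    cutoff = newest_version - keep_last
    for endpoint in [e for e, v in records.items() if v <= cutoff]:
        del records[endpoint]


def simulate() -> dict:
    records = {}
    endpoints = ["lb-a", "lb-b", "lb-c"]
    # Enactor 1 checked plan 1 when it was still fresh, then stalled.
    # Meanwhile the Planner moved on and Enactor 2 applied plan 5.
    apply_plan(records, endpoints, version=5)
    # Enactor 1 finally finishes, overwriting everything with plan 1.
    apply_plan(records, endpoints, version=1)
    # Enactor 2 cleans up: every record now belongs to a stale plan.
    cleanup(records, newest_version=5)
    return records


print(simulate())  # {}
```

Each step is reasonable on its own. It's only the ordering that turns "overwrite" plus "clean up old stuff" into "delete everything".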

Flow diagram of the events that led to the DynamoDB failure

How To Fix DynamoDB

So, now we know how DynamoDB broke. And since many AWS services are dependent on it, this took down a significant portion of AWS, and a significant portion of the internet with it.

The above sections were based on information confirmed by AWS via their outage postmortem. What follows are my own ideas, not endorsed or sanctioned by AWS. In all honesty, these ideas are likely incorrect (if they were correct, I'd be working at AWS). There are so many details to take into account when working at the staggering scale of AWS, details the vast majority of us have never faced and will never face, and I'm sure to do a bad job at it. However, I'll do my best to explain why most ideas that sound good will likely not work.

Idea 1: Single Enactor

You can completely eliminate race conditions if you have a single Enactor. This would definitely prevent the failure that occurred on this occasion, but it would expose DynamoDB DNS to Availability Zone failures.

Imagine a scenario where the Availability Zone that hosts the Enactor fails. This also means a significant portion of the Load Balancers that run behind DynamoDB will be failing, and without a functioning Enactor your DNS records won't be updated, sending traffic to failing endpoints.

You could argue that this may be fixed by running multiple copies of the Enactor in a primary and standby architecture. The problem with this is that you can't assume the primary Enactor will always fail in a detectable way. If it starts failing silently, you won't execute your failover strategy and will need to live with stale DNS records. At this scale of tens of thousands of servers, that means losing a significant portion of your capacity simply because you can't route traffic to new instances, and you will only realize it when it becomes a significant problem.

The only way to detect a silent failure is with a parallel process that checks the results. Meaning, a second Enactor running in parallel. And we're back to the original problem of dealing with multiple Enactors acting concurrently.

Idea 2: Continuously Validate the Plan

A key point of the problem DynamoDB experienced was that the Enactor that was enacting the old plan only checked the validity (or rather staleness) of the plan once. If it had checked again, it would have realized the plan was stale and no longer valid, and would have stopped applying it.

The problem with this is how fast and how often these updates are expected to occur. Remember, we're thinking at a scale that completely baffles every system you and I have ever built. The DynamoDB us-east-1 endpoint gets more traffic in a single hour than any system I've ever built has seen across its entire lifetime.

This change might actually work. We could even have some sort of timeout, or validity timestamp for the plan. Honestly, I don't know what the problem with this idea would be, but I'm guessing there's something. I'll try to ask someone at re:Invent, and update you on the answer.
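Here's roughly what I have in mind, as a hedged Python sketch. The `latest_version` callable is hypothetical: it stands for "ask whoever knows what the newest plan is", which in the real system would be a far more expensive question than a function call. `StalePlanError` and `enact_with_revalidation` are names I made up.

```python
class StalePlanError(Exception):
    """Raised when a newer plan exists than the one being applied."""


def enact_with_revalidation(records, endpoints, plan_version, latest_version):
    """Write records one by one, re-checking staleness before each write.

    latest_version is a hypothetical callable returning the newest plan
    version the Planner has produced so far.
    """
    for endpoint in endpoints:
        newest = latest_version()
        if plan_version < newest:
            raise StalePlanError(
                f"plan {plan_version} superseded by plan {newest}")
        records[endpoint] = plan_version


# The Planner publishes plan 5 while plan 1 is halfway applied.
observed = iter([1, 1, 5])
records = {}
try:
    enact_with_revalidation(records, ["lb-a", "lb-b", "lb-c"], 1,
                            lambda: next(observed))
except StalePlanError as err:
    print("stopped:", err)
print(records)
```

The slow Enactor stops after two writes instead of overwriting everything. Note that this narrows the window rather than closing it: a newer plan can still land between the check and the write that follows it, and every check is an extra round trip.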

Idea 3: Make an Inconsistent State a Valid State

That sounds self-contradictory, and perhaps it is. But ultimately, this idea proposes not trying to prevent this failure mode, but rather accepting that this or something like it will happen again, and automating the remediation.

The way I see it, the Enactor that ran right after all DNS records had been cleaned up did know what the desired state was: That of the plan it had received from the DNS Planner. It didn't know how to deal with the current state of empty DNS records, which in its “mind” didn't make sense. But it knew what the desired state was. It could have just applied that.

Or it could have triggered an alarm notifying that it could or would not update the DNS records, which wouldn't have automatically solved this, but would have saved the DynamoDB team some time trying to find the root cause.
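Both options fit in one small function. Again, this is a sketch under my own assumptions, not how the Enactor works: `reconcile` is a made-up name, records and plans are plain dictionaries of load balancer name to weight, and `alarm` is any callable that takes a message (here, a list's `append`).

```python
def reconcile(current: dict, desired: dict, alarm) -> dict:
    """Return the records that should exist after this Enactor run."""
    if not desired:
        # Never wipe the endpoint on purpose; tell a human instead.
        alarm("refusing to apply an empty plan; records left unchanged")
        return dict(current)
    if not current:
        # Unexpected, but recoverable: we still know where we want to be.
        alarm("found no DNS records; rebuilding from the latest plan")
    return dict(desired)


alarms = []
plan = {"lb-a": 50, "lb-b": 50}
print(reconcile({}, plan, alarms.append))
print(alarms)
```

The point is that the function only cares about the desired state. Whatever it finds, empty records included, is just a starting point, and anything surprising gets reported rather than silently blocking the update.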

This is what I would do, if I worked at AWS. I'll accept some AWS credits for the idea 😀.

Closing Thoughts

If you were expecting some sort of multi-region advice, you can read my Disaster Recovery article. This isn't about what you and I can do. And it's not really about what AWS should do; like I said, we do not have the right experience to work on these systems.

This is about using our heads and trying to learn our own lessons from this. And honestly, about having fun. The outage wasn't fun, but learning about cool tech stuff certainly is.