Batch computing is the way to go when you don't need results immediately. Instead of running tasks interactively, you submit a batch of jobs to be executed independently, often on a schedule or as resources become available. That way, you don't need to rush to provision resources the moment a job comes in. Instead, you can keep a set of long-running resources already configured, and arrange your jobs to run there as capacity frees up. That's much simpler to manage since you can plan ahead, there's less overhead from provisioning resources on demand, and in AWS you can take advantage of cheaper purchase options like Reserved Instances or Savings Plans.

Of course, that planning ahead, arranging jobs, and the async communication that it entails don't happen on their own. Lucky for us, AWS has a managed service to handle all of that: AWS Batch.

In this article, we'll dive deep into AWS Batch. We'll start by understanding the basics, and then move on to strategies for improving cost and performance, designing efficient job workflows, monitoring and debugging jobs, and implementing security best practices. I'll also tell you about an architecture I designed using AWS Batch.

Understanding AWS Batch Components

AWS Batch is a fully managed service that lets you queue up and run async jobs in batches. It handles defining jobs, configuring compute environments, and executing those jobs. At a high level, AWS Batch consists of four key components: Compute Environments, Job Queues, Job Definitions, and Jobs.

Job definitions are a template for specifying job parameters and configurations. Job queues manage the scheduling and execution of jobs. Compute environments provide the infrastructure and resources where jobs are actually executed, like EC2 instances. Jobs are the actual things being run.

When you submit a job to AWS Batch, it gets placed in the Job Queue you chose. AWS Batch then finds capacity in an associated Compute Environment that meets the job's resource requirements, launches a Docker container based on the Job Definition, and executes the specified command. The job runs independently and can write output to Amazon S3 or other storage services.

Compute Environments

Let's take a closer look at Compute Environments, since they're where the actual computing happens. They can be either Managed or Unmanaged, depending on how much work you want to do yourself. (Kidding! Who would want to work more? Oh, right, people not using AWS 🤣).

With a Managed Compute Environment, AWS Batch manages the capacity and instance types of the compute resources. You can use a combination of Amazon EC2 On-Demand Instances and Amazon EC2 Spot Instances, and AWS Batch will create and destroy instances for you. Kind of like an auto scaling group (in fact, it uses exactly that behind the scenes), but instead of CloudWatch sending the signals to scale, Batch does it.

Alternatively, you can use AWS Fargate and Fargate Spot capacity in your managed compute environment. When you do that, Fargate scales automatically to run your containers, so you don't need to care about instances.

As a general rule, use AWS Fargate if:

You need to start jobs in less than 30 seconds.

Your jobs require no GPU, 16 vCPUs or less, and 120 GiB of memory or less.

You prefer a simpler configuration, or are a first-time user.

Unmanaged Compute Environments, on the other hand, let you take full control of the instances. You're responsible for launching and configuring the EC2 instances, installing the necessary software, and registering them with AWS Batch. That's more work! But sometimes (especially in highly regulated environments) you really need the fine-grained control.

When creating a Compute Environment, you also need to specify a compute resource allocation strategy. This determines which instance types are provisioned as job demand changes. The two basic options are BEST_FIT, which picks the instance type that best fits the vCPU and memory requirements of the jobs at the lowest cost and waits if that type isn't available, and BEST_FIT_PROGRESSIVE, which prefers instance types with a lower cost per vCPU but moves on to other instance types that are large enough when the preferred ones aren't available.
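To make the settings above concrete, here's a minimal sketch of the request you'd send to create a managed EC2 Compute Environment. It only builds the keyword arguments for boto3's `create_compute_environment` call. The function name, the default of 64 max vCPUs, and every subnet, security group and role value you pass in are assumptions for illustration.

```python
def managed_ec2_environment(name, subnets, security_group_ids, instance_role_arn,
                            max_vcpus=64, allocation_strategy="BEST_FIT_PROGRESSIVE"):
    """Build keyword arguments for the Batch CreateComputeEnvironment call."""
    return {
        "computeEnvironmentName": name,
        "type": "MANAGED",            # Batch creates and terminates instances for us
        "state": "ENABLED",
        "computeResources": {
            "type": "EC2",            # On-Demand instances
            "allocationStrategy": allocation_strategy,
            "minvCpus": 0,            # scale down to zero when the queue is empty
            "maxvCpus": max_vcpus,    # hard ceiling on what this environment can grow to
            "instanceTypes": ["optimal"],  # let Batch choose among the common instance families
            "subnets": list(subnets),
            "securityGroupIds": list(security_group_ids),
            "instanceRole": instance_role_arn,
        },
    }

# Assumed usage, with boto3 installed and AWS credentials configured:
#   import boto3
#   boto3.client("batch").create_compute_environment(**managed_ec2_environment(...))
```

Keeping the request as a plain dictionary makes it easy to review or unit test before anything gets created in your account.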

Job Queues

Next up are Job Queues, which are where you submit jobs that you want to be run. Each Job Queue is mapped to one or more Compute Environments, and jobs are dispatched to instances based on their priority and resource requirements.

When you create a Job Queue, you specify a priority value between 0 and 1000, with higher values getting preference. The maximum vCPUs limit that keeps jobs from consuming too many resources isn't set on the queue, though: it's set on the Compute Environments that the queue is mapped to.

One key aspect of Job Queues is that they support priority-based scheduling. When several queues share a Compute Environment, jobs in the queue with the higher priority value are dispatched first, even if there are older jobs in lower-priority queues. Within a single queue, jobs run in roughly the order they were submitted. This allows you to ensure that critical jobs are executed promptly, while less important jobs can wait their turn.
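Here's a rough sketch of two queues that share one Compute Environment, where the higher priority value gives the critical queue preference. The helper builds the keyword arguments for boto3's `create_job_queue` call. The queue names, priority values, and the Compute Environment ARN are made up for the example.

```python
def job_queue_request(name, priority, compute_environment_arns):
    """Build keyword arguments for the Batch CreateJobQueue call."""
    return {
        "jobQueueName": name,
        "state": "ENABLED",
        "priority": priority,  # higher values get preference
        "computeEnvironmentOrder": [
            # Batch tries the Compute Environments in ascending "order"
            {"order": position, "computeEnvironment": arn}
            for position, arn in enumerate(compute_environment_arns, start=1)
        ],
    }

# Hypothetical ARN, for illustration only
SHARED_CE = "arn:aws:batch:us-east-1:123456789012:compute-environment/demo"

critical_queue = job_queue_request("critical", 100, [SHARED_CE])
background_queue = job_queue_request("background", 1, [SHARED_CE])

# Assumed usage: boto3.client("batch").create_job_queue(**critical_queue)
```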

Job Definitions

Job Definitions are templates for batch jobs, very similar to how Amazon ECS Task Definitions are templates for ECS Tasks. In the Job Definition you specify the Docker image to use, the command to run, the vCPU and memory requirements, and any environment variables or mount points needed.

When creating a Job Definition, you have a few options for specifying the Docker image. You can use an image from a public registry like Docker Hub, or you can use a private image stored in Amazon ECR. If you're using a private image, you'll need to make sure that your Compute Environment has the necessary permissions to pull from ECR.

In addition to the basic job parameters, Job Definitions also support environment variables and parameter substitution. This allows you to create generic Job Definitions that can be customized at runtime based on the specific job parameters.

For example, let's say you have a batch job that processes files from an S3 bucket. Instead of hardcoding the S3 bucket name in the Job Definition, you can have your code read it from an environment variable like $S3_BUCKET, or put a placeholder like Ref::bucket in the command. Then when submitting the job, you can specify the actual bucket name as an environment variable override or as a runtime parameter. Neat, huh?
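A minimal sketch of that idea, assuming a hypothetical `process.py` script inside the image: the Job Definition declares a default for the `S3_BUCKET` environment variable and a `Ref::input_key` placeholder in the command, and each submission fills in the real values. The two helpers build keyword arguments for boto3's `register_job_definition` and `submit_job` calls. The job definition name, script name and resource sizes are invented for the example.

```python
def file_processor_definition(image):
    """Build keyword arguments for the Batch RegisterJobDefinition call."""
    return {
        "jobDefinitionName": "file-processor",
        "type": "container",
        # Default for the Ref::input_key placeholder used in the command below
        "parameters": {"input_key": "default.csv"},
        "containerProperties": {
            "image": image,
            "command": ["python", "process.py", "--key", "Ref::input_key"],
            "environment": [{"name": "S3_BUCKET", "value": "placeholder-bucket"}],
            "resourceRequirements": [
                {"type": "VCPU", "value": "1"},
                {"type": "MEMORY", "value": "2048"},  # MiB
            ],
        },
    }

def file_processor_submission(job_name, queue, bucket, input_key):
    """Build keyword arguments for the Batch SubmitJob call."""
    return {
        "jobName": job_name,
        "jobQueue": queue,
        "jobDefinition": "file-processor",
        # Replaces Ref::input_key in the command for this job only
        "parameters": {"input_key": input_key},
        # Replaces the S3_BUCKET value from the Job Definition for this job only
        "containerOverrides": {
            "environment": [{"name": "S3_BUCKET", "value": bucket}],
        },
    }
```

The same Job Definition can then serve any bucket and any file, and you only register a new revision when the image or the resource requirements change.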

Jobs

Finally, we have the actual Jobs themselves. A job represents a single unit of work, like processing a file or running a simulation. When you submit a job to AWS Batch, you specify the Job Definition to use, any runtime parameters or overrides, and the Job Queue to submit to.

Jobs can be submitted either individually or as part of an array. Array jobs allow you to easily submit multiple related jobs that differ by a single parameter, such as an input file or a configuration value.
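Here's a small sketch of how an array job usually looks from both sides. On the submitting side, you add `arrayProperties` to the `submit_job` request. Inside the container, each child job reads the `AWS_BATCH_JOB_ARRAY_INDEX` environment variable that Batch sets and uses it to pick its own input. The function names and the list of inputs are assumptions for illustration.

```python
import os

def array_submission(job_name, queue, job_definition, size):
    """Build keyword arguments for a Batch SubmitJob call that creates an array job."""
    if not 2 <= size <= 10000:
        raise ValueError("array size must be between 2 and 10,000")
    return {
        "jobName": job_name,
        "jobQueue": queue,
        "jobDefinition": job_definition,
        "arrayProperties": {"size": size},  # one child job per index 0..size-1
    }

def pick_input(inputs, environ=os.environ):
    """Inside the container: choose this child's input from its array index."""
    index = int(environ.get("AWS_BATCH_JOB_ARRAY_INDEX", "0"))
    return inputs[index]
```

For example, submitting with `size=3` and a list of three file keys means child 0 processes the first key, child 1 the second, and child 2 the third.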

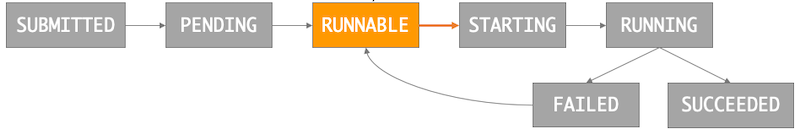

As jobs are executed, they transition through a series of states. The key states to be aware of are:

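SUBMITTED: The job has been submitted to the queue, but the scheduler hasn't evaluated it yet.

PENDING: The job is in the queue but can't run yet because it depends on another job or resource. Once its dependencies are satisfied, it moves to RUNNABLE.

RUNNABLE: The job has no outstanding dependencies and is ready to be scheduled. It starts as soon as the Compute Environment has enough resources available.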

STARTING: The job is starting up and the Docker container is being launched.

RUNNING: The job is actively running and executing the specified command.

SUCCEEDED/FAILED: The job has completed, either successfully or with a failure status. If it failed and has retry attempts left, it's retried, i.e. moved back to RUNNABLE.

States and transitions for a Batch job
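If you want to follow a job through those states from code, a simple polling loop is enough. This is a sketch, not a production waiter: `client` is assumed to be a boto3 Batch client (or anything with a compatible `describe_jobs` method), and the function name and the 30-second default are my own choices.

```python
import time

TERMINAL_STATES = {"SUCCEEDED", "FAILED"}

def wait_for_job(client, job_id, poll_seconds=30, sleep=time.sleep):
    """Poll DescribeJobs until the job reaches SUCCEEDED or FAILED, then return that state."""
    while True:
        job = client.describe_jobs(jobs=[job_id])["jobs"][0]
        if job["status"] in TERMINAL_STATES:
            return job["status"]
        # Still SUBMITTED, PENDING, RUNNABLE, STARTING or RUNNING: check again later
        sleep(poll_seconds)

# Assumed usage:
#   import boto3
#   final_state = wait_for_job(boto3.client("batch"), "your-job-id")
```

The `sleep` argument is only there so the loop can be tested without actually waiting.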

Improving Cost and Performance in AWS Batch

Now that we've covered the basics of AWS Batch components, let's talk about optimization. Here are a few things that you need to keep in mind.

Selecting Instance Types

Selecting the right instance types and sizes is one of the most important things for optimizing anything that uses instances, I think. But of course that's much easier said than done! Here are some factors to consider for the size of your instances:

vCPU and memory requirements: Make sure to choose instance types that have enough vCPUs and memory to support your jobs. Jobs run in containers, so multiple jobs can be fit into an instance. But if you use small instances, big jobs won't fit into any instance. You can always increase the size of instances later though, so I'd suggest you start with something small or medium and grow from there.

Instance family: EC2 instances come in several families optimized for different use cases, like compute, memory, storage, and GPU processing. Choose the family that best matches your workload requirements. Always choose the latest generation!

Network performance: Some instance types have higher network bandwidth than others. If your jobs involve transferring large amounts of data, consider using an instance type with enhanced networking.

Spot or On-Demand: Spot Instances can be a great way to save money on batch workloads, but they come with the risk of interruption. If your jobs can tolerate occasional interruptions and retries, Spot Instances can offer significant cost savings over On-Demand.

Pricing: Of course, you'll want to pay as little as possible! Look for instance types that offer the best balance of price and performance for your specific workloads.

Using EC2 Spot Instances

EC2 Spot Instances are typically recommended for non-critical workloads. Guess what, your batch processing isn't critical! Yeah, I know it's reeeeally important. But it's not real-time, and it's not urgent. No spot instances available? That's alright, the batch job can wait! That's the very definition of batch processing.

Spot Instances are spare EC2 capacity that you can get at a discounted price, with the catch that they can be interrupted by AWS with two minutes of notice, and that sometimes you can't get some instance types or families (because there are no spares at the moment).

As a rule of thumb, use Amazon EC2 Spot if:

Your job durations range from a few minutes to a few dozen minutes.

The overall workload can tolerate potential interruptions and job rescheduling.

Long-running jobs can be restarted from a checkpoint if interrupted.

A couple of tips for you regarding Spot instances on AWS Batch:

Use a Spot allocation strategy: When you create a Compute Environment, you can specify an allocation strategy of either BEST_FIT or BEST_FIT_PROGRESSIVE, like we saw above. When you add Spot instances to that Compute Environment, you can use two additional allocation strategies. SPOT_CAPACITY_OPTIMIZED minimizes interruptions by selecting instance types that are large enough to meet the requirements of the jobs in the queue and are less likely to be interrupted. SPOT_PRICE_CAPACITY_OPTIMIZED does the same, while also trying to select instances with the lowest possible price.

Diversify across instance types and families: To increase the chances of finding available capacity and reducing costs, don't just try to get the exact very best match instance family for your Spot instances. Some other instance families and types might be slightly less cost-effective, but it's cheaper to use those close-to-optimal instances as Spot instances than to have to default to On-Demand instances if your preferred family and type isn't available in Spot.

Set a maximum Spot price: Spot instance prices change, depending on how much spare capacity AWS has. You can set the maximum price that you're willing to pay for Spot instances, expressed as a percentage of the On-Demand price for that instance type. If the Spot price exceeds this threshold, AWS Batch will not launch new instances.

Handle Spot interruptions gracefully: Because Spot Instances can be interrupted at any given moment, it's important to design your jobs to handle interruptions gracefully. This may involve checkpointing progress, retrying failed jobs, or using a distributed workload that can tolerate node failures.

Use a mix of On-Demand and Spot Instances: For critical jobs that can't tolerate interruptions you should use On-Demand Instances. You can combine them with Spot instances, so that high-priority jobs always have available capacity in On-Demand, while less critical jobs use Spot and save you money.
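Several of these tips end up as fields in the Compute Environment definition. Here's a sketch of a Spot Compute Environment request for boto3's `create_compute_environment`, with a Spot allocation strategy, a diversified list of instance families, and a price cap. The instance families, the 60% cap and the 256 vCPU limit are arbitrary values picked for the example, not recommendations.

```python
def spot_environment(name, subnets, security_group_ids, instance_role_arn,
                     instance_types=("m5", "m5a", "m6i", "c5", "c6i", "r5"),
                     bid_percentage=60, max_vcpus=256):
    """Build keyword arguments for a Spot-based Batch CreateComputeEnvironment call."""
    return {
        "computeEnvironmentName": name,
        "type": "MANAGED",
        "state": "ENABLED",
        "computeResources": {
            "type": "SPOT",
            "allocationStrategy": "SPOT_PRICE_CAPACITY_OPTIMIZED",
            # Never pay more than this percentage of the On-Demand price
            "bidPercentage": bid_percentage,
            "minvCpus": 0,
            "maxvCpus": max_vcpus,
            # Several families, so Batch has more Spot pools to choose from
            "instanceTypes": list(instance_types),
            "subnets": list(subnets),
            "securityGroupIds": list(security_group_ids),
            "instanceRole": instance_role_arn,
        },
    }
```

To mix On-Demand and Spot, one option is to map your high-priority queue to an On-Demand environment and your lower-priority queue to an environment like this one.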

Right-Sizing Instances

Wait, isn't this the same as selecting instance types? Well, yes, a bit. Less about instance family and generation, more about size (you know, large, xlarge, etc). But right-sizing is also a continuous effort, so that's why it deserves its own title. Yeah, totally not about SEO.

So, you already picked your instance type and size. But it was an educated guess, right? It's not like you're doing some really serious CPU and memory profiling before running a job. Then, once you know more about the jobs you're running, and especially once you have some real-world data on how those jobs run, you can review that educated guess and right-size those instances. Here's what you want to look into, once you're running some jobs:

vCPU utilization: Are your jobs consistently using all of the allocated vCPUs, or is there a lot of idle time? If utilization is low, you may be able to use a smaller instance type.

Memory utilization: Are jobs frequently hitting memory limits, causing out-of-memory errors or performance issues? If so, you may need to use a larger instance type or adjust the job's memory requirements.

Job duration: How long are jobs taking to run on average? If most jobs are completing very quickly (e.g. less than a minute), you may be able to use smaller, less expensive instances.

To get these utilization metrics, you can use tools like Amazon CloudWatch, which I'll cover in the monitoring section. The point about right-sizing is that it's a continuous effort. The right size will change, and your selected size needs to change accordingly.
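As a tiny illustration of turning real-world data into a right-sizing decision, here's one possible heuristic for a job's memory requirement: take the highest peak you've observed, add some headroom, and round up to a convenient step. The 20% headroom and the 256 MiB step are assumptions I made up for this sketch, so tune them to your own workloads.

```python
import math

def suggest_memory_mib(peak_samples_mib, headroom=0.2, step_mib=256):
    """Suggest a memory reservation (MiB) from observed per-job memory peaks."""
    if not peak_samples_mib:
        raise ValueError("need at least one observed peak")
    # Highest observed peak plus a safety margin
    target = max(peak_samples_mib) * (1 + headroom)
    # Round up to the next multiple of step_mib
    return math.ceil(target / step_mib) * step_mib
```

For example, peaks of 900, 1100 and 1000 MiB give a target of 1320 MiB, which rounds up to 1536 MiB. If your Job Definition currently reserves 4096 MiB for that job, that's a sign you can fit more jobs per instance or move to a smaller one.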

Designing Efficient Batch Job Workflows

In addition to optimizing the underlying compute resources, you can also design your batch job workflows to be more efficient and scalable. Two key strategies here are parallelizing jobs and implementing job dependencies and sequencing.

Parallelizing Jobs

Many batch computing workloads can be parallelized, that is, broken up into smaller, independent pieces that can be executed simultaneously. This can dramatically improve job performance and reduce overall runtime. But it's not always possible, and even when it is, it might require some changes.

There are a few different ways to run parallelized batch jobs on AWS Batch:

Array Jobs: Array jobs allow you to submit multiple related jobs that differ by a single parameter. This is an easy way to parallelize workloads that involve processing many similar files or datasets. We covered these in the first section, remember?

Parallel Containers: You can also run multiple Docker containers within a single job, either on the same instance or spread across a cluster of instances. Honestly, this isn't recommended for most stuff. The general advice is one thing -> one container. But some parallel processing frameworks like Apache Spark or Hadoop are designed to work like this, and those are the exceptions to the advice.

AWS Batch Job Queues: By using multiple Job Queues with different priorities, you can parallelize jobs across queues while still maintaining relative priorities. For example, you might have a high-priority queue for critical jobs and a lower-priority queue for background tasks.

And I can't leave you to parallelize jobs without giving you a best practices list:

Make sure that the jobs you want to parallelize are actually independent and can run in parallel without conflicts or race conditions.

Understand external dependencies (like a database that your jobs read/write to) and make sure you aren't creating race conditions or deadlocks when trying to access these resources. If those external dependencies scale, understand how they scale! For example, here's how DynamoDB scales.

Don't overload instances by running too many parallel tasks. I mean, things scale, but it's not always as 100% seamless as the sales brochure says.

Use appropriate error handling and retry logic to handle failures in individual parallel tasks.

Monitor parallel jobs using CloudWatch or other tools to identify any performance bottlenecks or issues.

Job Dependencies and Sequencing

In some cases, batch jobs may have dependencies on each other, where one job must complete successfully before another can start. For example, you might have a data processing pipeline where files must be downloaded and pre-processed before they can be analyzed.

AWS Batch supports job dependencies using the dependsOn parameter when submitting jobs. You can specify one or more job IDs that must complete successfully before the next job can start.
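Here's a minimal sketch of a sequential pipeline built with `dependsOn`: each job is submitted with a dependency on the job submitted before it. `client` is assumed to be a boto3 Batch client, and the function name and the stage names in the usage comment are hypothetical.

```python
def submit_pipeline(client, queue, stages):
    """Submit jobs so that each one starts only after the previous one succeeds.

    stages is a list of (job_name, job_definition) tuples, in execution order.
    Returns the job IDs in the same order.
    """
    job_ids = []
    previous_id = None
    for job_name, job_definition in stages:
        request = {
            "jobName": job_name,
            "jobQueue": queue,
            "jobDefinition": job_definition,
        }
        if previous_id is not None:
            # This job stays PENDING until the previous job succeeds
            request["dependsOn"] = [{"jobId": previous_id}]
        previous_id = client.submit_job(**request)["jobId"]
        job_ids.append(previous_id)
    return job_ids

# Assumed usage:
#   import boto3
#   submit_pipeline(boto3.client("batch"), "background",
#                   [("download", "download-def"), ("preprocess", "preprocess-def"),
#                    ("analyze", "analyze-def")])
```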

You can also use job sequencing to implement more complex workflows and pipelines. One common pattern is to create a "control" job that submits other jobs in a specific order based on dependencies. The control job can monitor the status of its child jobs and take action based on their results, such as retrying failed jobs or continuing to the next stage of the pipeline.

Some other job dependency and sequencing patterns include:

Fan-out/fan-in: A single job fans out to multiple parallel child jobs, which then fan back in to a final aggregation or completion step.

Conditional execution: A job only executes if certain conditions are met, such as the success or failure of previous jobs.

Polling for completion: A job periodically checks the status of its dependencies and only starts when they are complete.
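The fan-out/fan-in pattern, for instance, can be sketched by combining an array job with a dependency: the workers run as children of one array job, and the aggregation job depends on the array job's ID, so it only starts once all the children have succeeded. Again, `client` is assumed to be a boto3 Batch client, and the job names are made up.

```python
def submit_fan_out_fan_in(client, queue, worker_definition, aggregator_definition, shard_count):
    """Submit an array of worker jobs plus one aggregation job that waits for all of them."""
    # Fan out: one array job with shard_count children
    workers = client.submit_job(
        jobName="process-shards",
        jobQueue=queue,
        jobDefinition=worker_definition,
        arrayProperties={"size": shard_count},
    )
    # Fan in: runs only after every child of the array job has succeeded
    aggregator = client.submit_job(
        jobName="aggregate-results",
        jobQueue=queue,
        jobDefinition=aggregator_definition,
        dependsOn=[{"jobId": workers["jobId"]}],
    )
    return workers["jobId"], aggregator["jobId"]
```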

AWS Batch does support all of this. But honestly, if you're at the point where you're considering all of this stuff, you probably want to use Step Functions. You can have one state in a Step Functions state machine be a job in AWS Batch, so the processing remains the same, but you use Step Functions to control all the complex sequencing logic.
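To give you an idea of what that looks like, here's a sketch of a state machine definition with a single Batch job in it, built as a Python dictionary and serialized to the JSON that Step Functions expects. It uses the `batch:submitJob.sync` service integration, which makes the state wait until the job finishes. The state names, job name and retry numbers are assumptions for the example.

```python
import json

def batch_state_machine(job_queue_arn, job_definition_arn):
    """Build a Step Functions definition that runs one Batch job and waits for it."""
    return {
        "Comment": "Run a Batch job and let Step Functions handle the sequencing",
        "StartAt": "RunBatchJob",
        "States": {
            "RunBatchJob": {
                "Type": "Task",
                # .sync makes the state wait for the Batch job to finish
                "Resource": "arn:aws:states:::batch:submitJob.sync",
                "Parameters": {
                    "JobName": "pipeline-step",
                    "JobQueue": job_queue_arn,
                    "JobDefinition": job_definition_arn,
                },
                "Retry": [{
                    "ErrorEquals": ["States.TaskFailed"],
                    "IntervalSeconds": 60,
                    "MaxAttempts": 2,
                    "BackoffRate": 2.0,
                }],
                "Catch": [{"ErrorEquals": ["States.ALL"], "Next": "JobFailed"}],
                "Next": "Done",
            },
            "JobFailed": {"Type": "Fail", "Error": "BatchJobFailed",
                          "Cause": "The Batch job failed after all retries"},
            "Done": {"Type": "Succeed"},
        },
    }

def batch_state_machine_json(job_queue_arn, job_definition_arn):
    """Serialize the definition to the JSON string Step Functions takes."""
    return json.dumps(batch_state_machine(job_queue_arn, job_definition_arn), indent=2)
```

From there, conditional execution becomes a Choice state and fan-out becomes a Map or Parallel state, without any of that logic living inside your jobs.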

Monitoring and Optimizing Batch Job Performance

Once your jobs are up and running, it's important to monitor their performance and identify opportunities for optimization. Of course, this falls into the responsibilities of Amazon CloudWatch Metrics and Logs. CloudWatch is not a difficult service, but let's see what kind of things you want to monitor.

Key Metrics and Logs

Some of the key metrics to monitor for AWS Batch jobs include:

Job status: The current status of each job (SUBMITTED, PENDING, RUNNABLE, etc.), which can help identify jobs that are stuck or failing.

Job duration: The total elapsed time for each job, which can help identify performance bottlenecks or inefficiencies.

vCPU and memory utilization: The actual usage of vCPU and memory resources by each job, which can help with right-sizing instances, like we saw a couple of sections earlier.

Queue metrics: The number of jobs in each queue, the number of jobs completed, and the average time spent in the queue, which can help identify capacity issues or inefficiencies.

In addition to metrics, AWS Batch also generates detailed logs for each job, including the job's stdout and stderr output, as well as system logs. Well, they're detailed if you bother to log stuff in your code, I guess. But Batch does take care of logging everything that happens around your code, and sending all logs to CloudWatch Logs.

You can view those logs from the AWS Batch console or CLI, or view them directly in CloudWatch Logs.
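As a starting point, here's a sketch that pulls two of those things out of a job description returned by `describe_jobs`: how long the job waited and ran, and its log lines. It assumes the job has finished (so `startedAt` and `stoppedAt` are present), that it logs to the default `/aws/batch/job` log group, and that `logs_client` is a boto3 CloudWatch Logs client. The function names are my own.

```python
def job_timings(job):
    """Return (seconds waiting before start, seconds running) for a finished job.

    Batch reports createdAt, startedAt and stoppedAt as milliseconds since the epoch.
    """
    created_ms, started_ms, stopped_ms = job["createdAt"], job["startedAt"], job["stoppedAt"]
    return (started_ms - created_ms) / 1000, (stopped_ms - started_ms) / 1000

def job_log_lines(logs_client, job, log_group="/aws/batch/job"):
    """Fetch the first page of log messages for a job's container."""
    stream = job["container"]["logStreamName"]
    response = logs_client.get_log_events(
        logGroupName=log_group,
        logStreamName=stream,
        startFromHead=True,  # oldest messages first
    )
    # Only one page is read here; long logs need to follow nextForwardToken
    return [event["message"] for event in response["events"]]

# Assumed usage:
#   import boto3
#   job = boto3.client("batch").describe_jobs(jobs=["your-job-id"])["jobs"][0]
#   wait_seconds, run_seconds = job_timings(job)
#   lines = job_log_lines(boto3.client("logs"), job)
```

A long wait with a short run usually points at capacity, while a short wait with a long run points at the job itself.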

Performance Bottlenecks and Optimization

The whole point of monitoring is identifying performance bottlenecks and optimization opportunities. Look for this stuff:

Resource contention: If multiple jobs are competing for the same resources (e.g. disk I/O or network bandwidth), that can cause performance issues. Adjust job scheduling or use dedicated resources for high-priority jobs to fix this.

Inefficient algorithms or code: If jobs are taking longer than expected to run, it may be because your code is bad. Yeah, sorry, but it happens to all of us. But hey, at least with monitoring you can identify those problems!

Data transfer bottlenecks: If a job needs to transfer a lot of data to or from S3 or other storage services, it can cause a performance bottleneck. Consider using techniques like parallel S3 downloads, data compression, or caching to reduce transfer times. Also, please check that you are using VPC Endpoints.

Instance type mismatches: If jobs are consistently hitting resource limits or underutilizing instances, it may be a sign that the instance type is not the best one for that job. For example, if you're hitting memory limits and still have CPU to spare, you might want to change your instance family to one that's optimized for memory.

Again, optimization is an ongoing process, not a one-time task. You don't need to do everything again every day, but at least check frequently.

Best Practices for AWS Batch Architecture and Security

As usual, security comes in last! 😱. Let's cover some best practices for architecting and securing your AWS Batch workloads.

High Availability, Scalability and Resilience

I know, this article has been full of lists. Well, here's another one. Do this:

Understand what High Availability, Scalability and Resilience are.

Decide whether those characteristics matter to you! Don't just do them because a checklist says so.

Use multiple Compute Environments and Job Queues to distribute workloads and handle different job priorities or requirements.

Set the minimum and maximum vCPUs of your managed Compute Environments so that Batch can scale them automatically with the number of jobs waiting in the queue.

Use Spot Instances to optimize costs for non-critical jobs.

Use multiple Availability Zones to spread the risk of interruptions and tap into different instance pools.

Reduce job runtime or implement checkpointing to minimize the impact of interruptions on long-running jobs.

Use automated and custom retry strategies to handle interruptions and ensure jobs are completed successfully.

Implement appropriate error handling and retry logic to handle transient failures or instance interruptions. The basic version of this means at least logging when a job fails, and probably notifying. The more advanced version can grow as complex as needed, but you don't always need to go beyond just notifying.

If things do get a lot more complex, use AWS Step Functions to orchestrate complex job workflows and handle failures gracefully.

If your jobs run many steps, consider splitting them into several sequenced jobs and using Amazon S3 or Amazon DynamoDB as an intermediate storage.
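For the automated retries, a common setup is to retry only when the host was reclaimed or terminated, and to fail fast on everything else, since retrying a bug in your code just costs money. Here's a sketch of such a retry strategy, which you can pass as `retryStrategy` when registering a Job Definition or submitting a job. The function name and the default of 3 attempts are assumptions for the example.

```python
def spot_friendly_retry_strategy(attempts=3):
    """Build a Batch retryStrategy that retries host-level failures only."""
    if not 1 <= attempts <= 10:
        raise ValueError("Batch allows between 1 and 10 attempts")
    return {
        "attempts": attempts,
        "evaluateOnExit": [
            # Status reasons starting with "Host EC2" mean the instance went away
            # (for example, a Spot interruption), so the job is worth retrying
            {"onStatusReason": "Host EC2*", "action": "RETRY"},
            # Anything else is treated as a real failure: stop retrying
            {"onReason": "*", "action": "EXIT"},
        ],
    }

# Assumed usage:
#   boto3.client("batch").submit_job(..., retryStrategy=spot_friendly_retry_strategy())
```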

Security Best Practices

Alright, final list of this article. Promise! This one's different though, I recommend you do all of these.

Use IAM roles and policies to control access to AWS Batch resources and limit permissions. As always, use the least privilege principle.

Encrypt data at rest using AWS Key Management Service (KMS), and encrypt data in transit using TLS.

Use private subnets and security groups to isolate Compute Environments and control network access.

Implement secure configuration practices for Docker images and containers, like only using trusted base container images, minimizing dependencies, and regularly scanning for vulnerabilities.

Use AWS Secrets Manager or AWS Systems Manager Parameter Store to store and manage secrets like database credentials or API keys. Please don't commit them to your repo!!
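For that last point, Batch can inject a secret into the container as an environment variable at start time, so the value never appears in your repo or in the Job Definition itself. Here's a sketch of the `containerProperties` for that. The `DB_PASSWORD` variable name, the `worker.py` command and the ARNs you pass in are hypothetical, and the execution role needs permission to read the secret.

```python
def container_properties_with_secret(image, execution_role_arn, secret_arn):
    """Build containerProperties that expose a stored secret as an environment variable."""
    return {
        "image": image,
        "command": ["python", "worker.py"],
        # The execution role is what Batch uses to fetch the secret on your behalf
        "executionRoleArn": execution_role_arn,
        "secrets": [
            # valueFrom is the ARN of a Secrets Manager secret or an SSM parameter
            {"name": "DB_PASSWORD", "valueFrom": secret_arn},
        ],
        "resourceRequirements": [
            {"type": "VCPU", "value": "1"},
            {"type": "MEMORY", "value": "2048"},  # MiB
        ],
    }

# Assumed usage:
#   boto3.client("batch").register_job_definition(
#       jobDefinitionName="worker", type="container",
#       containerProperties=container_properties_with_secret(...))
```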

Real-life Example: Using AWS Batch to Security Scan Files

Here's a real thing I did using AWS Batch, for one of my consulting clients. I can't give you the full details (I only got permission to share this anonymized), but the goal of the system was to do a very specific security scan of a specific type of files. The File Scanner was already provided by my client's client as a Docker image. All my client had to do was use it to generate a JSON result, and then call GPT-3.5 to analyze that. Here's what the final result looks like:

Architecture using AWS Batch

The logic is rather simple:

Upload file to a temporary storage

Run File Scanner in a container and save result

Call GPT-3.5 to parse that and generate another result

Store that other result and serve it to the users as needed

The challenge that led us to use Batch is that File Scanner could take from 10 minutes to a couple of hours to scan (the files ranged from 100 MB to 2 GB). We could have done it inside a long-running task, but we wanted to keep track of failed jobs and be able to retry them easily (which we solved with automated retries).

The part that comes after Batch is also interesting. The incorrectly named Enqueue lambda on the bottom left of the diagram was already written by the time they called me. So was the API, running in a single EC2 instance. I marked these as areas for improvement and told them how it could be simpler. But I also acknowledged that with that already implemented the path of least resistance was to use what they had, so that's how I designed it.

Conclusion

Alright, we've covered a lot of things. We started with the key components of AWS Batch, including Compute Environments, Job Queues, Job Definitions, and Jobs. Then we talked about strategies for optimizing cost and performance. We talked about designing efficient batch job workflows by parallelizing jobs and implementing job dependencies and sequencing. I gave you some best practices for monitoring job metrics and logs using CloudWatch, identifying performance bottlenecks and optimization opportunities, and for blaming everything on your code. Nearing the end we discussed some architectural best practices for high availability, scalability, resilience, and security. And as a finishing move I told you about a real architecture I designed using AWS Batch.

I'll leave you with just one takeaway: Keep your architecture as simple as possible, and use AWS Batch only for things that are batch processes at the business level. AWS Batch is so good you can do nearly anything with it, including building a CI/CD Pipeline, creating an event-driven workflow, or doing database backups. But it's the wrong tool for that. Let the business drive your architecture, and when the business dictates something needs to be processed in batches, then use AWS Batch.