Part 1 of this series, Architecture Concerns, dealt with architectural concerns regarding serverless in general and AWS Lambda in particular. Part 2, Architecture Design, explored those same concerns in an example. Part 3 (this one) is less about architecture per se, and more about best practices.

I've often argued that architecture is a set of decisions and tradeoffs, and I purposefully blur the line between architecture and design. To be fair, this post is probably 90% design and only 10% architecture. However, as an architect I've found that I can add value by explaining best practices and helping implement them, which is mostly advice on design. Hopefully you'll find this post helpful, and will be able to add value that way.

Understanding AWS Lambda: An Overview

We've talked about this a lot, especially in 20 Advanced Tips for AWS Lambda and Architecting with AWS Lambda: Architecture Concerns. Let me go over it real quick, or feel free to skip to the next title.

AWS Lambda: The Basics

AWS Lambda is a serverless compute service where you create functions and define event sources as triggers. When a trigger fires, a new execution of that Lambda function starts, either reusing an existing execution environment or creating a new one. As with anything serverless, there are servers, but it's not your responsibility to manage them.

Key terms:

Function: A resource that includes your code, configurations (memory, networking, etc) and triggers. It's essentially a definition that will be instantiated when the trigger occurs.

Event: The bundle of data that AWS Lambda receives when the trigger occurs.

Execution: An instance of a Lambda function, which receives the event and runs your code.

Execution environment: A virtual server where executions can run. Execution environments are managed by AWS Lambda, and are reused across executions.

Key Benefits of Using AWS Lambda

The biggest benefit of AWS Lambda is how fast execution environments are created, compared to EC2 instances or Fargate containers. Scaling out takes single-digit seconds instead of minutes, which means you can keep your excess capacity at 0 (you don't manage it anyway, nor pay for it, you only pay for execution time) and scale when needed.

Another benefit is that many infrastructure concerns become configuration concerns: How much memory, environment variables, networking configuration, etc.

Finally, integrating it with other AWS services is really easy: You can trigger Lambda functions from changes in DynamoDB tables, uploads to S3, alarms in CloudWatch, HTTP(S) requests using API Gateway, workflows in AWS Step Functions, and a long list of other things.

Starting a New Serverless Project with AWS Lambda

AWS in general is very far from trivial, as proven by the fact that I have written 50 articles about it and 3600 people read them. Coding isn't trivial either. In most projects, we split that complexity between cloud/devops engineers and software engineers (devs). However, with Lambda a lot of those infrastructure concerns become configuration issues, and the responsibility for that moves from cloud engineers to devs. Meaning, devs need to really understand how AWS Lambda works to use it effectively. That's where an AWS expert can provide significant help, and especially at the beginning of the project.

Organizing Code in a Lambda Function

The entry point for a Lambda function is the Lambda handler method, which is run when the execution starts, and receives the event that triggered the execution. That method is in a file usually called handler.(js/ts/py/java/go/etc). All the top-level code in that file, by which I mean code outside the handler method, is executed once when the execution environment starts (i.e. on a cold start), and isn't executed again for later executions that reuse that environment. Initialize there any resource that will be reused across executions, such as database clients or connections.

Screenshot of code in a Lambda function

After that, use the handler method to extract the relevant information from the event, call other methods to do stuff with that, and send the response (if any). Follow the single responsibility principle in structuring your code: have one method do one thing, and group them in modules.
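Here's a minimal sketch of that layout in Python. The names `SETTINGS` and `build_message` are made up for illustration, and the dictionary is only a stand-in for a real reusable resource such as a database client.

```python
import json
import time

# Top-level code: runs once per execution environment, on a cold start.
# A real function would create SDK clients or database connections here.
ENVIRONMENT_STARTED_AT = time.time()
SETTINGS = {"greeting": "Hello"}  # hypothetical stand-in for a reusable resource


def build_message(name: str) -> str:
    """Business logic lives outside the handler so it can be tested on its own."""
    return f"{SETTINGS['greeting']}, {name}!"


def handler(event, context):
    """Entry point: extract input from the event, delegate, build the response."""
    name = event.get("name", "world")
    return {
        "statusCode": 200,
        "body": json.dumps({"message": build_message(name)}),
    }
```

Keeping the handler this thin means most of your code can be unit tested without building a Lambda event at all.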

Overall, treat a Lambda function as a single service. And... write good code? Sorry, I can't summarize good coding practices in two paragraphs. There's nothing specific to serverless about this part.

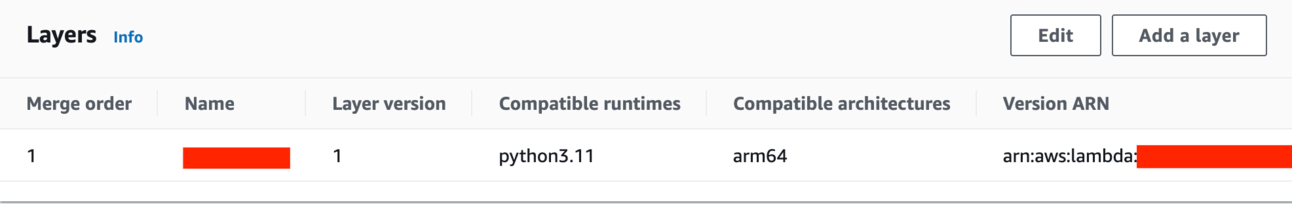

Effective Use of Lambda Layers

AWS Lambda layers are a way to share code, dependencies, and even custom runtimes across multiple functions. They make it easier to reuse code and manage shared dependencies, especially versions of libraries.

The key point of layers is managing dependencies that are shared across multiple functions. By centralizing them in layers, you can version shared code and libraries in one place and roll out updates to all functions much more easily, which keeps dependencies consistent across functions.

Additionally, by moving dependencies to layers, you can optimize the deployment package size of your Lambda functions, resulting in faster deployments.

Essentially, you package the shared code as a layer and upload it to your AWS account. Then you configure a function to use that layer (a function can use several), and it's as if the function contained those modules. Of course, you should do this with an Infrastructure as Code tool such as AWS SAM.
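To give an idea of what goes inside a layer, here's a rough sketch that builds the zip archive for a Python layer. For the Python runtimes, Lambda expects layer code under a `python/` folder inside the archive. The module name `shared_utils.py` and its `slugify` function are hypothetical, and uploading the archive is left to your IaC tool.

```python
import io
import zipfile


def build_python_layer_zip(modules: dict[str, str]) -> bytes:
    """Package source files under python/, where Lambda looks for Python layer code."""
    buffer = io.BytesIO()
    with zipfile.ZipFile(buffer, "w", zipfile.ZIP_DEFLATED) as archive:
        for filename, source in modules.items():
            archive.writestr(f"python/{filename}", source)
    return buffer.getvalue()


# Hypothetical shared module that several functions would import.
layer_zip = build_python_layer_zip(
    {"shared_utils.py": "def slugify(text):\n    return text.lower().replace(' ', '-')\n"}
)
```

A function that uses this layer could then simply `import shared_utils`, as if the module were part of its own deployment package.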

Screenshot of a Lambda Layer in the AWS Lambda console

Managing Multiple AWS Lambda Functions

Overall, you should treat them as different microservices (yes, I said the word). They're not always (or often) microservices, because a microservice owns its data, but code-wise and management-wise you should follow most of the good practices for microservices (or rather, for independently scalable services):

Statelessness: Ensure that your functions are stateless; any state should be stored in a database or cache.

Idempotency: Make sure your functions can be retried safely without unwanted side effects, especially in the face of failures.

Fine-grained Permissions: Assign the least privilege IAM roles to each function to limit the blast radius of security issues.

Monitoring and Logging: Implement detailed monitoring, logging, and alerting to quickly identify and respond to issues.

Cold Start Optimization: Minimize initialization code and dependencies to reduce cold start times, considering provisioned concurrency where needed.

Decoupling: Consider event-driven architectures with SNS, SQS, or EventBridge to decouple components and enable asynchronous processing.

Timeouts and Retries: Set appropriate timeouts for your functions and handle retries to ensure resilience.

Local Development: Use tools like SAM or Serverless Framework for local testing and deployment automation.

Resource Optimization: Monitor and adjust memory and resource allocations based on actual usage patterns to optimize cost.

Distributed Tracing: Implement distributed tracing to monitor and debug complex transactions across multiple services.

Rate Limiting: Implement throttling and rate limiting to protect your services and downstream systems from spikes.

Caching: Use caching mechanisms like ElastiCache or DynamoDB DAX to improve response times and reduce the load on downstream services.

CI/CD: Create CI/CD pipelines for automated testing and deployment.

You can read more about this at Managing Multiple AWS Lambda Functions.
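Of the practices above, idempotency is the one that most often needs actual code. Below is a minimal sketch, assuming the caller sends an `idempotency_key` field (a made-up name). The in-memory dictionary is only there so the example runs on its own: a real function is stateless, so the record would live in something like a DynamoDB table written with a conditional put.

```python
processed_results = {}  # stand-in for an external store; never rely on this in Lambda
charge_log = []         # records side effects so we can see how many happened


def charge_customer(order_id: str, amount: int) -> dict:
    """Placeholder side effect; a real one would call a payment API."""
    charge_log.append(order_id)
    return {"order_id": order_id, "charged": amount}


def handler(event, context):
    key = event["idempotency_key"]
    if key in processed_results:
        # Retry of an event we've already handled: return the stored result
        # without repeating the side effect.
        return processed_results[key]
    result = charge_customer(event["order_id"], event["amount"])
    processed_results[key] = result
    return result
```

If Lambda retries the same event, the second execution returns the stored result and the customer is charged only once.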

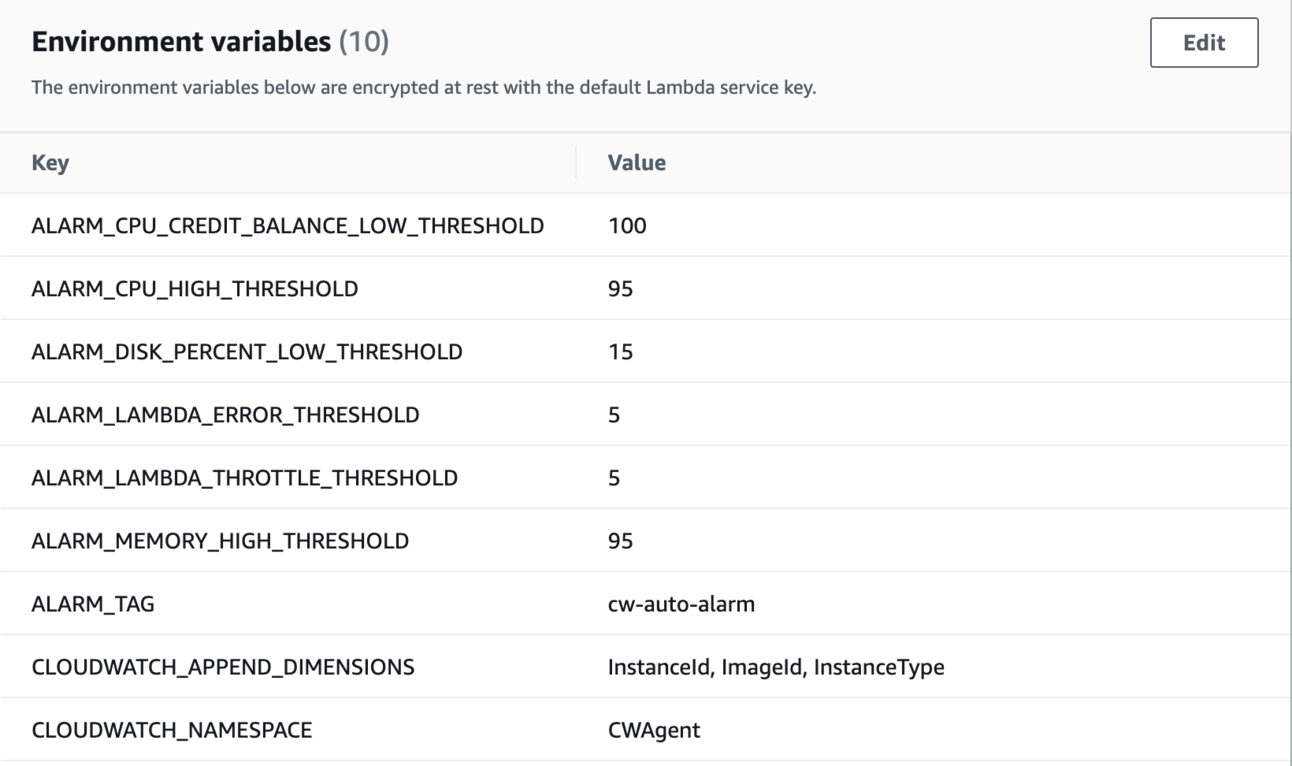

Configuring Environment Variables

Environment variables are part of a Lambda function's configuration, meaning you don't need a separate service like Systems Manager Parameter Store for plain settings. Lambda encrypts them at rest, but anyone with permission to view the function's configuration can read their values, so you'll still need Secrets Manager for secrets. Aside from that, they work just like environment variables in any other platform.

The overall recommendation, which should be obvious but it's something that I see a lot of teams doing wrong, is to pass as environment variables any value that varies per environment. Told you it should be obvious! But seriously, if you're using or helping someone use AWS Lambda, pay attention to this.
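As a small sketch of what that looks like in code: read every per-environment value in one place and fail fast when a required one is missing. The variable names (`TABLE_NAME`, `LOG_LEVEL`, `API_TIMEOUT_SECONDS`) are made up for this example.

```python
import os


def load_config(env=os.environ) -> dict:
    """Collect all per-environment settings in a single place."""
    return {
        "table_name": env["TABLE_NAME"],  # required: raises KeyError if missing
        "log_level": env.get("LOG_LEVEL", "INFO"),
        "timeout_seconds": float(env.get("API_TIMEOUT_SECONDS", "3")),
    }
```

Calling `load_config()` from top-level code means a missing variable breaks the function on its first cold start, which is much easier to spot than a failure halfway through a request.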

Screenshot of environment variables in AWS Lambda

Setting the Right Memory and CPU Settings

Fine-tuning the memory size is essential to get the right balance of performance and cost. CPU is tied to memory, so increasing memory increases the amount of CPU assigned to that function. In most cases, you should pay a lot of attention to the minimum amount of memory your function needs to run well, which depends mostly on the libraries that you're using and the programming language you picked (Java uses more memory, obviously). In some cases, depending on what your function does, the amount of CPU will make a big difference.
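Because you pay for memory multiplied by duration, more memory isn't always more expensive: if the extra CPU makes the function finish sooner, the bill can go down. Here's a quick sketch of that arithmetic. The durations are invented measurements, the price is an assumed x86 on-demand rate that you should check against current pricing, and the per-request charge is left out.

```python
PRICE_PER_GB_SECOND = 0.0000166667  # assumed x86 on-demand rate, in USD


def cost_per_million(memory_mb: int, avg_duration_ms: float) -> float:
    """Duration cost in USD of one million invocations (request charge excluded)."""
    gb_seconds = (memory_mb / 1024) * (avg_duration_ms / 1000)
    return gb_seconds * PRICE_PER_GB_SECOND * 1_000_000


# Hypothetical measurements: memory (MB) -> average duration (ms)
measurements = {512: 800.0, 1024: 380.0, 2048: 210.0}
costs = {mb: cost_per_million(mb, ms) for mb, ms in measurements.items()}
cheapest = min(costs, key=costs.get)
```

With these made-up numbers the 1024 MB setting comes out cheapest, even though it has twice the memory of the smallest option. Measure your own function before deciding.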

Table of how much CPU a Lambda function gets per range of memory (MB)

Setting Up an IAM Role

As you know, to access anything in AWS you need credentials with the right permissions. Don't put these in the code! Don't even put them in environment variables. Instead, you need to set up an IAM Role for the Lambda function, which has the necessary permissions and not more (this is called the principle of least privilege). Lambda assumes that role on behalf of your function and hands temporary credentials to the execution environment, where the AWS SDK picks them up automatically and uses them to sign your requests, so your code runs with that Role's permissions. This way, you don't create long-lived credentials, and don't put them anywhere.
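To make least privilege concrete, here's a sketch of the two policy documents behind such a role, written as Python dictionaries. The table ARN, account ID and the choice of DynamoDB actions are placeholders for illustration, not a recommendation for your function.

```python
import json

# Hypothetical table ARN; replace region, account ID and table name with yours.
TABLE_ARN = "arn:aws:dynamodb:us-east-1:123456789012:table/orders"

# Trust policy: lets the Lambda service assume the role.
trust_policy = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",
            "Principal": {"Service": "lambda.amazonaws.com"},
            "Action": "sts:AssumeRole",
        }
    ],
}

# Permissions policy: only the actions this function needs, on one resource.
permissions_policy = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",
            "Action": ["dynamodb:GetItem", "dynamodb:PutItem"],
            "Resource": TABLE_ARN,
        }
    ],
}

policy_json = json.dumps(permissions_policy, indent=2)
```

Notice there's no `dynamodb:*` and no `"Resource": "*"`. If this function gets compromised, the damage is limited to reading and writing items in that one table.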

Error Handling in AWS Lambda

There are two things that are inevitable: the heat death of the universe, and errors in software. Configuration issues, typos, an environment randomly failing, a wider outage, a downstream error, a malformed request, and many other things can cause the heat death of the universe. I mean, can cause errors in your Lambda functions. Let's talk a bit about what you can do about them.

Understanding Dead Letter Queue (DLQ) and Retries

When a Lambda function fails, AWS provides mechanisms to handle those failures gracefully. By default, AWS Lambda will attempt to retry an asynchronous invocation twice before considering it a failure (you can configure this number). But what happens after those retries?

Enter the Dead Letter Queue (DLQ). A DLQ is an SNS topic or SQS queue where AWS Lambda can direct the event that caused a function to fail. This ensures that you don't lose any data and gives you the ability to manually process the failed event later, either for transaction purposes or just for reproducing the error.

Implementing a DLQ is as simple as designating an SNS topic or SQS queue in your function's configuration. Once set, failed events will be sent to the DLQ, along with attributes describing the failure (the request ID, error code, and error message).
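If you'd rather script it than click through the console, this is roughly what it looks like with boto3. It's a sketch: the client is passed in as a parameter (you'd create it with `boto3.client("lambda")`), and the function name and queue ARN are whatever your project uses.

```python
def configure_failure_handling(lambda_client, function_name, dlq_arn, max_retries=2):
    """Set the async retry attempts and attach a DLQ to one function."""
    if not 0 <= max_retries <= 2:
        raise ValueError("Lambda accepts between 0 and 2 async retry attempts")
    # Number of retries for asynchronous invocations.
    lambda_client.put_function_event_invoke_config(
        FunctionName=function_name,
        MaximumRetryAttempts=max_retries,
    )
    # Where events go once the retries are exhausted.
    lambda_client.update_function_configuration(
        FunctionName=function_name,
        DeadLetterConfig={"TargetArn": dlq_arn},
    )
```

Keep in mind that the function's IAM Role needs permission to send messages to that queue or topic, otherwise the failed events won't get there.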

Pro tip: always monitor your DLQ. A growing DLQ can be an early indicator of systemic issues that need addressing. And by monitor I mean set up metrics and alerts!

Screenshot of how to configure a DLQ in the AWS Lambda console

Versioning and Aliases

As your application evolves, your Lambda functions will too. But how do you manage updates, especially in a production environment, without causing disruptions?

Lambda allows you to publish immutable versions that have their own Amazon Resource Name (ARN). You can reference a specific version of a Lambda function, letting you update and roll back easily. When you create or update a function, you're actually working on the $LATEST version, which is the only mutable one and always holds your most recent code and configuration.

On top of versions, you can create aliases for your functions. An alias is just a pointer to a version, which you can update to point to another version at any moment. Lambda aliases have their own ARN as well, meaning you can target aliases from other resources (e.g. from an Amazon API Gateway API), and update the alias whenever you want, thus automatically pointing those other resources to the newest version of your code (or the previous one, if you broke something). It's really common and highly recommended to have DEV and PROD aliases.
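Here's a sketch of a release and a rollback using boto3's Lambda client, which is passed in as a parameter (created elsewhere with `boto3.client("lambda")`). It assumes the alias already exists, since `update_alias` doesn't create it.

```python
def promote(lambda_client, function_name, alias="PROD"):
    """Publish the current $LATEST as a new version and point the alias at it."""
    published = lambda_client.publish_version(FunctionName=function_name)
    lambda_client.update_alias(
        FunctionName=function_name,
        Name=alias,
        FunctionVersion=published["Version"],
    )
    return published["Version"]


def roll_back(lambda_client, function_name, previous_version, alias="PROD"):
    """Point the alias back at a version that is known to work."""
    lambda_client.update_alias(
        FunctionName=function_name,
        Name=alias,
        FunctionVersion=previous_version,
    )
```

Anything that targets the alias's ARN follows along automatically, so a rollback is a single API call and doesn't need a redeploy.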

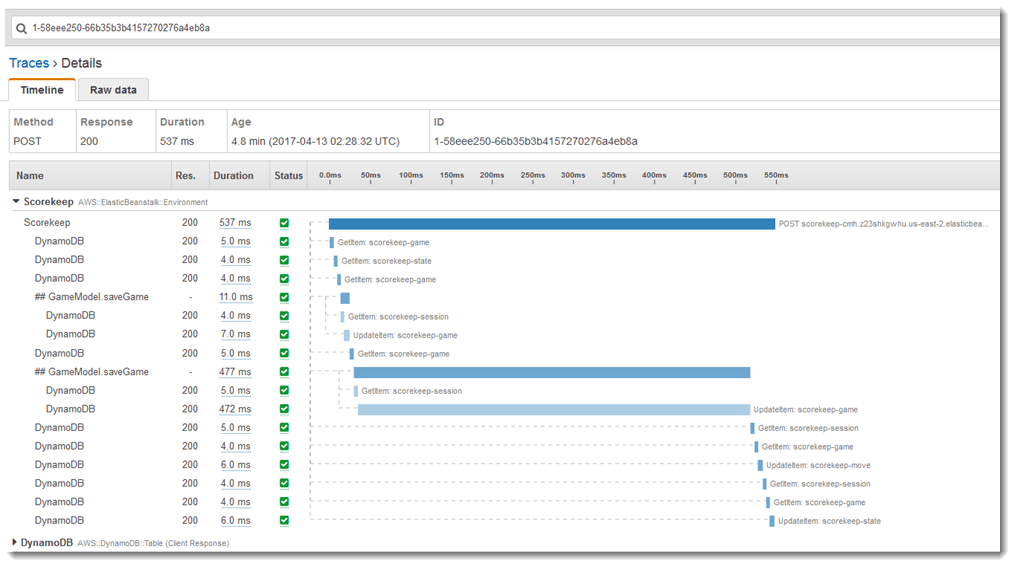

AWS Lambda Monitoring and Tracing

Monitoring and Tracing essentially means being able to figure out what on Earth is going on with your Lambda functions. Sounds easy, but when you're running 40 different functions, the average transaction involves at least 4 of them, and some are literally called by your database, understanding the big picture can get a bit complex. Here's what to do in AWS Lambda.

Viewing Metrics in Amazon CloudWatch

Amazon CloudWatch integrates seamlessly with AWS Lambda, showing you all the metrics in beautiful (ok, not so beautiful) graphs. You can even use CloudWatch to create alerts on those metrics, and get notified about them.

Key metrics to monitor:

Invocation Count (the Invocations metric): Number of times your function has been called.

Duration: How long your function takes to run.

Error Count (the Errors metric): Number of invocations that resulted in an error.
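Metrics are only useful if someone looks at them, so here's a sketch of an alarm on a function's errors using boto3's CloudWatch client, passed in as a parameter (created with `boto3.client("cloudwatch")`). The alarm name, the threshold of zero and the one-minute period are choices made for this example; tune them to your traffic.

```python
def create_error_alarm(cloudwatch_client, function_name, sns_topic_arn):
    """Notify an SNS topic whenever the function reports any error in a minute."""
    cloudwatch_client.put_metric_alarm(
        AlarmName=f"{function_name}-errors",
        Namespace="AWS/Lambda",
        MetricName="Errors",
        Dimensions=[{"Name": "FunctionName", "Value": function_name}],
        Statistic="Sum",
        Period=60,
        EvaluationPeriods=1,
        Threshold=0,
        ComparisonOperator="GreaterThanThreshold",
        TreatMissingData="notBreaching",  # no invocations means no errors
        AlarmActions=[sns_topic_arn],
    )
```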

Configuring AWS X-Ray for a Lambda Function

CloudWatch can help you understand one function. But when a use case spans several functions, you need to follow the trail and view all of those functions as a group to understand what's happening (or what isn't). That is called tracing, and that's where AWS X-Ray comes in.

Enabling X-Ray for your Lambda functions is easy: in the AWS Lambda console, just check the "Enable active tracing" box. Once enabled, you'll start seeing trace data in the X-Ray console, visualizing how your function interacts with other AWS services and resources. If you're seeing too much data (or rather, paying too much for it), you can enable sampling, so you only trace, say, 10% of the invocations.

You can also use the X-Ray SDK to add more granular data to those traces. I wrote an article about that, titled Observability With AWS X-Ray.

Screenshot of a segment in AWS X-Ray

Configuring AWS Lambda Concurrency

Your AWS Lambda functions run on execution environments that are created as needed, reused whenever possible, and destroyed after they haven't been used for a while (at least 15 minutes after the last use, according to unofficial sources). Provisioned Concurrency and Reserved Concurrency are two settings that affect the number of execution environments for a Lambda function. I wrote a bit about them on Architecting with AWS Lambda: Architecture Concerns, but let me explain further.

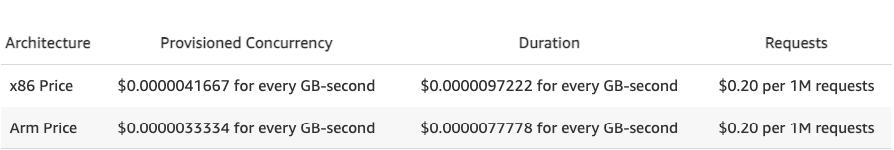

Provisioned Concurrency

Provisioned Concurrency tells the AWS Lambda service to keep a certain number of execution environments already running (aka pre-warmed), ensuring that they're available without any cold-start delays. When the function is triggered, a new execution is started, which can be run on one of these execution environments without waiting for a cold start.

If we compare AWS Lambda to an ECS or EKS (Kubernetes) cluster running on Amazon EC2, we can think of execution environments as the underlying EC2 instances created by the auto scaling group. By default, Lambda will set a minimum of 0 instances, so if there are no executions running, the auto scaler will terminate all instances (i.e. execution environments) and scale down to 0. If a request arrives then, the execution that handles it (which would be the container in our ECS/EKS analogy) will need to wait for an execution environment (EC2 instance or Kubernetes node in our analogy) to start. Provisioned Concurrency sets that minimum to a number other than 0, so there will always be a minimum of execution environments already running, even when the system is at 0 load.

Bar chart of response times of an AWS Lambda function with and without Provisioned Concurrency

When Provisioned Concurrency is enabled, you need to pay for those execution environments that are always running, at a rate of $0.0000041667 (x86) or $0.0000033334 (arm) for every GB-second that is provisioned. Executions on those environments are billed at a rate of approximately 60% of the standard rate. Here's the pricing.

Price of AWS Lambda Provisioned Concurrency

If your function receives more requests than your provisioned environments can handle, Lambda will create additional execution environments to serve those requests. Executions handled by those additional environments are billed at the standard AWS Lambda rate, not at the provisioned concurrency rate.
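To get a feel for the always-on part of the bill, here's a quick calculation using the x86 rate quoted above. It only covers the charge for keeping environments provisioned. The executions that run on them are billed on top of that, and the example sizes (5 environments of 1 GB) are made up.

```python
PROVISIONED_RATE_X86 = 0.0000041667  # USD per provisioned GB-second, from the text above
SECONDS_PER_DAY = 24 * 60 * 60


def provisioned_cost(environments: int, memory_mb: int, days: int = 30) -> float:
    """USD cost of keeping environments provisioned, excluding execution charges."""
    memory_gb = memory_mb / 1024
    return environments * memory_gb * days * SECONDS_PER_DAY * PROVISIONED_RATE_X86


# Roughly 54 USD for 5 environments of 1 GB kept warm for 30 days.
monthly = provisioned_cost(environments=5, memory_mb=1024)
```

That's what you pay even at 0 load, so provision for the baseline where cold starts actually hurt and leave the spikes to on-demand environments.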

Pro tip: If you need to eliminate cold starts for a certain baseline traffic, enable provisioned concurrency. If you're fine with cold starts but you have a baseline of traffic and you want to pay less for it, you're looking for Compute Savings Plans for AWS Lambda.

Reserved Concurrency

AWS Lambda has an account-wide soft limit (quota) of 1000 execution environments per Region, shared by all Lambda functions in that Region. Reserved Concurrency sets a limit on how many execution environments a Lambda function can have, and it also reserves a part of that account-wide limit for that particular function. When you enable Reserved Concurrency, you're guaranteed that the function will always have that portion of the quota available to it. For example, if you set the concurrency limit to 100, then all your other functions combined will only be able to use a total of 900 execution environments, and this function will be able to use up to 100 environments. Additionally (or possibly However), this function won't be able to use more than 100 execution environments.

Reserved Concurrency can be combined with Provisioned Concurrency to limit the number of execution environments to those that you've pre-provisioned. Or they can be used separately.

A great use of reserved concurrency is as a guardrail against spikes caused by a bug such as an infinite invocation loop. In an infinite invocation loop, an AWS Lambda function performs an action that makes it trigger itself, for example writing to an S3 bucket which is the trigger for that same function. This leads to the function being invoked infinitely, up to 1000 executions at a time (account-wide limit). If you set reserved concurrency to a low value such as 10, the billing spike resulting from that error will be just 1% of what it can be without that limit.
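Setting that guardrail is a single call with boto3's Lambda client, which is passed in as a parameter in this sketch (created with `boto3.client("lambda")`). The default limit of 10 is just the example value from the paragraph above.

```python
def cap_concurrency(lambda_client, function_name, limit=10):
    """Reserve (and cap) the number of concurrent executions for one function."""
    if limit < 0:
        raise ValueError("limit must be zero or a positive number")
    lambda_client.put_function_concurrency(
        FunctionName=function_name,
        ReservedConcurrentExecutions=limit,
    )
```

A limit of 0 throttles every invocation of that function, which also makes this a handy emergency stop if a loop is already running.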

Conclusion

This marks the end of the Architecting with AWS Lambda series. Part 1 took us through serverless concepts and considerations, from an architecture point of view. Part 2 put those concepts and considerations to practice, where we architected and designed a serverless application using AWS Lambda. Part 3 (this one) was about best practices that you or your teams can use to build better software using AWS Lambda.

Neither this article nor the whole series is meant to be a complete course on serverless. It is, however, meant to serve as a source of knowledge and prescriptive guidance to architect better applications using serverless in general and AWS Lambda in particular. I hope you learned something new, and ideally something useful. But most of all, I hope you enjoyed it!