AWS Lambda is a serverless computing platform offered by Amazon Web Services (AWS) that lets you run code without needing to worry about servers. But you already knew that! In this article we'll dive a lot deeper into Lambda, and talk about architecting solutions with Lambda.

When Should I Use AWS Lambda?

You can use Lambda to build simple or complex applications. The main advantage is that you can deploy services independently, whether it's 3 or 300 services. These services can respond to HTTP requests, or be part of an event-driven architecture. You can even create microservices! (You don't have to though). Lambda is ideal for services that need to scale out really fast, those that need to scale down to 0, and even for tasks that run infrequently like automating cloud operations.

Serverless Microservices with AWS Lambda

I've already written a lot about microservices in Microservices in AWS: Migrating from a Monolith. The long and short of it is that microservices are like regular services, but each one is scoped to a bounded context, which includes its data. You don't need to do microservices just because you're using Lambda, and using Lambda is not enough to turn your services into microservices. 99% of applications do not need microservices though, so don't lose sleep over them.

The biggest benefit of using Lambda for microservices is that scaling each microservice independently (which is a requirement for microservices) takes very little work, since Lambda does it automatically. Compared with serverful microservices (e.g. something running on an EC2 instance), microservices are much easier to implement on Lambda. That said, microservices are never actually easy, even with Lambda.

Event-Driven Architectures with AWS Lambda

Event-driven architectures are composed of components communicating by emitting events and reacting to events. With AWS Lambda, you can trigger functions in response to events from several AWS services, like S3, DynamoDB, or Amazon SNS. Since this is the default way Lambda works, it's really easy to configure the event-driven part using AWS Lambda. However, even though the infrastructure part is greatly simplified, event-driven architectures are still significantly more complex than "regular", request/response architectures.

Let's assume you're dealing with event-driven architectures though. Lambda's trigger options let you subscribe a Lambda function to almost anything, either directly or through an SNS topic. Additionally, Lambda functions can scale down to 0, and when an event arrives through an asynchronous invocation (which is how sources like S3 and SNS invoke Lambda), the AWS Lambda service holds that event in an internal queue while your function's execution environment starts up, similar to what an SQS queue would do, but automatic and free.
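
As a rough illustration of a function reacting to events, here's a minimal sketch of a Python handler subscribed to S3 object-created notifications. It assumes the standard S3 event shape (a `Records` list with `s3.bucket.name` and `s3.object.key`); the bucket name, object key, and the processing step are hypothetical.

```python
import json
from urllib.parse import unquote_plus


def handler(event, context):
    """Sketch of a function triggered by S3 ObjectCreated notifications."""
    processed = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        # Object keys arrive URL-encoded (spaces become '+'), so decode them first.
        key = unquote_plus(record["s3"]["object"]["key"])
        # Hypothetical processing step: replace with your own logic.
        processed.append(f"{bucket}/{key}")
    print(json.dumps({"processed": processed}))  # ends up in CloudWatch Logs
    return {"processed": processed}
```

A single event can carry several records, which is why the handler loops instead of reading only the first one.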

To handle complex workflows in response to events, you can use AWS Step Functions to orchestrate multiple Lambda functions and other AWS services. Furthermore, you can connect Lambda functions to Kinesis Data Streams for real-time processing of event streams.
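
To give a feel for orchestration, here's a minimal sketch of a Step Functions state machine in Amazon States Language, built as a Python dict, that invokes two Lambda functions in sequence and retries the second one on failure. The function names, ARNs, and account ID are hypothetical placeholders.

```python
import json

# Hypothetical Lambda function ARNs; replace with your own.
VALIDATE_ARN = "arn:aws:lambda:us-east-1:123456789012:function:validate-order"
CHARGE_ARN = "arn:aws:lambda:us-east-1:123456789012:function:charge-payment"

state_machine = {
    "Comment": "Sketch: validate an order, then charge the payment",
    "StartAt": "ValidateOrder",
    "States": {
        "ValidateOrder": {
            "Type": "Task",
            "Resource": VALIDATE_ARN,  # invoke the first Lambda function
            "Next": "ChargePayment",
        },
        "ChargePayment": {
            "Type": "Task",
            "Resource": CHARGE_ARN,
            # Retry up to twice if the task fails, with exponential backoff.
            "Retry": [
                {
                    "ErrorEquals": ["States.TaskFailed"],
                    "IntervalSeconds": 2,
                    "MaxAttempts": 2,
                    "BackoffRate": 2.0,
                }
            ],
            "End": True,
        },
    },
}

definition = json.dumps(state_machine, indent=2)
```

You could pass `definition` to the Step Functions `CreateStateMachine` API (for example `boto3.client("stepfunctions").create_state_machine(name=..., definition=definition, roleArn=...)`), though in practice you'd more likely define it in your infrastructure-as-code tool.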

Overall, event-driven architectures are really complex to design and maintain, and they're not the best option for most use cases. However, if you've decided they're the best option for your case, AWS Lambda makes the implementation (not the design) a lot easier.

Using AWS Lambda to Automate Cloud Operations

You can use a Lambda function to run automation code that performs cloud operations, possibly triggered by events emitted by Amazon EventBridge. AWS Lambda helps you in two ways:

First, it makes triggering the function code really easy, since Lambda natively integrates with EventBridge.

Second, you only pay for the time the function is actually running, which matters when you have dozens of functions that each run only once or twice a day.

You can integrate Lambda with AWS Config to automate compliance checks and remediation actions, with Amazon CloudWatch alarms to automate responses to alarms, with AWS Security Hub to automate responses to security findings, and with just about anything else you can detect in your AWS accounts or your workloads.
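
Here's a minimal sketch of that kind of automation: a Python function, meant to run on an EventBridge schedule, that stops running EC2 instances carrying a tag. The `AutoStop=true` tag convention is hypothetical, the EC2 client is passed in so the logic can be tested without AWS, and pagination of `describe_instances` is ignored for brevity.

```python
def stop_tagged_instances(ec2, tag_key="AutoStop", tag_value="true"):
    """Stop running EC2 instances tagged tag_key=tag_value; return their IDs."""
    response = ec2.describe_instances(
        Filters=[
            {"Name": f"tag:{tag_key}", "Values": [tag_value]},
            {"Name": "instance-state-name", "Values": ["running"]},
        ]
    )  # pagination (NextToken) ignored for brevity
    instance_ids = [
        instance["InstanceId"]
        for reservation in response["Reservations"]
        for instance in reservation["Instances"]
    ]
    if instance_ids:
        ec2.stop_instances(InstanceIds=instance_ids)
    return instance_ids


def handler(event, context):
    """Entry point, e.g. for an EventBridge schedule like cron(0 20 * * ? *)."""
    # boto3 ships with Lambda's Python runtimes; importing it here keeps the
    # helper above testable without AWS libraries installed.
    import boto3

    return {"stopped": stop_tagged_instances(boto3.client("ec2"))}
```

The same shape (an event-triggered handler wrapping a small, testable helper) works for AWS Config remediations or alarm responses; only the trigger and the API calls change.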

Serverless Architectures Explained

In a serverless architecture, creating and managing servers is not your responsibility. There are still servers, but they're abstracted away from you. You still need to understand several fine details of this abstraction, but it's easier than managing servers yourself. This lets you focus more on designing and writing your application code, and a bit less on the infrastructure.

The three key points of serverless are:

There are servers, but you don't manage them. You manage abstractions on top of them.

You don't need to implement scalability: things scale in and out automatically (though you can often configure how).

In AWS, anything serverless is highly available (i.e. multi-AZ).

And if I may add a fourth one: Serverless doesn't mean microservices, or event-driven, or any other software architecture pattern.

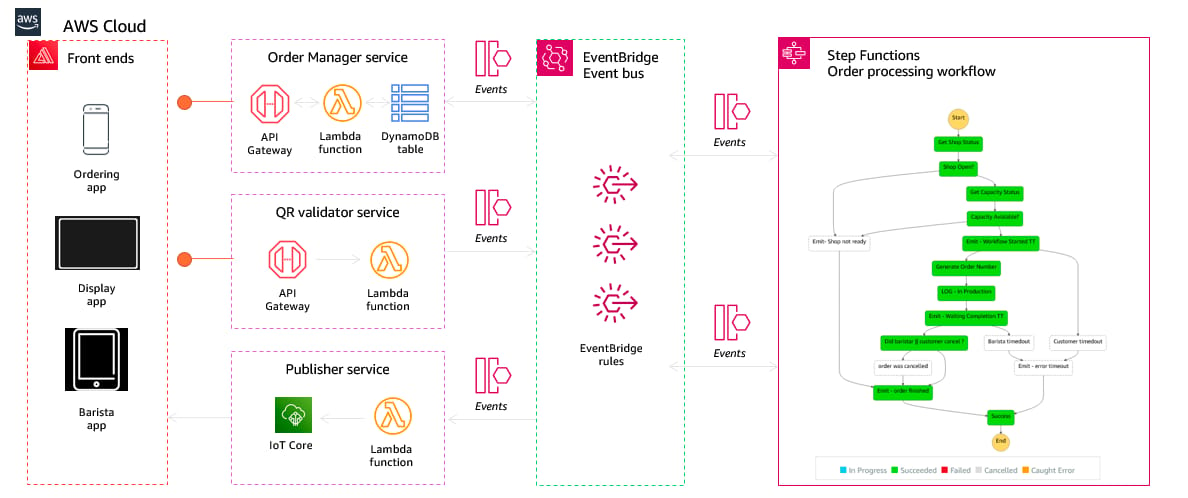

This is a complex serverless architecture, from the Serverlesspresso workshop. You don't need something this complex.

This article is centered around AWS Lambda, but let's talk a bit about serverless in general.

Serverless Compute

Are you ready to embrace the future of computing? Look no further than AWS Lambda, the revolutionary serverless computing service offered by Amazon Web Services (AWS).

Yeah, no, I'm kidding. That's ChatGPT, we don't do that here.

Serverless compute means compute abstractions on top of servers (which were already abstractions, since they're virtual). In AWS you have two serverless compute services:

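AWS Lambda: You create functions that contain your code and configuration. Each invocation runs in an execution environment until the invocation finishes, and those environments are created and reused on demand (more on that below). You set the memory, and pay for the time the function is running, measured in GB-seconds, plus a small per-request charge.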
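
To make GB-seconds concrete, here's a small Python sketch of the on-demand cost arithmetic: memory in GB times duration in seconds times number of invocations, plus the per-request charge. Prices are parameters on purpose, since they vary by Region and architecture (take them from the AWS Lambda pricing page), and the sketch ignores the free tier and other charges.

```python
def lambda_on_demand_cost(memory_mb, avg_duration_ms, invocations,
                          price_per_gb_second, price_per_million_requests):
    """Return (GB-seconds, estimated cost) for on-demand Lambda usage.

    Ignores the free tier, ephemeral storage, data transfer, and other charges.
    """
    gb_seconds = (memory_mb / 1024) * (avg_duration_ms / 1000) * invocations
    compute_cost = gb_seconds * price_per_gb_second
    request_cost = invocations / 1_000_000 * price_per_million_requests
    return gb_seconds, compute_cost + request_cost
```

For example, 3 million invocations of a 512 MB function averaging 200 ms come to 0.5 GB × 0.2 s × 3,000,000 = 300,000 GB-seconds, which you then multiply by your Region's price per GB-second.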

AWS Fargate: A serverless capacity provider for Amazon ECS and Amazon EKS, where your containers are deployed on servers managed by AWS, and you only pay for the vCPU and memory reserved for your containers (as requested in the ECS task definition or the Kubernetes pod spec, respectively).

Serverless Databases

There are 5 serverless database services in AWS:

Amazon DynamoDB: A NoSQL database that supports transactions, scales automatically, and can be used in event-driven architectures. I've written articles on each of those topics, and I also wrote one and delivered a presentation on designing data in DynamoDB.

Amazon Aurora Serverless: It's the serverless deployment option of Amazon Aurora, a relational database service compatible with MySQL and PostgreSQL. Aurora Serverless scales its capacity automatically, and can even scale down to zero, though the scaling isn't instantaneous; it's more akin to somehow running Aurora in an auto-scaling group.

Amazon Redshift Serverless: Redshift is a data warehouse suitable for analytics. Redshift Serverless is the serverless deployment option, very similar to what Aurora Serverless is to Aurora.

Amazon Neptune Serverless: Neptune is a graph database service that's scalable but runs on servers (EC2 instances, actually). Neptune Serverless is the serverless version of Neptune.

Amazon OpenSearch Serverless: OpenSearch is Amazon's fork of Elasticsearch, created after Elastic moved Elasticsearch from the open-source Apache 2.0 license to source-available licenses in early 2021, following years of conflict with AWS over its managed Elasticsearch service. OpenSearch Serverless is just the serverless version of OpenSearch.

How does AWS Lambda Function?

AWS Lambda functions are units of code deployment, each with its own separate configuration, and each scaling independently. They are usually structured around behavior, or around data access. They should have a single responsibility, but that doesn't mean responding to just one HTTP verb. For example, you might want to aggregate all CRUD operations on an entity in the same Lambda function. Another one might deal with a business transaction. Overall, don't feel limited by the AWS Lambda service, but instead organize your code around components that will be deployed separately, ignoring for a moment that you'll use Lambda to deploy them.
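
As an example of grouping all CRUD operations on one entity in a single function, here's a minimal Python sketch that dispatches on the HTTP method. It assumes the API Gateway REST API proxy integration event format (`httpMethod`, `pathParameters`, `body`) and a hypothetical `orders` entity kept in a module-level dict, which only stands in for a real table: it isn't shared across execution environments.

```python
import json

# Hypothetical in-memory store standing in for a real table. It is NOT shared
# across execution environments, so don't do this in production.
ORDERS = {}


def _response(status, body=None):
    """Build a response in the API Gateway proxy integration format."""
    return {
        "statusCode": status,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps(body) if body is not None else "",
    }


def handler(event, context):
    """Single function handling all CRUD operations on the 'order' entity."""
    method = event["httpMethod"]
    order_id = (event.get("pathParameters") or {}).get("id")

    if method == "GET" and order_id is None:  # GET /orders
        return _response(200, list(ORDERS.values()))
    if method == "GET":  # GET /orders/{id}
        order = ORDERS.get(order_id)
        if order is None:
            return _response(404, {"error": "order not found"})
        return _response(200, order)
    if method == "POST":  # POST /orders (assumes the body includes an "id")
        order = json.loads(event.get("body") or "{}")
        ORDERS[order["id"]] = order
        return _response(201, order)
    if method == "PUT" and order_id is not None:  # PUT /orders/{id}
        order = {**json.loads(event.get("body") or "{}"), "id": order_id}
        ORDERS[order_id] = order
        return _response(200, order)
    if method == "DELETE" and order_id is not None:  # DELETE /orders/{id}
        ORDERS.pop(order_id, None)
        return _response(204)
    return _response(405, {"error": f"unsupported route for {method}"})
```

The function is one unit of deployment with one responsibility (orders), even though it answers several HTTP verbs.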

PS: That title was 100% intentional

AWS Lambda Runtime Configurations

The first element of the runtime configuration for a Lambda function is the language. You can use Node.js, Python, Java, .NET, Go, Ruby, a custom runtime, or a container image (e.g. built with Docker). You can also set the instruction set architecture to x86_64 (Intel processors) or arm64 (Amazon's Graviton processors).

Next up, you configure the resources available to your Lambda function. You set the memory to anywhere between 128 MB and 10,240 MB (10 GB), and you get a number of vCPUs proportional to that amount of memory, which follows this table that was shown at an official re:Invent presentation and yet can't be found anywhere in the official AWS documentation 😡.

You also set the name of the function, the handler (which is like the entry point that's called when the function is invoked), and a few other things that I'll expand on below.
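
Put together, those settings map onto the parameters of the Lambda `CreateFunction` API. Here's a minimal Python sketch that builds them for boto3; the function name, role ARN, handler module (`app.py` with a `handler` function), runtime version, and timeout are hypothetical choices.

```python
def build_create_function_params(name, role_arn, zip_bytes, memory_mb=512, arm64=True):
    """Build parameters for the Lambda CreateFunction API (boto3 create_function)."""
    if not 128 <= memory_mb <= 10240:
        raise ValueError("memory must be between 128 MB and 10,240 MB")
    return {
        "FunctionName": name,
        "Runtime": "python3.12",  # language and version
        "Role": role_arn,  # the function's IAM execution role
        "Handler": "app.handler",  # entry point: handler() in app.py
        "Code": {"ZipFile": zip_bytes},  # deployment package (.zip contents)
        "MemorySize": memory_mb,  # the vCPU share scales with this
        "Timeout": 30,  # seconds; the maximum is 900 (15 minutes)
        "Architectures": ["arm64" if arm64 else "x86_64"],
    }
```

With credentials configured, `boto3.client("lambda").create_function(**params)` would then create the function.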

Lambda Execution Environments

You configure a trigger for your Lambda function, such as API Gateway, SNS or DynamoDB, which is the event source of the function. When that source sends an event to the AWS Lambda service, a new invocation of your Lambda function starts.

Think of an invocation as an execution instance of your function. Invocations are allocated an execution environment, which is like a virtual machine where the invocation runs.

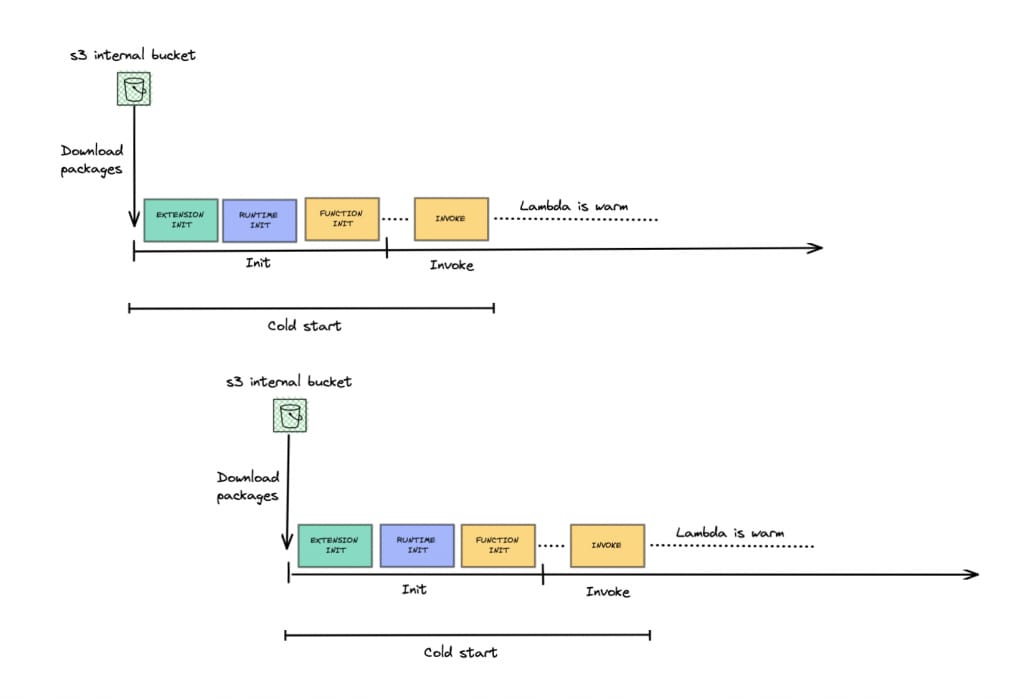

When there are no execution environments available, a new one is created, which is what we call a cold start. If there are execution environments available, they are reused for other invocations of the Lambda function, in what we call a warm start.

Cold start of a Lambda function

The lifecycle of these execution environments is not under your control. There's no guarantee that they will be kept alive, and short of Provisioned Concurrency (covered below), the only way to influence that is by triggering periodic executions, and even then there's no guarantee.

When a cold start occurs, all the code outside of the handler, called initialization code, is executed. Historically, this init phase wasn't billed for functions using a managed runtime and a .zip package, but AWS has since announced that it bills the init phase as well, so check the current pricing. After that, the invocation starts, and billing runs until the invocation finishes. Future invocations on that same execution environment won't run the initialization code again.
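
You can observe this split with a minimal Python sketch: module-level code runs once per execution environment, while the handler runs on every invocation. The counter and field names are purely illustrative; with Provisioned Concurrency the environment is initialized ahead of time, so its first invocation isn't a cold start from the caller's point of view.

```python
import time

# Initialization code: runs once per execution environment, during a cold start.
# Expensive setup (SDK clients, config loading, DB connections) belongs here.
INIT_TIME = time.time()
invocation_count = 0


def handler(event, context):
    # Handler code: runs on every invocation in this environment.
    global invocation_count
    invocation_count += 1
    return {
        "first_in_environment": invocation_count == 1,
        "invocations_in_this_environment": invocation_count,
        "environment_age_seconds": round(time.time() - INIT_TIME, 3),
    }
```

Invoking the function twice in quick succession will usually hit the same environment, so the second response reports two invocations and a non-first invocation.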

Permissions for AWS Lambda

Each Lambda function has an IAM execution role, with associated IAM policies. This lets you control the AWS permissions a Lambda function has, just like with an instance role for an EC2 instance.

As always, remember to follow the principle of least privilege when assigning permissions. This means giving the function only the permissions it needs to do its job, and nothing more. That includes granting permissions only on the resources it needs to access, and avoiding wildcard (*) permissions where possible.
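
As an illustration, here's a minimal Python sketch that builds a least-privilege policy for a hypothetical function that reads and writes a single DynamoDB table and writes to its own CloudWatch Logs log group. The Region, account ID, function name, and table name are placeholders, and it assumes the log group already exists (created by your infrastructure code).

```python
import json


def least_privilege_policy(region, account_id, function_name, table_name):
    """IAM policy scoped to one DynamoDB table and the function's own log group."""
    table_arn = f"arn:aws:dynamodb:{region}:{account_id}:table/{table_name}"
    log_group_arn = (
        f"arn:aws:logs:{region}:{account_id}:log-group:/aws/lambda/{function_name}:*"
    )
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Only the item-level operations the function actually uses.
                "Effect": "Allow",
                "Action": ["dynamodb:GetItem", "dynamodb:PutItem", "dynamodb:DeleteItem"],
                "Resource": table_arn,
            },
            {
                # Write logs to the function's own (pre-created) log group only.
                "Effect": "Allow",
                "Action": ["logs:CreateLogStream", "logs:PutLogEvents"],
                "Resource": log_group_arn,
            },
        ],
    }


policy_json = json.dumps(
    least_privilege_policy("us-east-1", "123456789012", "orders-fn", "orders"), indent=2
)
```

Compared with a policy allowing `dynamodb:*` on `*`, a bug or compromise in this function can only touch one table, and only through three operations.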

AWS Lambda Networking

By default, Lambda functions run in a shared VPC controlled by AWS, which has internet access and access to all public AWS services (that is, AWS services that don't run in a VPC, like S3 or SNS).

You can configure a Lambda function to run inside your own VPC, which associates it with an Elastic Network Interface (ENI) with a private IP address. This allows the Lambda function to access private resources in that VPC, such as RDS instances.

That ENI only has a private IP address, not a public one. That means if your Lambda function needs internet access, you'll need to place it in private subnets whose route tables send internet traffic through a NAT Gateway. Putting the function in a public subnet won't give it internet access.

You can assign security groups to your Lambda function's ENI, just like with any other VPC resource (in case you didn't know, security groups are always associated with ENIs). Traffic is also filtered by the network ACLs of the subnets your function uses.

All of this means that each of your Lambda function's execution environments will effectively work like an EC2 instance, at least in terms of networking.
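
In API terms, attaching a function to your VPC means setting its `VpcConfig`. Here's a minimal Python sketch that builds the parameters for the Lambda `UpdateFunctionConfiguration` API; the function name, subnet IDs, and security group IDs are hypothetical. Keep in mind the function's execution role also needs permissions to manage network interfaces (the `AWSLambdaVPCAccessExecutionRole` managed policy covers this).

```python
def vpc_config_params(function_name, subnet_ids, security_group_ids):
    """Parameters for boto3's lambda update_function_configuration to attach a VPC."""
    if not subnet_ids:
        raise ValueError("at least one subnet is required")
    return {
        "FunctionName": function_name,
        "VpcConfig": {
            # Private subnets, ideally in more than one Availability Zone; for
            # internet access they should route through a NAT Gateway.
            "SubnetIds": list(subnet_ids),
            # Security groups attached to the function's ENIs.
            "SecurityGroupIds": list(security_group_ids),
        },
    }
```

Passing the result to `boto3.client("lambda").update_function_configuration(**params)` would move the function into those subnets.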

Provisioned Concurrency and Reserved Concurrency

By default, an AWS account has a quota of 1,000 concurrent executions per Region, shared across all Lambda functions in that Region. If you need a higher limit, you can submit a quota increase request.

You have the option to use Reserved Concurrency to allocate a portion of the shared concurrency limit to a specific function. This ensures that part of the limit is reserved for that function, and it limits that function's concurrency to the value you set for reserved concurrency.

Don't confuse Reserved Concurrency with Provisioned Concurrency though. Provisioned Concurrency is a separate setting that can be used to keep a minimum number of execution environments running continuously. It doesn't impose any limit on the maximum concurrency of a function, even though the name is similar and easy to mix up. When you set Provisioned Concurrency, you're billed separately for those execution environments that are kept continuously running, even if there are no invocations.

Provisioned Concurrency can be combined with Reserved Concurrency. They're separate things, I just grouped them together in this section because it's easy to mix them up (I've done it, several times...)
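
Here's a minimal Python sketch of the reserved concurrency arithmetic, assuming the default quota of 1,000 and Lambda's rule that at least 100 units of concurrency must stay unreserved. The function names are hypothetical; the comments show the boto3 calls that set each kind of concurrency.

```python
DEFAULT_ACCOUNT_LIMIT = 1000  # default per-Region quota; check your own account
MIN_UNRESERVED = 100  # Lambda keeps at least this much concurrency unreserved


def unreserved_concurrency(reserved_by_function, account_limit=DEFAULT_ACCOUNT_LIMIT):
    """Return the concurrency left for functions without a reservation."""
    unreserved = account_limit - sum(reserved_by_function.values())
    if unreserved < MIN_UNRESERVED:
        raise ValueError(
            f"only {unreserved} unreserved; Lambda requires at least {MIN_UNRESERVED}"
        )
    return unreserved


# Setting each kind of concurrency with boto3 (not executed here):
#   lambda_client.put_function_concurrency(
#       FunctionName="orders-fn", ReservedConcurrentExecutions=200)
#   lambda_client.put_provisioned_concurrency_config(
#       FunctionName="orders-fn", Qualifier="live",  # a version or alias
#       ProvisionedConcurrentExecutions=10)
```

Note that reserving 200 for `orders-fn` both guarantees it 200 and caps it at 200, while the provisioned setting only keeps 10 environments warm.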

AWS Lambda and Amazon API Gateway

In its most basic form, API Gateway lets you invoke Lambda functions with HTTP requests. You can define routes and methods, such as GET /orders, and map them to the same or different Lambda functions. So, for example, you could have endpoints for GET /orders, PUT /orders, POST /orders and DELETE /orders all mapped to the same Lambda function, or each to a different function.

Unless you're using an event-driven architecture, most of your Lambda functions will probably be invoked via API Gateway. But API Gateway is not just a front door to Lambda.

API Gateway can handle authentication, TLS termination (converting HTTPS to HTTP), API keys with usage quotas, transformations of the request and response, monitoring, and more. To learn more, check out Monitor and Secure Serverless Endpoints with Amazon API Gateway.

When Not to Use AWS Lambda or Serverless

Architecture decisions depend on your specific requirements and use cases, and serverless is no exception. Sometimes it's the best option, sometimes it isn't. For example, long-running tasks or processes aren't suitable for Lambda functions, since their execution time is capped at 15 minutes.
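
One common way to work within that cap is to process work in chunks and stop before the timeout, handing the rest to another invocation. Here's a minimal Python sketch using the Lambda context's `get_remaining_time_in_millis()`; the `process` function, the `items` event field, and the 10-second safety margin are hypothetical.

```python
SAFETY_MARGIN_MS = 10_000  # hypothetical buffer before the function's timeout


def process(item):
    """Placeholder for your real per-item work (hypothetical)."""
    return item


def handler(event, context):
    """Process as many items as time allows; return the rest for a follow-up run."""
    items = event.get("items", [])
    done = 0
    for item in items:
        if context.get_remaining_time_in_millis() < SAFETY_MARGIN_MS:
            break  # stop early instead of being cut off mid-item
        process(item)
        done += 1
    return {"processed": done, "remaining": items[done:]}
```

The returned `remaining` list could be fed to another invocation, for example from a Step Functions loop. If the work regularly needs far more than 15 minutes, that's a sign it belongs on something like Fargate instead.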

One of the big advantages of serverless applications in general (not just Lambda) is that they scale really fast. That makes serverless a great choice for workloads with big traffic spikes, where an auto scaling group just won't scale fast enough. Conversely, workloads with predictable, consistent traffic patterns will usually be more expensive to run on any serverless configuration, be it for compute or data, than on provisioned capacity.

An additional aspect that you need to keep in mind when architecting solutions is familiarity with technologies. A development team with a lot of serverless experience will move really fast on serverless, and most if not all serverless experts will build PoCs much faster on serverless. However, devs who are unfamiliar with serverless architectures in general and with Lambda functions in particular will end up getting slowed down by the myriad of details that they otherwise just let a cloud engineer handle, and that they must now handle themselves when using serverless. Training can go a long way, but it can also take some time. This isn't a comment for or against serverless, just architecture advice.

Common Issues with AWS Lambda

Lambda is great, but (like any technology) it comes with its own set of challenges. First, there's the issue of cold starts, which add latency to the first invocation on a new execution environment, and you can't predict when they'll happen. You can try to keep execution environments warm, but you can't reliably eliminate cold starts other than with Provisioned Concurrency. Provisioned Concurrency works great in this regard, but it changes the billing model from paying per millisecond of execution to paying for provisioned capacity for as long as it's configured, since you're effectively provisioning environments.

Managing dependencies and deployment artifacts is another aspect that can become challenging. Compared to a monolith, now you're dealing with one artifact per function, with separate dependencies that need to be kept track of and updated. You can use Lambda Layers to manage shared dependencies, but even in that case, with multiple functions you'll end up combining several layers, which can quickly turn into a dependency Megazord. It's not an impossible problem, but it is hard.

Debugging and troubleshooting can also be more complex in a serverless environment. You can't SSH into anything (you shouldn't do that anyway), so you need to export all the information, such as logs and metrics. Moreover, you'll more often than not have a lot of Lambda functions, and cross-referencing logs can turn into detective work. You can use AWS X-Ray to make this easier, but it's still a common pitfall.
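
One way to make cross-referencing easier is to log structured JSON that always includes the request ID and a correlation ID passed between functions. Here's a minimal Python sketch; `aws_request_id` comes from the Lambda context object, while the `correlation_id` event field is a hypothetical convention of your own.

```python
import json
import logging

logger = logging.getLogger()
logger.setLevel(logging.INFO)


def log_json(context, message, **fields):
    """Emit one JSON log line tagged with the Lambda request ID."""
    line = json.dumps({"message": message, "request_id": context.aws_request_id, **fields})
    logger.info(line)
    return line


def handler(event, context):
    # Reuse the caller's correlation ID if present, so logs across functions can be joined.
    correlation_id = event.get("correlation_id", context.aws_request_id)
    log_json(context, "processing started", correlation_id=correlation_id)
    # ... actual work goes here ...
    log_json(context, "processing finished", correlation_id=correlation_id)
    return {"correlation_id": correlation_id}
```

Because every line is JSON with the same keys, a CloudWatch Logs Insights query can filter on `correlation_id` across all the functions that handled one request.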

Disadvantages of Serverless Architectures

You know I don't usually consider vendor lock-in a problem, but honestly you can't get much more vendor locked in than with serverless.

Additionally, certain programming languages or frameworks may have limited support in serverless architectures, such as Rust not being natively supported by AWS Lambda. You can get around this with a custom runtime or by packaging your function as a container image with Docker, but it adds a bit of complexity.

Serverless doesn't strictly mean event-driven, but in reality sometimes you start with serverless and end up with event-driven workflows, which can get rather complex.

I don't mean to put you off serverless, but it's very important that you understand the disadvantages, as much as the advantages.

This is part 1 of a three-part series. Read part 2: Architecting with AWS Lambda: Architecture Design.