Batch computing is how we process things when there’s no need for immediate interactive responses. Instead of spinning up compute capacity on demand, we gather tasks and process them asynchronously, typically at a moment that suits our operational or business schedule (which really means whenever it's cheaper). It's pretty straightforward: queue some jobs, run them whenever, collect results later. As usual, the devil is in the details. AWS Batch can keep you away from the devil by offering a managed orchestration layer for containerized batch workloads, which is awesome! In fact, I already wrote a deep dive on AWS Batch.

In this article I'd like to examine an advanced AWS Batch reference architecture, so we can take the discussion beyond the feature list and into how those features play out in the real world. I'll show you multi-step pipelines, concurrency controls, HPC (high-performance computing) features, cost-saving strategies, job orchestration patterns, network isolation, advanced security measures, monitoring, logging, and all that stuff.

Conceptual Overview and Goals

Let’s begin with a mental model of what we’re trying to achieve. AWS Batch is a fully managed service that abstracts away the complexity of provisioning compute resources for asynchronous workloads. Jobs run in compute environments, are queued in job queues, and are configured using job definitions.

When a job is submitted, AWS Batch pulls it from the job queue, finds a suitable compute environment, provisions resources if needed, and schedules the job to run. Well, at least that's the happy path, which works for 80% of use cases. If you read Simple AWS I'm guessing you're the person they call on for the other 20%, so let's talk about the hard stuff.

Here’s how we're going to use those features in a real pipeline:

Data Ingestion: CSV files land in an S3 bucket, typically once per day.

Pre-Processing: A job cleans up and validates each CSV, storing intermediate outputs in another S3 location.

HPC Analysis: We run a multi-node parallel job that uses GPU-accelerated instances to perform data-intensive computations.

Post-Processing: A final job merges the HPC results, updates DynamoDB, and sends a Slack notification.

It sounds straightforward, but there are a lot of details in there: specialized HPC compute, custom AMIs (optional; I won't include them in this example, but you can add them), ephemeral storage for large scratch space, Spot Instances for cost savings, and job dependencies or external orchestration for multi-step logic. And of course we need logging and monitoring, and at least half-decent security.

I'll show you how each of these topics works, then I'll give you a CloudFormation template to show you how to deploy this.

Brief AWS Batch Recap

I won't bore you with the basics; my previous AWS Batch Deep Dive covers those (without boring you, hopefully!). Instead, let me set the stage for the rest of this article by recapping a few advanced features:

Multiple Compute Environments for Different Workloads

In large-scale setups, we often create multiple compute environments. One might be optimized for On-Demand instances for critical or latency-sensitive jobs, another might use Spot Instances for cost-savings on non-urgent tasks, and yet another might be Fargate-based for smaller ephemeral jobs that require minimal overhead. AWS Batch allows you to attach multiple compute environments to a single job queue, and the queue's ComputeEnvironmentOrder decides which environment is tried first.

AWS Batch provides an advanced scheduling policy called fair-share. This feature is valuable when multiple teams or users share a single queue. You can assign “share identifiers” to each user or workload, and AWS Batch ensures that resources are distributed fairly according to assigned weight factors (a lower weight factor gets a larger share). This prevents one user or workload from hogging the entire cluster, which is particularly useful in HPC or multi-tenant environments.
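To make the weight factors concrete, here's a minimal Python sketch. `build_fairshare_policy` returns keyword arguments shaped for the Batch `CreateSchedulingPolicy` API (`create_scheduling_policy` in boto3), and `idealized_shares` estimates how capacity splits when every share identifier has work queued. The team names and weights are hypothetical, and the split is a simplified model: the real scheduler also accounts for recent usage (share decay) and what's already running.

```python
from typing import Dict


def build_fairshare_policy(name: str, weights: Dict[str, float],
                           share_decay_seconds: int = 3600) -> dict:
    """Build kwargs for batch_client.create_scheduling_policy(**kwargs)."""
    return {
        "name": name,
        "fairsharePolicy": {
            # How long past usage keeps counting against a share identifier.
            "shareDecaySeconds": share_decay_seconds,
            "shareDistribution": [
                {"shareIdentifier": ident, "weightFactor": weight}
                for ident, weight in weights.items()
            ],
        },
    }


def idealized_shares(weights: Dict[str, float]) -> Dict[str, float]:
    """Fraction of capacity per identifier when all of them have queued work.

    Simplified model: capacity is proportional to 1 / weightFactor,
    so a lower weight factor gets a bigger slice.
    """
    inverse = {ident: 1.0 / w for ident, w in weights.items()}
    total = sum(inverse.values())
    return {ident: inv / total for ident, inv in inverse.items()}


if __name__ == "__main__":
    weights = {"analytics": 0.5, "reporting": 1.0}  # hypothetical teams
    print(build_fairshare_policy("daily-pipeline-fairshare", weights))
    print(idealized_shares(weights))  # analytics ~0.667, reporting ~0.333
```

You'd attach the resulting policy ARN to a job queue through its `schedulingPolicyArn` setting and tag each submission with a `shareIdentifier`.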

Multi-Node Parallel Jobs

Beyond standard single-container tasks, AWS Batch supports multi-node parallel jobs that can span multiple EC2 instances for tightly coupled, parallel workloads. This is often used for HPC tasks like MPI-based simulations or large-scale machine learning training. When you submit a multi-node parallel job, AWS Batch provisions a set of instances that function together as a mini-cluster, with one node acting as the main node. For high-bandwidth networking you'll usually place them in a cluster placement group, which you configure on the compute environment. We’ll see how that fits into a real scenario.
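Every node in a multi-node parallel job runs the same container, so the container has to work out its own role. AWS Batch injects environment variables for that, and this minimal Python sketch reads them. What each role then does (starting `sshd`, writing an MPI host file, calling `mpirun`) is up to your image and isn't shown; the IP address in the example is made up.

```python
import os
from dataclasses import dataclass
from typing import Mapping, Optional


@dataclass
class NodeInfo:
    index: int
    main_index: int
    num_nodes: int
    main_ip: Optional[str]  # only present on child nodes

    @property
    def is_main(self) -> bool:
        return self.index == self.main_index


def node_info(env: Mapping[str, str] = os.environ) -> NodeInfo:
    """Read the multi-node parallel variables AWS Batch sets in each container."""
    return NodeInfo(
        index=int(env["AWS_BATCH_JOB_NODE_INDEX"]),
        main_index=int(env["AWS_BATCH_JOB_MAIN_NODE_INDEX"]),
        num_nodes=int(env["AWS_BATCH_JOB_NUM_NODES"]),
        main_ip=env.get("AWS_BATCH_JOB_MAIN_NODE_PRIVATE_IPV4_ADDRESS"),
    )


if __name__ == "__main__":
    info = node_info({
        "AWS_BATCH_JOB_NODE_INDEX": "2",
        "AWS_BATCH_JOB_MAIN_NODE_INDEX": "0",
        "AWS_BATCH_JOB_NUM_NODES": "4",
        "AWS_BATCH_JOB_MAIN_NODE_PRIVATE_IPV4_ADDRESS": "10.0.1.15",  # example value
    })
    if info.is_main:
        print(f"main node: launch mpirun across {info.num_nodes} nodes")
    else:
        print(f"child node {info.index}: wait for the main node at {info.main_ip}")
```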

Extensive Retries and Dependencies

Jobs can depend on other jobs’ success. A single job might wait for multiple upstream jobs to complete before it starts. You can also configure complex retry behavior, distinguishing between certain failure states (for example you might retry if the job was interrupted by Spot reclamation, but not if the application itself returned an error that indicates a code bug). It's not as powerful as Step Functions (there's no branching), but it's more than enough for most linear pipelines.
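Here's that Spot-versus-bug distinction as a Python sketch. `SPOT_AWARE_RETRY` has the shape of the `retryStrategy` parameter with `evaluateOnExit` rules (usable when registering a job definition or submitting a job); the `Host EC2*` pattern targets the status reason Batch reports when the underlying instance goes away, such as a Spot reclamation. `decide` is a simplified local model for reasoning about your rules, not a reimplementation of the service: rules are checked in order, the first match wins, a trailing `*` is a prefix match, and if nothing matches the job is retried while attempts remain.

```python
from typing import Dict, Optional

# Retry infrastructure failures (e.g. Spot reclamation), fail fast on everything else.
SPOT_AWARE_RETRY = {
    "attempts": 3,
    "evaluateOnExit": [
        {"onStatusReason": "Host EC2*", "action": "RETRY"},
        {"onReason": "*", "action": "EXIT"},
    ],
}


def _matches(pattern: str, value: str) -> bool:
    # Batch patterns may end with a single "*", meaning "starts with".
    if pattern.endswith("*"):
        return value.startswith(pattern[:-1])
    return value == pattern


def decide(strategy: Dict, attempt: int, status_reason: str = "",
           reason: str = "", exit_code: Optional[int] = None) -> str:
    """Simplified model: "RETRY" or "EXIT" after failed attempt number `attempt` (1-based)."""
    if attempt >= strategy.get("attempts", 1):
        return "EXIT"  # no attempts left
    observed = {
        "onStatusReason": status_reason,
        "onReason": reason,
        "onExitCode": "" if exit_code is None else str(exit_code),
    }
    for rule in strategy.get("evaluateOnExit", []):
        # Every condition present in a rule must match for the rule to apply.
        conditions = [key for key in observed if key in rule]
        if all(_matches(rule[key], observed[key]) for key in conditions):
            return rule["action"].upper()
    return "RETRY"  # no rule matched: retried while attempts remain


if __name__ == "__main__":
    print(decide(SPOT_AWARE_RETRY, 1, status_reason="Host EC2 (instance i-0abc) terminated."))
    print(decide(SPOT_AWARE_RETRY, 1, status_reason="Essential container in task exited", exit_code=1))
```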

Batch Architecture Overview: Multi-Step Pipeline

For our scenario we'll imagine we have a multi-step data processing pipeline that handles daily data ingestion, transformation, optional HPC-style analytics, and final post-processing. Our pipeline is designed around an end-to-end flow:

Data Ingestion

A scheduled or external process uploads CSV files to an “incoming” Amazon S3 bucket.

Pre-Processing

A single-container job reads each CSV, performs data validation (removing incomplete rows or normalizing columns), and writes the cleaned result to an “intermediate” S3 bucket.

HPC Analysis

A multi-node job uses GPU-accelerated instances (for example p5 instances) to perform CPU- or GPU-intensive computations on the cleaned data. It might be training a machine learning model or running a simulation, generating partial outputs in either S3 or a shared file system like FSx for Lustre.

Post-Processing

Another job merges partial HPC results, updates a DynamoDB table or data warehouse, and sends a Slack notification that the day’s run is complete.

We’ll set up three compute environments:

High-Priority On-Demand (for tasks that must never be interrupted).

Spot Environment (for workloads that tolerate interruptions).

HPC Environment (GPU-optimized, possibly with multi-node parallel support).

We’ll also create two job queues:

Main Queue: Points to On-Demand first, then Spot as fallback. This handles all “normal” single-container tasks like pre-processing and post-processing.

HPC Queue: Points only to the HPC environment. This is for large HPC or GPU tasks.

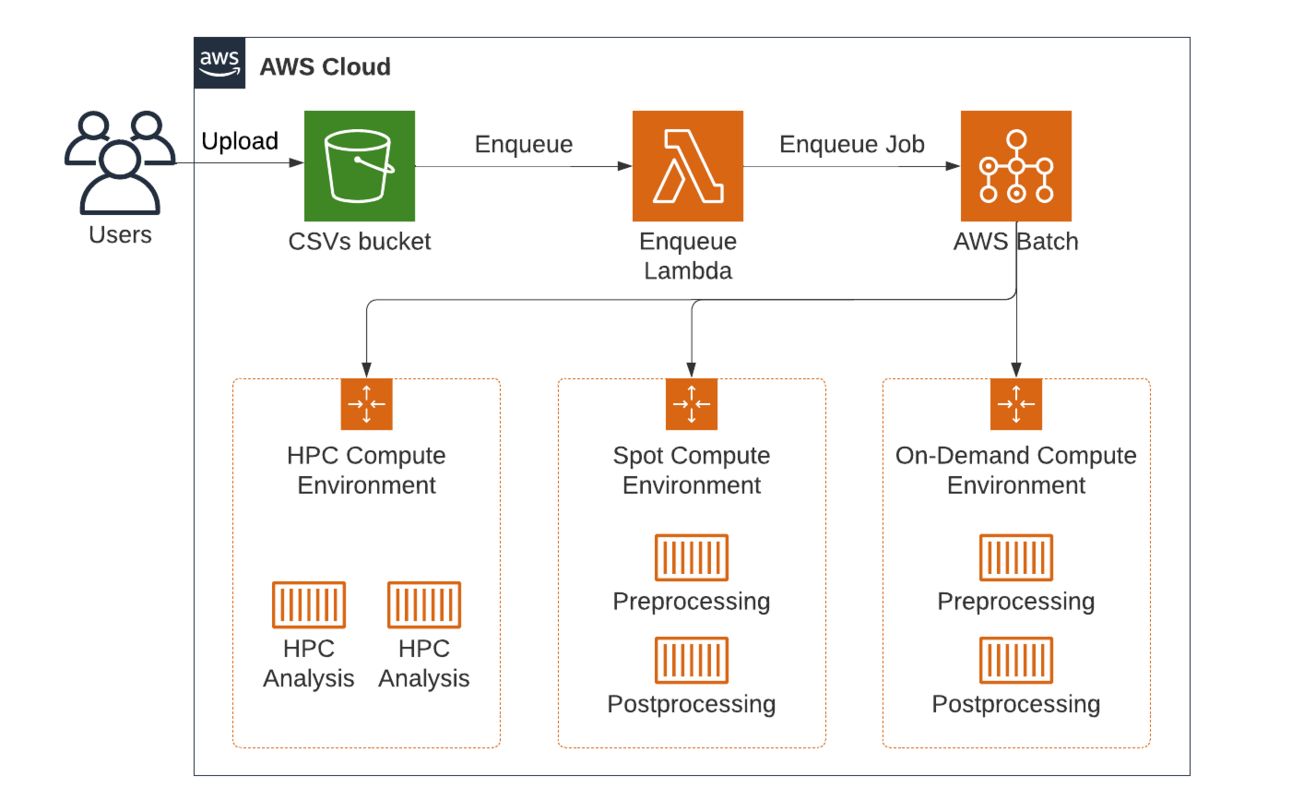

Architecture diagram of AWS Batch with 3 compute environments

Batch Compute Environments and Job Queues

Multiple Environments for Different Purposes

A single AWS Batch compute environment can handle many scenarios, but dividing them based on workload or cost strategy gives you better control. We'll use three compute environments:

High-Priority On-Demand Environment

This environment is used for time-sensitive or critical jobs. We set it up with On-Demand instances only, so tasks here never risk interruption from a Spot termination. We can use instance types with a good balance of CPU, memory, and network throughput, like the m and c families.

Spot-Optimized Environment

This environment is for workloads that tolerate interruptions and some wait times. We can use a wide range of instance types, all of them on Spot Instances. We also use the allocation strategy SPOT_CAPACITY_OPTIMIZED so AWS Batch tries to select instance pools less likely to be reclaimed. This environment is just plain cheaper.

HPC Environment with Multi-Node Support

Our HPC environment will use specialized instance types like p5 (with GPUs) for machine learning workloads. This is where our multi-node parallel jobs run, so large tasks that need multiple nodes can run seamlessly. There's no switch to flip on the environment itself, but multi-node parallel jobs do need EC2-based compute (Fargate doesn't support them). We could also include a cluster placement group in the configuration if we need low-latency networking, and Elastic Fabric Adapter (EFA) also helps with latency.

Each environment needs to be assigned an AWS Batch service role that allows the service to manage EC2 instances in our account. We also configure networking (subnets, security groups) carefully. Since we want all jobs to run in private subnets, the instances need a path to S3, ECR, and the other AWS services they call: either a NAT gateway (which the template below uses) or VPC endpoints.

Job Queues and Their Priorities

We'll create two job queues:

Main Queue: High priority. This queue references both the On-Demand environment (listed first, with Order: 1 in ComputeEnvironmentOrder) and the Spot environment as a secondary. When a job enters this queue, AWS Batch first attempts to run it on On-Demand. If that environment is fully utilized or hits its max vCPU limit, the job spills over to the Spot environment. There's no per-job “Spot-friendly” flag: any job in this queue can land on Spot, so jobs that must never be interrupted belong in a queue that maps only to On-Demand.

HPC Queue: Dedicated for multi-node GPU-based tasks. This queue is mapped only to the HPC environment.

At this stage we have a logical separation: critical jobs go to the main queue and can use On-Demand, while HPC tasks go to the HPC queue. Everything else that’s flexible about interruptions can run on Spot.
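A toy Python model of that spill-over: the queue walks its compute environments in ComputeEnvironmentOrder and places each job in the first one that still has vCPU headroom under its MaxvCpus. The real scheduler also weighs instance types, memory, and each job's requirements, so treat this as an illustration of ordering only. The names and vCPU limits mirror the Main Queue in the template below.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class ComputeEnv:
    name: str
    order: int
    max_vcpus: int
    used_vcpus: int = 0

    def has_room(self, vcpus: int) -> bool:
        return self.used_vcpus + vcpus <= self.max_vcpus


def place(job_vcpus: int, envs: List[ComputeEnv]) -> Optional[str]:
    """Return the first environment (by order) with room, or None (job stays RUNNABLE)."""
    for env in sorted(envs, key=lambda e: e.order):
        if env.has_room(job_vcpus):
            env.used_vcpus += job_vcpus
            return env.name
    return None


if __name__ == "__main__":
    main_queue = [ComputeEnv("OnDemandCE", 1, 32), ComputeEnv("SpotCE", 2, 64)]
    placements = [place(2, main_queue) for _ in range(20)]
    print(placements.count("OnDemandCE"), placements.count("SpotCE"))  # 16 4
```

Twenty 2-vCPU jobs fill the 32 On-Demand vCPUs with the first 16, and the remaining 4 land on Spot.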

Deploying the Environment with CloudFormation

Below is an example CloudFormation template that demonstrates many of these concepts. It creates:

A simple VPC with one public subnet (hosting a NAT Gateway) and one private subnet for the Batch instances.

IAM roles for AWS Batch.

Three Compute Environments: On-Demand, Spot, HPC.

Two Job Queues: Main and HPC.

Three sample Job Definitions (pre-processing, HPC, post-processing) with placeholders for container images and commands.

This template is intended as a starting point, so you might add details (like cluster placement groups or EFA) later. It follows the general shape of a production setup, but it's condensed for readability; for example, the job role uses broad managed policies that you'd scope down. In production you’d also likely keep values like container repository URIs in Parameter Store instead of relying on parameter defaults.

```yaml
AWSTemplateFormatVersion: "2010-09-09"
Description: "AWS Batch Reference Architecture with On-Demand, Spot, HPC Environments"

Parameters:
  VpcCidr:
    Type: String
    Default: "10.0.0.0/16"
    Description: "CIDR block for the VPC"
  PreprocessorImage:
    Type: String
    Default: "123456789012.dkr.ecr.us-east-1.amazonaws.com/data-preprocessor:latest"
  HpcAnalysisImage:
    Type: String
    Default: "123456789012.dkr.ecr.us-east-1.amazonaws.com/hpc-analysis:gpu-latest"
  PostprocessorImage:
    Type: String
    Default: "123456789012.dkr.ecr.us-east-1.amazonaws.com/data-postprocessor:latest"

Resources:
  VPC:
    Type: AWS::EC2::VPC
    Properties:
      CidrBlock: !Ref VpcCidr
      EnableDnsSupport: true
      EnableDnsHostnames: true
      Tags:
        - Key: Name
          Value: "BatchRefArchVPC"

  InternetGateway:
    Type: AWS::EC2::InternetGateway

  AttachInternetGateway:
    Type: AWS::EC2::VPCGatewayAttachment
    Properties:
      VpcId: !Ref VPC
      InternetGatewayId: !Ref InternetGateway

  PublicSubnet1:
    Type: AWS::EC2::Subnet
    Properties:
      VpcId: !Ref VPC
      CidrBlock: "10.0.0.0/24"
      AvailabilityZone: !Select [0, !GetAZs ""]
      MapPublicIpOnLaunch: true
      Tags:
        - Key: Name
          Value: "BatchRefArchPublicSubnet1"

  PublicRouteTable:
    Type: AWS::EC2::RouteTable
    Properties:
      VpcId: !Ref VPC

  PublicRoute:
    Type: AWS::EC2::Route
    DependsOn: AttachInternetGateway # the gateway must be attached before we route to it
    Properties:
      RouteTableId: !Ref PublicRouteTable
      DestinationCidrBlock: "0.0.0.0/0"
      GatewayId: !Ref InternetGateway

  PublicSubnet1RouteTableAssoc:
    Type: AWS::EC2::SubnetRouteTableAssociation
    Properties:
      SubnetId: !Ref PublicSubnet1
      RouteTableId: !Ref PublicRouteTable

  NatEIP:
    Type: AWS::EC2::EIP
    DependsOn: AttachInternetGateway
    Properties:
      Domain: vpc

  NatGateway:
    Type: AWS::EC2::NatGateway
    Properties:
      AllocationId: !GetAtt NatEIP.AllocationId
      SubnetId: !Ref PublicSubnet1

  PrivateSubnet1:
    Type: AWS::EC2::Subnet
    Properties:
      VpcId: !Ref VPC
      CidrBlock: "10.0.1.0/24"
      AvailabilityZone: !Select [1, !GetAZs ""]
      MapPublicIpOnLaunch: false
      Tags:
        - Key: Name
          Value: "BatchRefArchPrivateSubnet1"

  PrivateRouteTable:
    Type: AWS::EC2::RouteTable
    Properties:
      VpcId: !Ref VPC

  PrivateSubnet1RouteTableAssoc:
    Type: AWS::EC2::SubnetRouteTableAssociation
    Properties:
      SubnetId: !Ref PrivateSubnet1
      RouteTableId: !Ref PrivateRouteTable

  PrivateRoute:
    Type: AWS::EC2::Route
    Properties:
      RouteTableId: !Ref PrivateRouteTable
      DestinationCidrBlock: "0.0.0.0/0"
      NatGatewayId: !Ref NatGateway

  BatchSecurityGroup:
    Type: AWS::EC2::SecurityGroup
    Properties:
      GroupDescription: "Security Group for AWS Batch Instances"
      VpcId: !Ref VPC
      SecurityGroupIngress:
        - IpProtocol: "-1"
          CidrIp: !Ref VpcCidr
      Tags:
        - Key: Name
          Value: "BatchRefArchSG"

  BatchServiceRole:
    Type: AWS::IAM::Role
    Properties:
      AssumeRolePolicyDocument:
        Version: "2012-10-17"
        Statement:
          - Effect: Allow
            Principal:
              Service: "batch.amazonaws.com"
            Action: "sts:AssumeRole"
      ManagedPolicyArns:
        - "arn:aws:iam::aws:policy/service-role/AWSBatchServiceRole"
      Tags:
        - Key: Name
          Value: "BatchServiceRole"

  BatchInstanceRole:
    Type: AWS::IAM::Role
    Properties:
      AssumeRolePolicyDocument:
        Version: "2012-10-17"
        Statement:
          - Effect: Allow
            Principal:
              Service: "ec2.amazonaws.com"
            Action: "sts:AssumeRole"
      ManagedPolicyArns:
        # Lets the ECS agent register the instance with the Batch-managed ECS cluster
        - "arn:aws:iam::aws:policy/service-role/AmazonEC2ContainerServiceforEC2Role"
        - "arn:aws:iam::aws:policy/AmazonEC2ContainerRegistryReadOnly"
        - "arn:aws:iam::aws:policy/CloudWatchAgentServerPolicy"
      Path: "/"
      Tags:
        - Key: Name
          Value: "BatchInstanceRole"

  BatchInstanceProfile:
    Type: AWS::IAM::InstanceProfile
    Properties:
      Roles:
        - !Ref BatchInstanceRole
      InstanceProfileName: "BatchInstanceProfile"

  BatchJobRole:
    Type: AWS::IAM::Role
    Properties:
      AssumeRolePolicyDocument:
        Version: "2012-10-17"
        Statement:
          - Effect: Allow
            Principal:
              Service: "ecs-tasks.amazonaws.com"
            Action: "sts:AssumeRole"
      Path: "/"
      ManagedPolicyArns:
        # Broad policies for brevity; scope them to your buckets and table in production
        - "arn:aws:iam::aws:policy/AmazonS3FullAccess"
        - "arn:aws:iam::aws:policy/AmazonDynamoDBFullAccess"
      Tags:
        - Key: Name
          Value: "BatchJobRole"

  SpotFleetRole:
    Type: AWS::IAM::Role
    Properties:
      AssumeRolePolicyDocument:
        Version: "2012-10-17"
        Statement:
          - Effect: Allow
            Principal:
              Service: "spotfleet.amazonaws.com"
            Action: "sts:AssumeRole"
      ManagedPolicyArns:
        - "arn:aws:iam::aws:policy/service-role/AmazonEC2SpotFleetTaggingRole"

  OnDemandComputeEnv:
    Type: AWS::Batch::ComputeEnvironment
    Properties:
      ComputeEnvironmentName: "OnDemandCE"
      Type: MANAGED
      ServiceRole: !GetAtt BatchServiceRole.Arn
      ComputeResources:
        Type: EC2
        AllocationStrategy: BEST_FIT_PROGRESSIVE
        MinvCpus: 0
        MaxvCpus: 32
        DesiredvCpus: 0
        InstanceTypes:
          - "m5.xlarge"
          - "c5.xlarge"
        Subnets:
          - !Ref PrivateSubnet1
        SecurityGroupIds:
          - !Ref BatchSecurityGroup
        InstanceRole: !GetAtt BatchInstanceProfile.Arn
        Tags:
          Name: "OnDemandCEInstances"
      State: ENABLED

  SpotComputeEnv:
    Type: AWS::Batch::ComputeEnvironment
    Properties:
      ComputeEnvironmentName: "SpotCE"
      Type: MANAGED
      ServiceRole: !GetAtt BatchServiceRole.Arn
      ComputeResources:
        Type: SPOT # SPOT (not EC2) is what makes this environment launch Spot Instances
        AllocationStrategy: SPOT_CAPACITY_OPTIMIZED
        MinvCpus: 0
        MaxvCpus: 64
        DesiredvCpus: 0
        InstanceTypes:
          - "m5.large"
          - "c5.large"
          - "r5.large"
        Subnets:
          - !Ref PrivateSubnet1
        SecurityGroupIds:
          - !Ref BatchSecurityGroup
        InstanceRole: !GetAtt BatchInstanceProfile.Arn
        SpotIamFleetRole: !GetAtt SpotFleetRole.Arn
        Tags:
          Name: "SpotCEInstances"
      State: ENABLED

  HPCComputeEnv:
    Type: AWS::Batch::ComputeEnvironment
    Properties:
      ComputeEnvironmentName: "HPCCE"
      Type: MANAGED
      ServiceRole: !GetAtt BatchServiceRole.Arn
      ComputeResources:
        Type: EC2
        AllocationStrategy: BEST_FIT_PROGRESSIVE
        MinvCpus: 0
        MaxvCpus: 768 # 4 nodes x 192 vCPUs per p5.48xlarge
        DesiredvCpus: 0
        InstanceTypes:
          - "p5.48xlarge"
        Subnets:
          - !Ref PrivateSubnet1
        SecurityGroupIds:
          - !Ref BatchSecurityGroup
        InstanceRole: !GetAtt BatchInstanceProfile.Arn
        Tags:
          Name: "HPCCEInstances"
      State: ENABLED

  MainJobQueue:
    Type: AWS::Batch::JobQueue
    Properties:
      JobQueueName: "MainQueue"
      Priority: 10
      ComputeEnvironmentOrder:
        - Order: 1
          ComputeEnvironment: !Ref OnDemandComputeEnv
        - Order: 2
          ComputeEnvironment: !Ref SpotComputeEnv
      State: ENABLED

  HPCJobQueue:
    Type: AWS::Batch::JobQueue
    Properties:
      JobQueueName: "HPCQueue"
      Priority: 5
      ComputeEnvironmentOrder:
        - Order: 1
          ComputeEnvironment: !Ref HPCComputeEnv
      State: ENABLED

  PreprocessingJobDef:
    Type: AWS::Batch::JobDefinition
    Properties:
      JobDefinitionName: "preprocessing-job-def"
      Type: "container"
      ContainerProperties:
        Image: !Ref PreprocessorImage
        Vcpus: 2
        Memory: 4096
        Command:
          - "python"
          - "/app/preprocess.py"
        JobRoleArn: !GetAtt BatchJobRole.Arn # !Ref would return the role name, not the ARN
      RetryStrategy:
        Attempts: 3
      Timeout:
        AttemptDurationSeconds: 3600

  HPCAnalysisJobDef:
    Type: AWS::Batch::JobDefinition
    Properties:
      JobDefinitionName: "hpc-analysis-job-def"
      Type: "multinode"
      NodeProperties:
        NumNodes: 4
        MainNode: 0
        NodeRangeProperties:
          - TargetNodes: "0:3"
            Container:
              Image: !Ref HpcAnalysisImage
              Vcpus: 32
              Memory: 122880
              ResourceRequirements:
                - Type: GPU
                  Value: "8" # request the 8 GPUs on a p5.48xlarge so Batch assigns them
              # Runs on every node; the script is expected to start mpirun on the main node only
              Command:
                - "/app/hpc_analysis.sh"
              JobRoleArn: !GetAtt BatchJobRole.Arn
      RetryStrategy:
        Attempts: 2
      Timeout:
        AttemptDurationSeconds: 86400

  PostprocessingJobDef:
    Type: AWS::Batch::JobDefinition
    Properties:
      JobDefinitionName: "postprocessing-job-def"
      Type: "container"
      ContainerProperties:
        Image: !Ref PostprocessorImage
        Vcpus: 2
        Memory: 4096
        Command:
          - "python"
          - "/app/postprocess.py"
        JobRoleArn: !GetAtt BatchJobRole.Arn
      RetryStrategy:
        Attempts: 1
      Timeout:
        AttemptDurationSeconds: 7200

Outputs:
  MainQueueName:
    Description: "ARN of the main AWS Batch job queue"
    Value: !Ref MainJobQueue
  HPCQueueName:
    Description: "ARN of the HPC AWS Batch job queue"
    Value: !Ref HPCJobQueue
```

Template Highlights

Network: Creates a VPC with a public subnet (for NAT) and a private subnet (where our Batch instances run).

Security Groups: Opens all traffic within the VPC’s CIDR. For HPC or debugging, you might refine these rules.

IAM Roles:

BatchServiceRole is for the AWS Batch service to manage resources.

BatchInstanceRole is attached to the EC2 instances for ECS agent usage, logging, and ECR access.

BatchJobRole is for the containers themselves, granting them (for example) S3 and DynamoDB permissions.

Compute Environments:

OnDemandComputeEnv with MaxvCpus: 32 for critical jobs.

SpotComputeEnv with MaxvCpus: 64 for cheaper runs.

HPCComputeEnv to handle multi-node GPU-based tasks on p5.48xlarge instances.

Job Queues:

MainQueue references On-Demand first, then Spot.

HPCQueue references the HPC environment exclusively.

Job Definitions: Pre-Processing, HPC Analysis (multi-node), and Post-Processing. Each is minimal in this template, but you can tweak ephemeral storage, environment variables, etc.

This should give you a functional environment for your multi-step pipeline. Let's see how we use it.

Submitting and Orchestrating Jobs

Single-Queue Approach with Dependencies

You can orchestrate these tasks entirely within AWS Batch by chaining job submissions:

Submit Preprocessing Job (to MainQueue):

```bash
aws batch submit-job \
  --job-name "my-preproc-job" \
  --job-queue "MainQueue" \
  --job-definition "preprocessing-job-def"
```

Suppose AWS Batch returns jobId=abc123.

Submit HPC Job (to HPCQueue), dependent on the above:

```bash
aws batch submit-job \
  --job-name "my-hpc-job" \
  --job-queue "HPCQueue" \
  --job-definition "hpc-analysis-job-def" \
  --depends-on jobId=abc123
```

Now the HPC job won’t start until the pre-processing job finishes successfully.

Submit Post-Processing Job (to MainQueue) after HPC completes:

```bash
aws batch submit-job \
  --job-name "my-postproc-job" \
  --job-queue "MainQueue" \
  --job-definition "postprocessing-job-def" \
  --depends-on jobId=def456
```

Where def456 is the HPC job’s ID.

This simple chaining of jobs is enough for linear sequences. If the HPC job fails, the post-processing job is never triggered. You can combine that with the built-in retry logic to handle certain failures automatically.
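The same chain as a Python sketch, assuming boto3 and the queue and job definition names from the template. Passing each returned `jobId` into the next call's `dependsOn` removes the copy-paste step. `submit_pipeline` accepts any client with a boto3-style `submit_job` method, so in production you'd pass `boto3.client("batch")`. The `RUN_DATE` environment variable is a hypothetical convention for telling each container which day to process.

```python
from typing import Dict


def submit_pipeline(batch_client, run_date: str) -> Dict[str, str]:
    """Submit pre-processing -> HPC -> post-processing with dependencies; return job IDs."""
    env = [{"name": "RUN_DATE", "value": run_date}]  # hypothetical variable

    pre = batch_client.submit_job(
        jobName=f"preproc-{run_date}",
        jobQueue="MainQueue",
        jobDefinition="preprocessing-job-def",
        containerOverrides={"environment": env},
    )
    # Multi-node jobs take nodeOverrides instead of containerOverrides, so none here.
    hpc = batch_client.submit_job(
        jobName=f"hpc-{run_date}",
        jobQueue="HPCQueue",
        jobDefinition="hpc-analysis-job-def",
        dependsOn=[{"jobId": pre["jobId"]}],
    )
    post = batch_client.submit_job(
        jobName=f"postproc-{run_date}",
        jobQueue="MainQueue",
        jobDefinition="postprocessing-job-def",
        dependsOn=[{"jobId": hpc["jobId"]}],
        containerOverrides={"environment": env},
    )
    return {"preprocess": pre["jobId"], "hpc": hpc["jobId"], "postprocess": post["jobId"]}
```

Because all three jobs are submitted up front, Batch holds the later ones in PENDING until their dependencies succeed.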

AWS Step Functions for Advanced Logic

If you need branching logic (e.g. if HPC fails you want to run a fallback job on On-Demand, or if HPC logic splits into multiple parallel tasks), it’s usually easier to manage all that in AWS Step Functions (this is basically moving from a choreographed transaction to an orchestrated transaction, see my distributed transactions article if you don't remember what those terms mean). You can define a state machine with steps like:

Step 1: Submit the pre-processing job, wait for success.

Step 2: Submit HPC, wait for success.

Step 3: Submit post-processing, wait for success.

Step 4: If it fails, do X. If it succeeds, do Y.

Step Functions can also handle partial failures or repeated HPC interruptions from Spot by re-submitting the job or switching job queues automatically. It can also notify you, update status somewhere, and anything else you need.
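If you go this route, the state machine could look roughly like this sketch: Amazon States Language built as a Python dict, so it can be serialized with `json.dumps` and passed to Step Functions (for example through boto3's `create_state_machine`). It uses the `arn:aws:states:::batch:submitJob.sync` integration, which waits for each Batch job to finish. The single `PipelineFailed` state stands in for "do X", and the extra retry on the HPC step is an illustrative choice, not a requirement.

```python
import json

SUBMIT_SYNC = "arn:aws:states:::batch:submitJob.sync"


def batch_step(name: str, queue: str, job_def: str, next_state: str) -> dict:
    """A Task state that submits a Batch job and waits for it to finish."""
    return {
        "Type": "Task",
        "Resource": SUBMIT_SYNC,
        "Parameters": {"JobName": name, "JobQueue": queue, "JobDefinition": job_def},
        # Any failure that survives the Retry rules routes to the failure state.
        "Catch": [{"ErrorEquals": ["States.ALL"], "Next": "PipelineFailed"}],
        "Next": next_state,
    }


def pipeline_definition() -> dict:
    states = {
        "Preprocess": batch_step("preproc", "MainQueue", "preprocessing-job-def", "HpcAnalysis"),
        "HpcAnalysis": batch_step("hpc", "HPCQueue", "hpc-analysis-job-def", "Postprocess"),
        "Postprocess": batch_step("postproc", "MainQueue", "postprocessing-job-def", "Done"),
        "Done": {"Type": "Succeed"},
        "PipelineFailed": {"Type": "Fail", "Error": "PipelineFailed",
                           "Cause": "A Batch step failed; see the execution history."},
    }
    # Give the HPC step one more try at the Step Functions level (e.g. after interruptions).
    states["HpcAnalysis"]["Retry"] = [
        {"ErrorEquals": ["States.TaskFailed"], "IntervalSeconds": 60,
         "MaxAttempts": 1, "BackoffRate": 2.0}
    ]
    return {"Comment": "Daily batch pipeline (sketch)", "StartAt": "Preprocess", "States": states}


if __name__ == "__main__":
    print(json.dumps(pipeline_definition(), indent=2))
```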

Cost Optimization Strategies

Use Spot for Non-Urgent Steps

The CloudFormation template sets Order: 1 for the On-Demand environment in the Main Queue, which means the job first tries On-Demand. If your job is truly flexible, you can reverse that priority or skip On-Demand entirely to keep costs down. HPC tasks can also rely on Spot if they can handle frequent interruptions; just make sure you implement checkpointing.

Diversify Instances

In the Spot environment I included c5.large, m5.large, and r5.large instance types. That’s just an example. The more instance types you allow, the more capacity pools you can tap into. This lowers the chance of Spot interruption.

Right-Sizing

Monitor how much CPU and memory your containers actually use (via CloudWatch). If they rarely exceed 50% usage, you might reduce requested resources in the job definition, letting AWS Batch fit more containers per instance and lower overall cost.
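A back-of-the-envelope Python helper for seeing what right-sizing buys you: the number of jobs per instance is limited by whichever of vCPU or memory runs out first. The 1024 MiB held back for the OS and ECS agent is an illustrative guess, not an AWS figure; check the memory your instances actually register.

```python
def containers_per_instance(instance_vcpus: int, instance_mem_mib: int,
                            job_vcpus: int, job_mem_mib: int,
                            reserved_mem_mib: int = 0) -> int:
    """How many identical jobs fit on one instance; the scarcer resource wins."""
    by_cpu = instance_vcpus // job_vcpus
    by_mem = (instance_mem_mib - reserved_mem_mib) // job_mem_mib
    return max(0, min(by_cpu, by_mem))


if __name__ == "__main__":
    # m5.xlarge (4 vCPUs, 16 GiB) running the template's pre-processing job (2 vCPUs, 4096 MiB)
    print(containers_per_instance(4, 16384, 2, 4096, reserved_mem_mib=1024))  # 2
    # Same instance after halving the request for a job that uses under 50%
    print(containers_per_instance(4, 16384, 1, 2048, reserved_mem_mib=1024))  # 4
```

In this example the job is vCPU-bound, so halving the request doubles the density.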

HPC vs. On-Demand

Some HPC tasks are so time-critical that you can’t risk Spot interruptions. In that case, HPC might run solely on On-Demand GPU instances. In other scenarios (like a nightly HPC job that can rerun), Spot-based HPC can offer huge savings. Evaluate your job’s tolerance for restarts or delays.
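Some quick arithmetic helps with that evaluation. This Python sketch estimates the cost of one HPC run using the $98.32/hour On-Demand p5.48xlarge rate this article quotes; the 3-hour run, the 60% Spot discount, and the single full rerun are hypothetical inputs, so plug in current prices for your region.

```python
def run_cost(hourly_rate: float, nodes: int, hours: float,
             spot_discount: float = 0.0, reruns: int = 0) -> float:
    """Estimated cost of one HPC run.

    spot_discount: fraction saved versus On-Demand (0.0 means On-Demand price).
    reruns: extra full runs caused by interruptions (pessimistic: no checkpointing).
    """
    per_run = hourly_rate * (1.0 - spot_discount) * nodes * hours
    return per_run * (1 + reruns)


if __name__ == "__main__":
    print(round(run_cost(98.32, nodes=4, hours=3), 2))  # 1179.84
    print(round(run_cost(98.32, nodes=4, hours=3, spot_discount=0.6, reruns=1), 2))  # 943.87
```

The rerun term models the worst case without checkpointing: one full rerun doubles the Spot cost, so Spot still comes out ahead whenever the discount is above 50%.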

Storage, Custom AMIs, and Network Considerations

Ephemeral Storage

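If your HPC or pre-processing job expands data temporarily, you'll need scratch space. For jobs running on Fargate, you can set ephemeralStorage.sizeInGiB in the job definition. On EC2-based compute environments like the ones in our template, scratch space comes from the instance's own volumes instead, so you'd enlarge them through a launch template or pick instance types with local NVMe storage. Keep in mind this storage is local to a single container or instance. For bigger HPC tasks that rely on shared data you might want to use FSx for Lustre or EFS, mapped as volumes in your container.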
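For Fargate jobs, the scratch space request is a single field in the job definition. This Python sketch builds keyword arguments for boto3's `register_job_definition`; it assumes a Fargate compute environment (the template in this article only creates EC2 ones) and an execution role ARN you supply, and the 1 vCPU / 4096 MiB sizing is arbitrary. Fargate accepts between 21 and 200 GiB for this setting.

```python
def fargate_scratch_job_def(name: str, image: str, execution_role_arn: str,
                            scratch_gib: int = 100) -> dict:
    """kwargs for batch_client.register_job_definition(**kwargs) with extra scratch space."""
    if not 21 <= scratch_gib <= 200:
        raise ValueError("Fargate ephemeral storage must be between 21 and 200 GiB")
    return {
        "jobDefinitionName": name,
        "type": "container",
        "platformCapabilities": ["FARGATE"],
        "containerProperties": {
            "image": image,
            "executionRoleArn": execution_role_arn,  # required for Fargate jobs
            "resourceRequirements": [
                {"type": "VCPU", "value": "1"},
                {"type": "MEMORY", "value": "4096"},
            ],
            "ephemeralStorage": {"sizeInGiB": scratch_gib},
            # Private subnets then need a NAT gateway or VPC endpoints to pull images.
            "networkConfiguration": {"assignPublicIp": "DISABLED"},
        },
    }
```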

Host Volumes and Custom AMIs

If you have large Docker images (like a big HPC environment with CUDA libraries), it might be worth building a custom AMI that pre-pulls these images or pre-installs drivers. You’ll save container startup time, particularly if your job definitions request ephemeral volumes or if you run many short HPC jobs in bursts.

Network

In the template I put all compute in a single private subnet. For HPC jobs, you might want to create a separate HPC subnet or use a cluster placement group, which you set through the PlacementGroup property of the HPC environment’s ComputeResources block. If you need a lot more network throughput, you can use Elastic Fabric Adapter (EFA). You set that up via a Launch Template whose network interface uses the efa interface type, together with an instance type that supports EFA (p5 does).
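A sketch of the pieces involved, written in Python as boto3-style request payloads: a cluster placement group, a launch template whose primary network interface is an EFA interface, and the `placementGroup` and `launchTemplate` fields you'd merge into the HPC environment's `computeResources`. All names are hypothetical. Two things to verify against the EC2 and AWS Batch EFA documentation before relying on this: instance types with several network cards (p5 is one) need an EFA interface per card to reach full bandwidth, and EFA requires a security group that allows all traffic to and from itself.

```python
def hpc_network_requests(prefix: str, security_group_id: str) -> dict:
    """Request payloads for a placement group, an EFA launch template, and the
    matching Batch computeResources fields. Names are hypothetical."""
    pg_name = f"{prefix}-cluster-pg"
    lt_name = f"{prefix}-efa-lt"
    return {
        # ec2.create_placement_group(**payload)
        "placement_group": {"GroupName": pg_name, "Strategy": "cluster"},
        # ec2.create_launch_template(**payload)
        "launch_template": {
            "LaunchTemplateName": lt_name,
            "LaunchTemplateData": {
                "NetworkInterfaces": [{
                    "DeviceIndex": 0,
                    "InterfaceType": "efa",
                    "Groups": [security_group_id],  # must allow all traffic to/from itself
                }],
            },
        },
        # merge into the HPC compute environment's computeResources
        "compute_resources_patch": {
            "placementGroup": pg_name,
            "launchTemplate": {"launchTemplateName": lt_name, "version": "$Latest"},
        },
    }
```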

Monitoring, Logging, and Debugging

CloudWatch Metrics

AWS Batch publishes metrics like RunningJobs, PendingJobs, and DesiredvCpus for each compute environment. Keep an eye on them. If you see a large backlog of PendingJobs, it might be a resource shortage or a misconfiguration in your environment. If HPC is pegged at its max vCPU, consider raising the limit or splitting tasks differently.

CloudWatch Logs

Each container’s stdout/stderr is streamed to CloudWatch Logs (by default to the /aws/batch/job log group). You can view logs by job ID, and for multi-node parallel jobs, each node gets its own log stream. This is often the first place to check if something fails unexpectedly. If you rely on ephemeral storage or host volumes, logs might reveal out-of-disk issues or insufficient scratch space.

Alarms and Automation

You can set CloudWatch Alarms for job failures, job queue length, or HPC environment capacity. A simple approach is to alarm if the number of jobs in a queue remains above a certain threshold for X minutes. Another approach is to alarm on repeated HPC job failures, which might indicate Spot interruptions or a code bug. Combine these alarms with Amazon SNS or Slack notifications so you know quickly if your pipeline is stuck.
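Job failures are also easy to catch as events rather than metrics. One option, sketched here in Python, is an EventBridge rule that matches `Batch Job State Change` events with status `FAILED`, plus a small formatter for the alert text. You'd pass the pattern to EventBridge (for example boto3's `events.put_rule(Name=..., EventPattern=...)`) and target an SNS topic or a Lambda function; the Slack delivery itself isn't shown, and the queue ARN in the usage is a placeholder.

```python
import json
from typing import Dict, List


def failed_job_pattern(job_queue_arns: List[str]) -> str:
    """EventBridge event pattern for Batch jobs that end in FAILED on the given queues."""
    return json.dumps({
        "source": ["aws.batch"],
        "detail-type": ["Batch Job State Change"],
        "detail": {"status": ["FAILED"], "jobQueue": job_queue_arns},
    })


def failure_message(event: Dict) -> str:
    """Turn a Batch Job State Change event into a one-line alert."""
    d = event["detail"]
    queue = d.get("jobQueue", "").split("/")[-1]  # queue name from its ARN
    reason = d.get("statusReason", "no reason given")
    return f"Batch job {d['jobName']} ({d['jobId']}) on {queue} {d['status']}: {reason}"
```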

Putting It All Together: An Example Workflow

This is the complete flow for our example:

CSV Upload

A daily script or external tool puts a CSV file into s3://my-ingest-bucket/data/YYYYMMDD/input.csv. That triggers a small AWS Lambda function that submits a preprocessing-job-def job to MainQueue (the SDK equivalent of aws batch submit-job).

Pre-Processing

The container pulls input.csv, processes it, and writes cleaned.csv to s3://my-intermediate-bucket/data/YYYYMMDD/cleaned.csv. The job finishes, returning success. Let's assume its jobId is abc123.

HPC Analysis

We immediately submit the HPC job with depends-on jobId=abc123. That job runs in HPCQueue, which spawns up to 4 p5.48xlarge instances (note: THAT IS A LOT OF MONEY. $98.32/hour per On-Demand instance at the time of writing, to be exact). The container script uses MPI to coordinate across nodes, reading from my-intermediate-bucket, generating partial results in my-hpc-outputs-bucket, and concluding after some hours (note: Now you're poor. Seriously, it's a lot of money). The HPC environment tears down the GPU instances when the job finishes, saving you from AWS-induced homelessness.

Post-Processing

When the HPC job succeeds, we run a job in MainQueue with depends-on jobId=def456 (the HPC job’s ID). It merges partial HPC outputs, updates a DynamoDB table with final metrics, and notifies Slack. If this job fails or times out, we get a CloudWatch Alarm. If it completes, we’re done for the day.

Cleanup and Monitoring

The environments scale back to 0. Logs and metrics are available in CloudWatch and CloudWatch Logs.
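The Lambda function from the first step could look roughly like this Python sketch. It assumes the bucket sends S3 `ObjectCreated` notifications to the function, that keys follow the `data/YYYYMMDD/input.csv` layout above, and that the container reads its input location from an `INPUT_URI` environment variable (a hypothetical convention). `batch_client` is injectable so the function can be exercised without AWS; in Lambda it falls back to `boto3.client("batch")`.

```python
import re
from urllib.parse import unquote_plus

# Matches keys like data/20240131/input.csv (the layout used in this walkthrough).
KEY_PATTERN = re.compile(r"^data/(\d{8})/input\.csv$")


def handler(event, context, batch_client=None):
    """Lambda entry point for S3 ObjectCreated notifications."""
    if batch_client is None:
        import boto3  # bundled with the Lambda Python runtime
        batch_client = boto3.client("batch")

    submitted = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        key = unquote_plus(record["s3"]["object"]["key"])  # keys arrive URL-encoded
        match = KEY_PATTERN.match(key)
        if not match:
            continue  # ignore objects outside the expected layout
        run_date = match.group(1)
        resp = batch_client.submit_job(
            jobName=f"preproc-{run_date}",
            jobQueue="MainQueue",
            jobDefinition="preprocessing-job-def",
            containerOverrides={"environment": [
                {"name": "INPUT_URI", "value": f"s3://{bucket}/{key}"},  # hypothetical variable
            ]},
        )
        submitted.append(resp["jobId"])
    return {"submitted": submitted}
```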

Operational Tips and Troubleshooting for Batch

One of the biggest differences between a proof-of-concept environment and a production one is how you handle the inevitable problems:

Quotas and Limits

New AWS accounts usually have low default quotas, like 256 or 512 vCPUs for On-Demand or Spot in a region, and GPU families such as P instances have their own separate quota, which is often very low on new accounts. Four p5.48xlarge nodes at 192 vCPUs each need 768 vCPUs, so an HPC job like ours will hit those limits right away. Monitor for jobs stuck in RUNNABLE state. If you see that, check your account’s service quotas.
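To spot the stuck-in-RUNNABLE symptom programmatically, you can page through `ListJobs` filtered by status. This is a minimal Python sketch that takes any boto3-style Batch client (for example `boto3.client("batch")`); wiring it into a scheduled check or a custom CloudWatch metric is left out.

```python
def runnable_jobs(batch_client, queue: str) -> int:
    """Count jobs in RUNNABLE state on a queue, following nextToken pagination."""
    count, token = 0, None
    while True:
        kwargs = {"jobQueue": queue, "jobStatus": "RUNNABLE"}
        if token:
            kwargs["nextToken"] = token
        page = batch_client.list_jobs(**kwargs)
        count += len(page.get("jobSummaryList", []))
        token = page.get("nextToken")
        if not token:
            return count
```

A count that stays above zero for a long time while the compute environment isn't scaling is a strong hint that you've hit a quota or an instance-type availability problem.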

Network Configuration

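If your Batch jobs require frequent reads from S3, add an S3 gateway VPC endpoint to the private subnets' route tables so that traffic never goes through a NAT gateway (gateway endpoints attach to route tables rather than subnets, and they avoid NAT data-processing charges), or consider FSx for Lustre as a caching layer.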
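The request for an S3 gateway endpoint is small. This Python sketch returns keyword arguments for boto3's `ec2.create_vpc_endpoint`; the VPC and route table IDs are placeholders, and in the CloudFormation template you'd express the same thing as an `AWS::EC2::VPCEndpoint` resource pointing at `PrivateRouteTable`.

```python
from typing import List


def s3_gateway_endpoint_request(vpc_id: str, region: str, route_table_ids: List[str]) -> dict:
    """kwargs for ec2.create_vpc_endpoint: S3 gateway endpoints attach to route tables."""
    return {
        "VpcEndpointType": "Gateway",
        "VpcId": vpc_id,
        "ServiceName": f"com.amazonaws.{region}.s3",
        "RouteTableIds": route_table_ids,
    }
```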

AMIs and ECS Agent Issues

If you're using custom AMIs, always confirm that the ECS agent is installed and that it registers to the cluster. Many times a job doesn’t start because the instance never joined the ECS cluster due to an agent misconfiguration. Double-check your user data scripts or Launch Template parameters.

Long-Running Jobs and Timeouts

If a job is supposed to run for many hours, set the job definition’s AttemptDurationSeconds to a high enough value. AWS Batch doesn't apply a timeout unless you set one, but a value copied from a shorter job (like the one-hour limit on our pre-processing definition) can kill your job before it completes. However, don’t let jobs run forever! If a job is stuck, it’s better to fail fast, notify, and investigate.

Final Thoughts and Next Steps

We’ve walked through an advanced AWS Batch reference architecture, complete with a CloudFormation template to stand up three compute environments, job queues, and job definitions. Our pipeline scenario demonstrated how pre-processing, HPC analysis, and post-processing tasks can flow together with either AWS Batch dependencies or an external orchestrator like Step Functions.

Key Takeaways

Separate Environments by Cost and Requirements

Use On-Demand for critical tasks, Spot for interruptible tasks, HPC for specialized GPU or large-memory nodes.

Configure Dependencies and Retries

Try to keep it simple with built-in job dependencies, and use Step Functions if you need complex branching and error-handling.

Optimize for Cost

Spot Instances, ephemeral storage, custom AMIs, and instance-type diversification can significantly lower your AWS bill.

Monitor and Fine-Tune

Collect CloudWatch metrics and logs. Configure alarms. Evaluate resource usage and scale or refine job definitions accordingly.

EFA and HPC

For truly HPC-level stuff, consider a Launch Template that enables Elastic Fabric Adapter (EFA). It really is fast.

That's it. Now go Batch happily, or something like that. I'm not good at inspirational quotes and influencer stuff.